Remove inactive volumes to use the disk. Disk #1,2,3,4.

Firmware 6.6.1

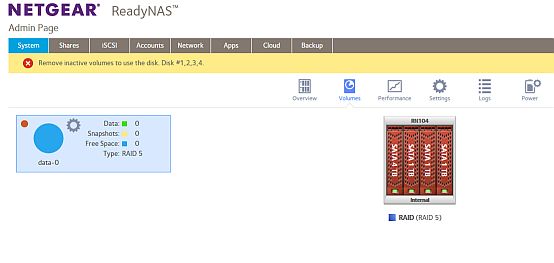

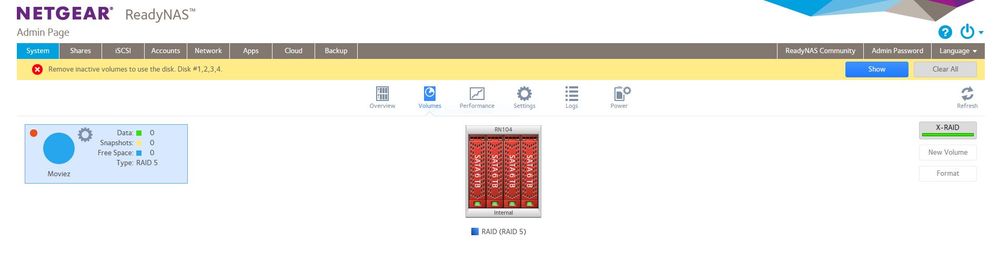

I had 4 x 1TB drives in my system and planned to upgrade one disk a month for four months to achieve a 4 x 4TB system. The initial swap of the first drive seemed to go well, but when I came back about 7 hours later this warning message was showing on the Admin page under Volumes. I see several threads about this problem being fixed, but none detail the actual method that was used. I would prefer NOT to lose the data on the NAS, so I'm curious what the process is here. I have the log files downloaded as well.

As a side note, I attempted to buy premium support, but on the last page, after entering my credit card info, there is no option to click "next" or "enter". It just hits a dead end.

Thank you

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Before starting:

I can't see your screenshot as it wasn't approved by a moderator yet.

You may want to upvote this "idea": https://community.netgear.com/t5/Idea-Exchange-for-ReadyNAS/Change-the-incredibly-confusing-error-me...

Do you know if any drive is showing errors, such as reallocated sectors, pending sectors, or ATA errors? In the GUI, go to System / Performance and hover the cursor over the indicator beside the disk number (or look in disk_info.log from the log bundle).

In dmesg.log, do you see any error containing "md127" (start from the end of the file)?
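If you'd rather not scroll through dmesg.log by hand, here is a minimal Python sketch that keeps only the lines mentioning the data array, newest last. It assumes dmesg.log has been extracted from the log bundle into the current directory; `md_lines` is a hypothetical helper name.

```python
from pathlib import Path


def md_lines(log_text: str, device: str = "md127", last: int = 20) -> list:
    """Return the last `last` lines of a dmesg log that mention `device`.

    The array is assembled on every boot, so the most recent matches
    (at the end of the file) describe the current state.
    """
    hits = [line for line in log_text.splitlines() if device in line]
    return hits[-last:]


if __name__ == "__main__":
    log = Path("dmesg.log")  # assumed location of the extracted log
    if log.exists():
        for line in md_lines(log.read_text(errors="replace")):
            print(line)
```

Note that some relevant messages, such as "md: kicking non-fresh sda3 from array!", do not contain the array name, so it is still worth reading the lines around each match in the file itself.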

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

@jak0lantash wrote:

I can't see your screenshot as it wasn't approved by a moderator yet.

I just took care of that.

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Thanks for the fast replies.

On disks 2, 3 and 4 (the original 1TB drives) I show 10, 0 and 4 ATA errors respectively. Disk 1, the new 4TB drive, also shows 0.

Here's what I found towards the end of the dmesg.log file:

[Sun Apr 30 20:06:20 2017] md: md127 stopped.

[Sun Apr 30 20:06:21 2017] md: bind<sda3>

[Sun Apr 30 20:06:21 2017] md: bind<sdc3>

[Sun Apr 30 20:06:21 2017] md: bind<sdd3>

[Sun Apr 30 20:06:21 2017] md: bind<sdb3>

[Sun Apr 30 20:06:21 2017] md: kicking non-fresh sda3 from array!

[Sun Apr 30 20:06:21 2017] md: unbind<sda3>

[Sun Apr 30 20:06:21 2017] md: export_rdev(sda3)

[Sun Apr 30 20:06:21 2017] md/raid:md127: device sdb3 operational as raid disk 1

[Sun Apr 30 20:06:21 2017] md/raid:md127: device sdc3 operational as raid disk 2

[Sun Apr 30 20:06:21 2017] md/raid:md127: allocated 4280kB

[Sun Apr 30 20:06:21 2017] md/raid:md127: not enough operational devices (2/4 failed)

[Sun Apr 30 20:06:21 2017] RAID conf printout:

[Sun Apr 30 20:06:21 2017] --- level:5 rd:4 wd:2

[Sun Apr 30 20:06:21 2017] disk 1, o:1, dev:sdb3

[Sun Apr 30 20:06:21 2017] disk 2, o:1, dev:sdc3

[Sun Apr 30 20:06:21 2017] md/raid:md127: failed to run raid set.

[Sun Apr 30 20:06:21 2017] md: pers->run() failed ...

[Sun Apr 30 20:06:21 2017] md: md127 stopped.

[Sun Apr 30 20:06:21 2017] md: unbind<sdb3>

[Sun Apr 30 20:06:21 2017] md: export_rdev(sdb3)

[Sun Apr 30 20:06:21 2017] md: unbind<sdd3>

[Sun Apr 30 20:06:21 2017] md: export_rdev(sdd3)

[Sun Apr 30 20:06:21 2017] md: unbind<sdc3>

[Sun Apr 30 20:06:21 2017] md: export_rdev(sdc3)

[Sun Apr 30 20:06:21 2017] systemd[1]: Started udev Kernel Device Manager.

[Sun Apr 30 20:06:21 2017] systemd[1]: Started MD arrays.

[Sun Apr 30 20:06:21 2017] systemd[1]: Reached target Local File Systems (Pre).

[Sun Apr 30 20:06:21 2017] systemd[1]: Found device /dev/md1.

[Sun Apr 30 20:06:21 2017] systemd[1]: Activating swap md1...

[Sun Apr 30 20:06:21 2017] Adding 1046524k swap on /dev/md1. Priority:-1 extents:1 across:1046524k

[Sun Apr 30 20:06:21 2017] systemd[1]: Activated swap md1.

[Sun Apr 30 20:06:21 2017] systemd[1]: Started Journal Service.

[Sun Apr 30 20:06:21 2017] systemd-journald[1020]: Received request to flush runtime journal from PID 1

[Sun Apr 30 20:07:09 2017] md: md1: resync done.

[Sun Apr 30 20:07:09 2017] RAID conf printout:

[Sun Apr 30 20:07:09 2017] --- level:6 rd:4 wd:4

[Sun Apr 30 20:07:09 2017] disk 0, o:1, dev:sda2

[Sun Apr 30 20:07:09 2017] disk 1, o:1, dev:sdb2

[Sun Apr 30 20:07:09 2017] disk 2, o:1, dev:sdc2

[Sun Apr 30 20:07:09 2017] disk 3, o:1, dev:sdd2

[Sun Apr 30 20:07:51 2017] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

[Sun Apr 30 20:07:56 2017] mvneta d0070000.ethernet eth0: Link is Up - 1Gbps/Full - flow control off

[Sun Apr 30 20:07:56 2017] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Well, that's not a very good start. sda is not in sync with sdb and sdc, and sdd is not in the RAID array. In other words, this is a dual disk failure (the one you removed, plus one dead): a second disk failed before the RAID array finished rebuilding onto the new one.

You can check the channel numbers, the device names and serial numbers in disk_info.log (channel number starts at zero).
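The reading above comes from three kinds of md messages. As a sketch of how to pull them out of one assembly attempt, here is a small Python parser; `summarize_attempt` and its output keys are hypothetical names, and the regular expressions only cover the message wording that appears in this thread.

```python
import re

KICKED = re.compile(r"kicking non-fresh (\w+) from array")
OPERATIONAL = re.compile(r"md/raid:(\w+): device (\w+) operational as raid disk (\d+)")
NOT_ENOUGH = re.compile(r"md/raid:(\w+): not enough operational devices \((\d+)/(\d+) failed\)")


def summarize_attempt(lines, array="md127"):
    """Summarize one RAID assembly attempt from dmesg lines.

    Pass only the lines of a single attempt: "kicking non-fresh" messages
    do not name the array, so they cannot be filtered by `array`.
    """
    kicked, operational, failed = [], {}, None
    for line in lines:
        if m := KICKED.search(line):
            kicked.append(m.group(1))
        elif (m := OPERATIONAL.search(line)) and m.group(1) == array:
            operational[int(m.group(3))] = m.group(2)
        elif (m := NOT_ENOUGH.search(line)) and m.group(1) == array:
            failed = (int(m.group(2)), int(m.group(3)))
    missing = None
    if failed:
        # Slots (raid disk numbers) that have no operational member.
        missing = sorted(set(range(failed[1])) - set(operational))
    return {"kicked": kicked, "operational": operational,
            "failed": failed, "missing_slots": missing}
```

Fed the 30 April lines above, this reports sda3 as kicked, sdb3 and sdc3 operational in slots 1 and 2, and slots 0 and 3 missing, which is the two-member gap that RAID 5 cannot survive.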

This is a tricky situation, but you can try the following:

1. Gracefully shut down the NAS from the GUI.

2. Remove the new drive you inserted (it's not in sync anyway).

3. Re-insert the old drive.

4. Boot the NAS.

5. If it boots OK and the volume is accessible, make a full backup and/or replace the disk that is not in sync with a brand new one.

You have two disks with ATA errors, which is not very good. Resyncing the RAID array puts strain on all the disks, which can push a damaged or old disk to its limits.

Alternatively, you can contact NETGEAR for a Data Recovery contract. They can assess the situation and help you recover from it.

Thanks @StephenB for approving the screenshot.

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Just to confirm what you are saying, I should actually put the original back in the Drive A slot but then it also seems I should just put the new 4TB WD Red drive in Slot D to replace the "dead" one, correct?

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

I still had the error message, but realized I had never cleared it, so I wasn't sure if it was still current or not. I cleared it and rebooted (with the existing 1TB drive in slot D), and here's what dmesg.log came back with:

[Mon May 1 17:29:18 2017] md: md0 stopped.

[Mon May 1 17:29:18 2017] md: bind<sdb1>

[Mon May 1 17:29:18 2017] md: bind<sdc1>

[Mon May 1 17:29:18 2017] md: bind<sdd1>

[Mon May 1 17:29:18 2017] md: bind<sda1>

[Mon May 1 17:29:18 2017] md: kicking non-fresh sdd1 from array!

[Mon May 1 17:29:18 2017] md: unbind<sdd1>

[Mon May 1 17:29:18 2017] md: export_rdev(sdd1)

[Mon May 1 17:29:18 2017] md/raid1:md0: active with 3 out of 4 mirrors

[Mon May 1 17:29:18 2017] md0: detected capacity change from 0 to 4290772992

[Mon May 1 17:29:18 2017] md: md1 stopped.

[Mon May 1 17:29:18 2017] md: bind<sdb2>

[Mon May 1 17:29:18 2017] md: bind<sdc2>

[Mon May 1 17:29:18 2017] md: bind<sda2>

[Mon May 1 17:29:18 2017] md/raid:md1: device sda2 operational as raid disk 0

[Mon May 1 17:29:18 2017] md/raid:md1: device sdc2 operational as raid disk 2

[Mon May 1 17:29:18 2017] md/raid:md1: device sdb2 operational as raid disk 1

[Mon May 1 17:29:18 2017] md/raid:md1: allocated 4280kB

[Mon May 1 17:29:18 2017] md/raid:md1: raid level 6 active with 3 out of 4 devices, algorithm 2

[Mon May 1 17:29:18 2017] RAID conf printout:

[Mon May 1 17:29:18 2017] --- level:6 rd:4 wd:3

[Mon May 1 17:29:18 2017] disk 0, o:1, dev:sda2

[Mon May 1 17:29:18 2017] disk 1, o:1, dev:sdb2

[Mon May 1 17:29:18 2017] disk 2, o:1, dev:sdc2

[Mon May 1 17:29:18 2017] md1: detected capacity change from 0 to 1071644672

[Mon May 1 17:29:19 2017] EXT4-fs (md0): mounted filesystem with ordered data mode. Opts: (null)

[Mon May 1 17:29:19 2017] systemd[1]: Failed to insert module 'kdbus': Function not implemented

[Mon May 1 17:29:19 2017] systemd[1]: systemd 230 running in system mode. (+PAM +AUDIT +SELINUX +IMA +APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 +SECCOMP +BLKID +ELFUTILS +KMOD +IDN)

[Mon May 1 17:29:19 2017] systemd[1]: Detected architecture arm.

[Mon May 1 17:29:19 2017] systemd[1]: Set hostname to <ReadyNAS>.

[Mon May 1 17:29:20 2017] systemd[1]: Listening on Journal Socket.

[Mon May 1 17:29:20 2017] systemd[1]: Created slice User and Session Slice.

[Mon May 1 17:29:20 2017] systemd[1]: Reached target Remote File Systems (Pre).

[Mon May 1 17:29:20 2017] systemd[1]: Started Dispatch Password Requests to Console Directory Watch.

[Mon May 1 17:29:20 2017] systemd[1]: Reached target Encrypted Volumes.

[Mon May 1 17:29:20 2017] systemd[1]: Created slice System Slice.

[Mon May 1 17:29:20 2017] systemd[1]: Created slice system-getty.slice.

[Mon May 1 17:29:20 2017] systemd[1]: Starting Create list of required static device nodes for the current kernel...

[Mon May 1 17:29:20 2017] systemd[1]: Listening on Journal Socket (/dev/log).

[Mon May 1 17:29:20 2017] systemd[1]: Starting Journal Service...

[Mon May 1 17:29:20 2017] systemd[1]: Listening on udev Control Socket.

[Mon May 1 17:29:20 2017] systemd[1]: Starting MD arrays...

[Mon May 1 17:29:20 2017] systemd[1]: Created slice system-serial\x2dgetty.slice.

[Mon May 1 17:29:20 2017] systemd[1]: Reached target Slices.

[Mon May 1 17:29:20 2017] systemd[1]: Starting hwclock.service...

[Mon May 1 17:29:20 2017] systemd[1]: Mounting POSIX Message Queue File System...

[Mon May 1 17:29:20 2017] systemd[1]: Started ReadyNAS LCD splasher.

[Mon May 1 17:29:20 2017] systemd[1]: Starting ReadyNASOS system prep...

[Mon May 1 17:29:20 2017] systemd[1]: Reached target Remote File Systems.

[Mon May 1 17:29:20 2017] systemd[1]: Started Forward Password Requests to Wall Directory Watch.

[Mon May 1 17:29:21 2017] systemd[1]: Reached target Paths.

[Mon May 1 17:29:21 2017] systemd[1]: Listening on udev Kernel Socket.

[Mon May 1 17:29:21 2017] systemd[1]: Starting Load Kernel Modules...

[Mon May 1 17:29:21 2017] systemd[1]: Listening on /dev/initctl Compatibility Named Pipe.

[Mon May 1 17:29:21 2017] systemd[1]: Mounted POSIX Message Queue File System.

[Mon May 1 17:29:21 2017] systemd[1]: Started Create list of required static device nodes for the current kernel.

[Mon May 1 17:29:21 2017] systemd[1]: Started ReadyNASOS system prep.

[Mon May 1 17:29:21 2017] systemd[1]: Started Load Kernel Modules.

[Mon May 1 17:29:21 2017] systemd[1]: Mounting Configuration File System...

[Mon May 1 17:29:21 2017] systemd[1]: Starting Apply Kernel Variables...

[Mon May 1 17:29:21 2017] systemd[1]: Mounting FUSE Control File System...

[Mon May 1 17:29:21 2017] systemd[1]: Starting Create Static Device Nodes in /dev...

[Mon May 1 17:29:21 2017] systemd[1]: Mounted Configuration File System.

[Mon May 1 17:29:21 2017] systemd[1]: Mounted FUSE Control File System.

[Mon May 1 17:29:21 2017] systemd[1]: Started Apply Kernel Variables.

[Mon May 1 17:29:21 2017] systemd[1]: Started hwclock.service.

[Mon May 1 17:29:21 2017] systemd[1]: Reached target System Time Synchronized.

[Mon May 1 17:29:21 2017] systemd[1]: Starting Remount Root and Kernel File Systems...

[Mon May 1 17:29:21 2017] systemd[1]: Started Create Static Device Nodes in /dev.

[Mon May 1 17:29:21 2017] systemd[1]: Starting udev Kernel Device Manager...

[Mon May 1 17:29:21 2017] systemd[1]: Started Remount Root and Kernel File Systems.

[Mon May 1 17:29:21 2017] systemd[1]: Starting Rebuild Hardware Database...

[Mon May 1 17:29:21 2017] systemd[1]: Starting Load/Save Random Seed...

[Mon May 1 17:29:21 2017] systemd[1]: Started Load/Save Random Seed.

[Mon May 1 17:29:22 2017] systemd[1]: Started udev Kernel Device Manager.

[Mon May 1 17:29:22 2017] md: md127 stopped.

[Mon May 1 17:29:22 2017] md: bind<sda3>

[Mon May 1 17:29:22 2017] md: bind<sdc3>

[Mon May 1 17:29:22 2017] md: bind<sdd3>

[Mon May 1 17:29:22 2017] md: bind<sdb3>

[Mon May 1 17:29:22 2017] md: kicking non-fresh sdd3 from array!

[Mon May 1 17:29:22 2017] md: unbind<sdd3>

[Mon May 1 17:29:22 2017] md: export_rdev(sdd3)

[Mon May 1 17:29:22 2017] md: kicking non-fresh sda3 from array!

[Mon May 1 17:29:22 2017] md: unbind<sda3>

[Mon May 1 17:29:22 2017] md: export_rdev(sda3)

[Mon May 1 17:29:22 2017] md/raid:md127: device sdb3 operational as raid disk 1

[Mon May 1 17:29:22 2017] md/raid:md127: device sdc3 operational as raid disk 2

[Mon May 1 17:29:22 2017] md/raid:md127: allocated 4280kB

[Mon May 1 17:29:22 2017] md/raid:md127: not enough operational devices (2/4 failed)

[Mon May 1 17:29:22 2017] RAID conf printout:

[Mon May 1 17:29:22 2017] --- level:5 rd:4 wd:2

[Mon May 1 17:29:22 2017] disk 1, o:1, dev:sdb3

[Mon May 1 17:29:22 2017] disk 2, o:1, dev:sdc3

[Mon May 1 17:29:22 2017] md/raid:md127: failed to run raid set.

[Mon May 1 17:29:22 2017] md: pers->run() failed ...

[Mon May 1 17:29:22 2017] md: md127 stopped.

[Mon May 1 17:29:22 2017] md: unbind<sdb3>

[Mon May 1 17:29:22 2017] md: export_rdev(sdb3)

[Mon May 1 17:29:22 2017] md: unbind<sdc3>

[Mon May 1 17:29:22 2017] md: export_rdev(sdc3)

[Mon May 1 17:29:22 2017] systemd[1]: Started MD arrays.

[Mon May 1 17:29:22 2017] systemd[1]: Reached target Local File Systems (Pre).

[Mon May 1 17:29:22 2017] systemd[1]: Started Journal Service.

[Mon May 1 17:29:22 2017] Adding 1046524k swap on /dev/md1. Priority:-1 extents:1 across:1046524k

[Mon May 1 17:29:22 2017] systemd-journald[996]: Received request to flush runtime journal from PID 1

[Mon May 1 17:31:39 2017] md: export_rdev(sdd1)

[Mon May 1 17:31:39 2017] md: bind<sdd1>

[Mon May 1 17:31:39 2017] RAID1 conf printout:

[Mon May 1 17:31:39 2017] --- wd:3 rd:4

[Mon May 1 17:31:39 2017] disk 0, wo:0, o:1, dev:sda1

[Mon May 1 17:31:39 2017] disk 1, wo:0, o:1, dev:sdb1

[Mon May 1 17:31:39 2017] disk 2, wo:0, o:1, dev:sdc1

[Mon May 1 17:31:39 2017] disk 3, wo:1, o:1, dev:sdd1

[Mon May 1 17:31:39 2017] md: recovery of RAID array md0

[Mon May 1 17:31:39 2017] md: minimum _guaranteed_ speed: 30000 KB/sec/disk.

[Mon May 1 17:31:39 2017] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for recovery.

[Mon May 1 17:31:39 2017] md: using 128k window, over a total of 4190208k.

[Mon May 1 17:33:02 2017] md: md0: recovery done.

[Mon May 1 17:33:02 2017] RAID1 conf printout:

[Mon May 1 17:33:02 2017] --- wd:4 rd:4

[Mon May 1 17:33:02 2017] disk 0, wo:0, o:1, dev:sda1

[Mon May 1 17:33:02 2017] disk 1, wo:0, o:1, dev:sdb1

[Mon May 1 17:33:02 2017] disk 2, wo:0, o:1, dev:sdc1

[Mon May 1 17:33:02 2017] disk 3, wo:0, o:1, dev:sdd1

[Mon May 1 17:33:03 2017] md1: detected capacity change from 1071644672 to 0

[Mon May 1 17:33:03 2017] md: md1 stopped.

[Mon May 1 17:33:03 2017] md: unbind<sda2>

[Mon May 1 17:33:03 2017] md: export_rdev(sda2)

[Mon May 1 17:33:03 2017] md: unbind<sdc2>

[Mon May 1 17:33:03 2017] md: export_rdev(sdc2)

[Mon May 1 17:33:03 2017] md: unbind<sdb2>

[Mon May 1 17:33:03 2017] md: export_rdev(sdb2)

[Mon May 1 17:33:04 2017] md: bind<sda2>

[Mon May 1 17:33:04 2017] md: bind<sdb2>

[Mon May 1 17:33:04 2017] md: bind<sdc2>

[Mon May 1 17:33:04 2017] md: bind<sdd2>

[Mon May 1 17:33:04 2017] md/raid:md1: not clean -- starting background reconstruction

[Mon May 1 17:33:04 2017] md/raid:md1: device sdd2 operational as raid disk 3

[Mon May 1 17:33:04 2017] md/raid:md1: device sdc2 operational as raid disk 2

[Mon May 1 17:33:04 2017] md/raid:md1: device sdb2 operational as raid disk 1

[Mon May 1 17:33:04 2017] md/raid:md1: device sda2 operational as raid disk 0

[Mon May 1 17:33:04 2017] md/raid:md1: allocated 4280kB

[Mon May 1 17:33:04 2017] md/raid:md1: raid level 6 active with 4 out of 4 devices, algorithm 2

[Mon May 1 17:33:04 2017] RAID conf printout:

[Mon May 1 17:33:04 2017] --- level:6 rd:4 wd:4

[Mon May 1 17:33:04 2017] disk 0, o:1, dev:sda2

[Mon May 1 17:33:04 2017] disk 1, o:1, dev:sdb2

[Mon May 1 17:33:04 2017] disk 2, o:1, dev:sdc2

[Mon May 1 17:33:04 2017] disk 3, o:1, dev:sdd2

[Mon May 1 17:33:04 2017] md1: detected capacity change from 0 to 1071644672

[Mon May 1 17:33:04 2017] md: resync of RAID array md1

[Mon May 1 17:33:04 2017] md: minimum _guaranteed_ speed: 30000 KB/sec/disk.

[Mon May 1 17:33:04 2017] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for resync.

[Mon May 1 17:33:04 2017] md: using 128k window, over a total of 523264k.

[Mon May 1 17:33:05 2017] Adding 1046524k swap on /dev/md1. Priority:-1 extents:1 across:1046524k

[Mon May 1 17:34:01 2017] md: md1: resync done.

[Mon May 1 17:34:01 2017] RAID conf printout:

[Mon May 1 17:34:01 2017] --- level:6 rd:4 wd:4

[Mon May 1 17:34:01 2017] disk 0, o:1, dev:sda2

[Mon May 1 17:34:01 2017] disk 1, o:1, dev:sdb2

[Mon May 1 17:34:01 2017] disk 2, o:1, dev:sdc2

[Mon May 1 17:34:01 2017] disk 3, o:1, dev:sdd2

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Operationally and statistically this doesn't make any sense. The drives stay active all the time with backups and streaming media, so I'm not sure why doing a disk upgrade would cause abnormal stress. But even if that is the case and drive D suddenly died, I replaced drive A with the original, fully functional drive, which should have recovered the system. Also, the NAS is on a power-conditioning UPS, so power failure was not a cause.

Based on the MANY threads on this same topic, I don't think this is the result of a double drive failure. I think there is a firmware or hardware issue that is making the single most important feature of a RAID 5 NAS unreliable.

Even if I could figure out how to pay Netgear for support on this, I don't have any confidence this same thing won't happen next time so I'm not sure it's worth the investment.

Thank you for your assistance though. It was greatly appreciated.

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

@Oversteer71 wrote:

I'm not sure why doing a disk upgrade would cause abnormal stress.

I just wanted to comment on this aspect. Disk replacement (and also volume expansion) require every sector on every disk in the data volume to either be read or written. If there are as-yet undetected bad sectors they certainly can turn up during the resync process.

The disk I/O also is likely higher during resync than normal operation (though that depends on the normal operating load for the NAS).
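To put rough numbers on how long that sustained load lasts, here is a back-of-the-envelope Python sketch. It uses the md speed limits printed in the logs in this thread (30000 KB/sec guaranteed minimum, 200000 KB/sec cap) and the md0 recovery above (4190208k between 17:31:39 and 17:33:02, i.e. 83 s). The 1 TB partition size is only an example, and it assumes md's "KB" means 1024 bytes; real speed depends on the drives and on the NAS's normal load.

```python
def resync_hours(partition_bytes: int, speed_kb_per_s: float) -> float:
    """Time to touch every sector of one member once at a steady speed.

    md reports speeds in KB/sec; this treats 1 KB as 1024 bytes.
    """
    return partition_bytes / (speed_kb_per_s * 1024) / 3600


def observed_kb_per_s(total_kb: int, seconds: float) -> float:
    """Average speed from the 'over a total of ...k' line and the start/end timestamps."""
    return total_kb / seconds


if __name__ == "__main__":
    one_tb = 10**12
    print(f"1 TB at 30000 KB/s:  {resync_hours(one_tb, 30_000):.1f} h")   # md's guaranteed minimum
    print(f"1 TB at 200000 KB/s: {resync_hours(one_tb, 200_000):.1f} h")  # md's cap in these logs
    # md0 recovery above: 4190208k between 17:31:39 and 17:33:02 (83 s)
    print(f"md0 observed: {observed_kb_per_s(4_190_208, 83):.0f} KB/s")
```

That works out to roughly 1.4 to 9 hours of continuous reads or writes per terabyte on every member, which is why a marginal drive can give up during a resync even if it copes with everyday use.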

As far as replacing the original drive A goes: if there have been any updates to the volume (including automatic updates like snapshots), then that usually won't help. There are event counters on each drive, and if they don't match, mdadm won't assemble the array, so the volume won't mount (unless you force it to).
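A deliberately simplified Python model of that event-counter check follows. It treats any member whose count is below the highest one as stale, which ignores real md nuances such as write-intent bitmaps, and it ignores what `mdadm --assemble --force` can override (at a risk). The "Events" value is what `mdadm --examine` prints for each member; the counts used below are made up for illustration, and `Member`/`assess` are hypothetical names.

```python
from dataclasses import dataclass

# Missing members each level can survive (RAID 5: one parity block, RAID 6: two).
TOLERANCE = {5: 1, 6: 2}


@dataclass
class Member:
    device: str
    events: int  # the "Events" value that `mdadm --examine` prints per member


def assess(level: int, raid_disks: int, members: list) -> tuple:
    """Simplified model: only members with the highest event count are fresh."""
    newest = max(m.events for m in members)
    fresh = [m.device for m in members if m.events == newest]
    can_start = raid_disks - len(fresh) <= TOLERANCE[level]
    return fresh, can_start


if __name__ == "__main__":
    # Made-up counts: the old drive A was out of the array while it kept changing.
    members = [Member("sda3", 1200), Member("sdb3", 1500),
               Member("sdc3", 1500), Member("sdd3", 1490)]
    print(assess(5, 4, members))  # (['sdb3', 'sdc3'], False)
```

In this model, putting the original drive back does not add a fresh member: its counter stopped advancing when it was pulled, so it is kicked as non-fresh just like the partially synced new drive.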

That said, I have seen too many unexplained "inactive volumes" threads here - so I also am not convinced that double-drive failure is the only cause of this problem.

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

A similar issue happened to me on an RN104 about an hour after upgrading to 6.7.1.

I pulled each disk and hooked it to a Linux Mint system: no SMART errors on any drive.

I ended up having to rebuild the volume.

Luckily it was only used to back up a running NAS314 system.

The RN104 had four 3TB drives in it and 1.5TB of free space.

About an hour after the upgrade, the system became unresponsive and showed btrfs errors on the LCD.

It seems OK now; it's doing backups and resyncs.

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

@Oversteer71 wrote:

Based on the MANY threads on this same topic, I don't think this is the result of a double drive failure. I think there is a firmware or hardware issue that is making the single most important feature of a RAID 5 NAS unreliable.

I doubt that you will see a lot of people here talking about their dead toaster. It's a storage forum, so yes, there are a lot of people talking about storage issues.

Two of your drives have ATA errors; this is a serious condition. They may work perfectly fine in normal operation, but when put under strain they may not sustain it. The full SMART logs would show when those ATA errors were raised.

https://kb.netgear.com/19392/ATA-errors-increasing-on-disk-s-in-ReadyNAS
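If you want to read the full SMART data yourself, for example with the drive attached to a Linux PC as another poster did, `smartctl -A /dev/sdX` prints the attribute table and `smartctl -l error /dev/sdX` prints the ATA error log (both from smartmontools, usually run as root). Here is a minimal Python sketch that parses that text; the function names and the choice of watched attributes are assumptions, and it only handles the standard ATA attribute table layout.

```python
import re

# Counters discussed in this thread (reallocated/pending sectors) plus two
# related ones; UDMA_CRC_Error_Count can also point at cabling or connectors.
WATCH = ("Reallocated_Sector_Ct", "Current_Pending_Sector",
         "Offline_Uncorrectable", "UDMA_CRC_Error_Count")


def smart_raw_values(attr_output: str) -> dict:
    """Parse `smartctl -A` attribute rows into {name: raw value}."""
    values = {}
    for line in attr_output.splitlines():
        parts = line.split()
        # Attribute rows start with a numeric ID; RAW_VALUE is the 10th column.
        if len(parts) >= 10 and parts[0].isdigit() and parts[9].isdigit():
            values[parts[1]] = int(parts[9])
    return values


def nonzero_watched(values: dict) -> dict:
    """Keep only the watched counters that are above zero."""
    return {name: v for name, v in values.items() if name in WATCH and v > 0}


def ata_error_count(error_log_output: str) -> int:
    """Read the 'ATA Error Count' line from `smartctl -l error`; 0 if none is logged."""
    m = re.search(r"ATA Error Count:\s*(\d+)", error_log_output)
    return int(m.group(1)) if m else 0
```

The error log also records the drive's power-on hours for each of the most recent errors, which is how you would tell whether the ATA errors are old or were raised during the resync.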

As I mentioned before and as @StephenB explained, resync is a stressful process for all drives.

The "issue" may be elsewhere, but it's certainly not obvious that this isn't it.

From mdadm's point of view, it's a dual disk failure:

[Mon May 1 17:29:22 2017] md/raid:md127: not enough operational devices (2/4 failed)

Also, if nothing had happened at the drive level, it is unlikely that sdd1 would have been marked as out of sync, as md0 is resynced first. Yet it was:

[Mon May 1 17:29:18 2017] md: kicking non-fresh sdd1 from array!

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Hi,

I have a similar problem with my RN104, which until tonight had been running without issue with 4x6TB drives installed in a RAID5 configuration.

After a reboot I get the message "Remove inactive volumes in order to use the disk. Disk #1,2,3,4".

I have no backup, and I need the data!

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Maybe you want to upvote this "idea": https://community.netgear.com/t5/Idea-Exchange-for-ReadyNAS/Change-the-incredibly-confusing-error-me...

You probably don't want to hear that right now, but you should always have a backup of your (important) data.

I can't see your screenshot as it hasn't been approved by the moderators yet, so apologies if it already answers one of these questions.

What happened before the volume became red?

Are all your drives physically healthy? You can check under System / Performance: hover the mouse over the colored circle beside each disk and look at the error counters (Reallocated Sectors, Pending Sectors, ATA Errors, etc.).

What does the LCD of your NAS show?

If you download the logs from the GUI and search for "md127" in dmesg.log, what does it tell you?

What F/W are you running?

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

What happened before the volume became red? - The file transfer stopped and 1 share disappeared. After restart - no data in share folder.

Are all your drives physically healthy? You can check under System / Performance: hover the mouse over the colored circle beside each disk and look at the error counters (Reallocated Sectors, Pending Sectors, ATA Errors, etc.). - All 4 HDDs are healthy: all errors, reallocated sectors, etc. are 0.

What does the LCD of your NAS show? - No error messages on the NAS LCD.

Status on the RAIDar screen is : Volume ; RAID Level 5 , Inactive , 0MB (0%) of 0 MB used

If you download the logs from the GUI and search for "md127" in dmesg.log, what does it tell you?

[Tue May 2 21:24:58 2017] md: md127 stopped.

[Tue May 2 21:24:58 2017] md/raid:md127: device sdd3 operational as raid disk 0

[Tue May 2 21:24:58 2017] md/raid:md127: device sda3 operational as raid disk 3

[Tue May 2 21:24:58 2017] md/raid:md127: device sdb3 operational as raid disk 2

[Tue May 2 21:24:58 2017] md/raid:md127: device sdc3 operational as raid disk 1

[Tue May 2 21:24:58 2017] md/raid:md127: allocated 4280kB

[Tue May 2 21:24:58 2017] md/raid:md127: raid level 5 active with 4 out of 4 devices, algorithm 2

[Tue May 2 21:24:58 2017] RAID conf printout:

[Tue May 2 21:24:58 2017] --- level:5 rd:4 wd:4

[Tue May 2 21:24:58 2017] disk 0, o:1, dev:sdd3

[Tue May 2 21:24:58 2017] disk 1, o:1, dev:sdc3

[Tue May 2 21:24:58 2017] disk 2, o:1, dev:sdb3

[Tue May 2 21:24:58 2017] disk 3, o:1, dev:sda3

[Tue May 2 21:24:58 2017] md127: detected capacity change from 0 to 17988626939904

[Tue May 2 21:24:59 2017] BTRFS info (device md127): setting nodatasum

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707723776 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707723776 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707731968 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707731968 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707740160 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707740160 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707748352 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707748352 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707723776 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707723776 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707731968 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707731968 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707740160 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707740160 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707748352 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707748352 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707723776 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707723776 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707731968 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707731968 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707740160 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707740160 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707748352 len 4096

[Tue May 2 21:24:59 2017] BTRFS critical (device md127): unable to find logical 9299707748352 len 4096

[Tue May 2 21:24:59 2017] BTRFS: failed to read tree root on md127

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707723776

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707727872

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707731968

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707736064

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707740160

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707744256

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707748352

[Tue May 2 21:24:59 2017] BTRFS warning (device md127): page private not zero on page 9299707752448

[Tue May 2 21:24:59 2017] BTRFS: open_ctree failed

What F/W are you running? 6.6.1

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

So the issue is not the RAID array. The BTRFS volume is corrupted.

You can try to boot the NAS in Read-only mode via the Boot Menu: https://kb.netgear.com/20898/ReadyNAS-ReadyDATA-Boot-Menu

If you have access to your data, back it up.

If you don't, you will need to attempt to recover the BTRFS volume. If you're confident, research "btrfs restore". If not, you will need to contact a data recovery specialist. NETGEAR does offer this kind of service.
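As a starting point for that research: `btrfs restore` copies files out of an unmountable BTRFS filesystem to another location without writing to the damaged one. This Python sketch only builds the command line so you can review it before running anything; `restore_cmd` is a hypothetical helper, /dev/md127 comes from the log above, and /mnt/rescue is an assumed mount point on a separate disk with enough free space. It starts with a dry run (`-D`), which lists what would be restored without copying anything.

```python
import shlex


def restore_cmd(device: str, target_dir: str, dry_run: bool = True) -> list:
    """Build a `btrfs restore` command line.

    -v: list files as they are processed
    -i: keep going after errors instead of stopping
    -D: dry run, list what would be restored without writing anything
    """
    cmd = ["btrfs", "restore", "-v", "-i"]
    if dry_run:
        cmd.append("-D")
    return cmd + [device, target_dir]


if __name__ == "__main__":
    # /mnt/rescue is a hypothetical mount point on a separate disk.
    print(shlex.join(restore_cmd("/dev/md127", "/mnt/rescue")))
```

Only drop the dry-run flag once the listing shows the files you expect; if it finds nothing, that is the point to involve a recovery specialist rather than experimenting further.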

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Hi,

Thanks for the detailed explanation. I had basically exactly the same problem.

I had been upgrading from 2TB drives to 4TB drives progressively, but my 4TB drive in slot 4 was showing ATA errors (3175, to be precise).

I only had slots 1 and 2 left to upgrade, so I removed the 2TB HDD in slot 1 and started resyncing.

It turns out the NAS shut itself down twice while resyncing (!), and each time I powered it back on. After the first time, it kept syncing. After the second time, it wouldn't boot: I had btrfs_search_forward+2ac on the front display. I googled it, didn't find much, waited 2 hours, then powered the NAS down and rebooted it.

Then all my volumes had gone red & I had the "Remove inactive volumes".

Anyway, I decided to remove the 4TB disk from slot1 & reinsert the former 2TB drive inside (like you suggested).

Upon rebooting, I'm seeing a "no volume" in the admin page but the screen of the NAS shows "Recovery test 2.86%"

So I am crossing fingers & toes that it is actually rebuilding the array...

If it works, I shall save all I can prior to attempting any further upgrade, but given the situation, how would you go about it? Replace the failing 4TB in slot4 with another 4TB first?

Thanks in advance

Remi

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Well, that's one of the biggest difficulties with a dead volume: a step can be good in one situation and bad in another. That's why it's always better to assess the issue by looking at the logs before removing drives, reinserting others, etc.

3000+ ATA errors is very high, bad even, and there's a good chance that's why your NAS shuts down.

You'll have to wait for the LCD to show that it's complete. Then, if your volume is readable, make a full backup, then replace the erroring drive first. If necessary, download your logs, look for md127 in dmesg.log and post an extract here.

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

```
[Thu May 4 23:58:14 2017] md: md127 stopped.
[Thu May 4 23:58:14 2017] md: bind<sda3>
[Thu May 4 23:58:14 2017] md: bind<sdb3>
[Thu May 4 23:58:14 2017] md: bind<sdc3>
[Thu May 4 23:58:14 2017] md: kicking non-fresh sda3 from array!
[Thu May 4 23:58:14 2017] md: unbind<sda3>
[Thu May 4 23:58:14 2017] md: export_rdev(sda3)
[Thu May 4 23:58:14 2017] md/raid:md127: device sdc3 operational as raid disk 1
[Thu May 4 23:58:14 2017] md/raid:md127: device sdb3 operational as raid disk 3
[Thu May 4 23:58:14 2017] md/raid:md127: allocated 4280kB
[Thu May 4 23:58:14 2017] md/raid:md127: not enough operational devices (2/4 failed)
[Thu May 4 23:58:14 2017] RAID conf printout:
[Thu May 4 23:58:14 2017] --- level:5 rd:4 wd:2
[Thu May 4 23:58:14 2017] disk 1, o:1, dev:sdc3
[Thu May 4 23:58:14 2017] disk 3, o:1, dev:sdb3
[Thu May 4 23:58:14 2017] md/raid:md127: failed to run raid set.
[Thu May 4 23:58:14 2017] md: pers->run() failed ...
[Thu May 4 23:58:14 2017] md: md127 stopped.
[Thu May 4 23:58:14 2017] md: unbind<sdc3>
[Thu May 4 23:58:14 2017] md: export_rdev(sdc3)
[Thu May 4 23:58:14 2017] md: unbind<sdb3>
[Thu May 4 23:58:14 2017] md: export_rdev(sdb3)
[Thu May 4 23:58:14 2017] systemd[1]: Started udev Kernel Device Manager.
[Thu May 4 23:58:14 2017] md: md127 stopped.
[Thu May 4 23:58:14 2017] md: bind<sdb4>
[Thu May 4 23:58:14 2017] md: bind<sda4>
[Thu May 4 23:58:14 2017] md/raid1:md127: active with 2 out of 2 mirrors
[Thu May 4 23:58:14 2017] md127: detected capacity change from 0 to 2000253812736
[Thu May 4 23:58:14 2017] systemd[1]: Found device /dev/md1.
[Thu May 4 23:58:14 2017] systemd[1]: Activating swap md1...
[Thu May 4 23:58:14 2017] BTRFS: device label 5dbf20e2:test devid 2 transid 1018766 /dev/md127
[Thu May 4 23:58:14 2017] systemd[1]: Found device /dev/disk/by-label/5dbf20e2:test.
[Thu May 4 23:58:14 2017] Adding 523708k swap on /dev/md1. Priority:-1 extents:1 across:523708k
[Thu May 4 23:58:14 2017] systemd[1]: Activated swap md1.
[Thu May 4 23:58:14 2017] systemd[1]: Started Journal Service.
[Thu May 4 23:58:15 2017] systemd-journald[1009]: Received request to flush runtime journal from PID 1
[Thu May 4 23:58:15 2017] BTRFS: failed to read the system array on md127
[Thu May 4 23:58:15 2017] BTRFS: open_ctree failed
[Thu May 4 23:58:22 2017] IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready
[Thu May 4 23:58:23 2017] NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory
[Thu May 4 23:58:23 2017] NFSD: starting 90-second grace period (net c097fc40)
[Thu May 4 23:58:27 2017] mvneta d0070000.ethernet eth0: Link is Up - 1Gbps/Full - flow control off
[Thu May 4 23:58:27 2017] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready
[Thu May 4 23:59:21 2017] nfsd: last server has exited, flushing export cache
[Thu May 4 23:59:21 2017] NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory
[Thu May 4 23:59:21 2017] NFSD: starting 90-second grace period (net c097fc40)
```
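To pull the relevant md events out of a log bundle without reading every line, here is a minimal Python sketch that summarizes what happened to one array in dmesg.log. The regular expressions are written against the exact messages in the log above and are an assumption; other kernel versions may word them differently. `summarize_md` is a hypothetical helper name.

```python
import re

# Patterns copied from the md messages in the log above (assumption:
# other kernel versions may phrase them differently).
KICKED = re.compile(r"md: kicking non-fresh (\w+) from array")
OPERATIONAL = re.compile(r"md/raid:(md\d+): device (\w+) operational as raid disk (\d+)")
NOT_ENOUGH = re.compile(r"md/raid:(md\d+): not enough operational devices \((\d+)/(\d+) failed\)")


def summarize_md(lines, array="md127"):
    """Collect kicked members, operational members and the failure count.

    'kicking non-fresh' lines do not name the array, so kicked devices
    are collected regardless of which array they belonged to.
    """
    summary = {"kicked": [], "operational": {}, "failed": None}
    for line in lines:
        if m := KICKED.search(line):
            summary["kicked"].append(m.group(1))
        elif (m := OPERATIONAL.search(line)) and m.group(1) == array:
            # Map raid disk number -> partition name
            summary["operational"][int(m.group(3))] = m.group(2)
        elif (m := NOT_ENOUGH.search(line)) and m.group(1) == array:
            # (failed, total) as printed by the kernel
            summary["failed"] = (int(m.group(2)), int(m.group(3)))
    return summary
```

Usage, assuming dmesg.log from the log bundle is in the current directory: `with open("dmesg.log", errors="replace") as f: print(summarize_md(f))`. For the log above it would report sda3 as kicked, sdc3 and sdb3 as raid disks 1 and 3, and 2 of 4 members failed.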

So, the rebuild failed...

Does it look like I could try a btrfs restore at this point? Only 2 operational devices out of 4 seems not enough...

That must be why the slot 1 disk appears to be out of the array (black dot)...

Sigh...

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

@daelomin wrote:

[Thu May 4 23:58:14 2017] md/raid:md127: not enough operational devices (2/4 failed)

Your issue is not at the BTRFS volume level but at the RAID array level (lower layer).

You need to fix the RAID array.
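The "RAID conf printout" line in the log shows why: as I read it, `rd` is the number of member disks and `wd` the number currently working. A RAID 5 set can run degraded with one member missing but not two, so md refuses to start it and the BTRFS volume on top cannot be opened. A minimal Python sketch of that arithmetic; the helper names are hypothetical and only RAID 1, 5 and 6 are covered.

```python
import re

# Matches e.g. "--- level:5 rd:4 wd:2" from the RAID conf printout
CONF = re.compile(r"level:(\d+) rd:(\d+) wd:(\d+)")


def min_working(level, raid_disks):
    """Minimum number of working members needed to run the array."""
    if level == 5:
        return raid_disks - 1  # one parity block per stripe: tolerates 1 missing
    if level == 6:
        return raid_disks - 2  # two parity blocks: tolerates 2 missing
    if level == 1:
        return 1  # any single mirror holds a full copy
    raise ValueError(f"RAID level {level} is not handled in this sketch")


def can_start(conf_line):
    """Return True if the working count meets the minimum for the level."""
    m = CONF.search(conf_line)
    if not m:
        raise ValueError("no 'level:N rd:N wd:N' found")
    level, rd, wd = map(int, m.groups())
    return wd >= min_working(level, rd)
```

For this thread, `can_start("--- level:5 rd:4 wd:2")` is False: with two of four members gone, no amount of BTRFS-level repair can help until the RAID layer is fixed.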

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Just to clarify, ATA errors do not mean that a drive is failing. Technically, an ATA error occurs when the controller and the drive fail to communicate a string of data accurately. We would typically think of this as a drive beginning to fail, and that is the most common reason, BUT it can also have several other causes, including failing connectors or a failing controller. In my situation, I was getting ATA errors on three drives, including a brand new WD 4TB Red. When I moved all these drives to another NAS, the ATA errors went away and the drives appear to be performing as expected.
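One way to tell whether ATA errors follow the drive or stay with the bay/controller is to count them per libata port before and after moving drives around. A minimal Python sketch, assuming the usual libata prefixes in dmesg (`ataN:` or `ataN.MM:`) and a few typical error phrases; the exact wording varies between kernels, `ata_errors_by_port` is a hypothetical name, and mapping a port number to a physical bay is hardware-specific and not covered here.

```python
import re
from collections import Counter

# Assumption: libata lines look like "ata3.00: exception Emask ..." or
# "ata3: hard resetting link"; the phrase list is illustrative, not exhaustive.
ATA_ERR = re.compile(
    r"\bata(\d+)(?:\.\d+)?: .*(exception|failed command|hard resetting link)"
)


def ata_errors_by_port(lines):
    """Count error-looking libata lines per port number (the N in ataN)."""
    counts = Counter()
    for line in lines:
        m = ATA_ERR.search(line)
        if m:
            counts[int(m.group(1))] += 1
    return counts
```

If the counts stay on the same port after a drive is moved elsewhere, the connector or controller is a better suspect than the drive.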

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Hi again

I agree with your diagnosis, but... could you give me any pointers on how to rebuild a RAID array in XRAID? Any link to a typical walkthrough?

I can find some RAID 5 walkthroughs, but I'm not sure they apply...

Alternatively, a friend of mine thinks I can extract some drives, plug them into a SATA adapter, connect that to another PC and use recovery software to access the images that are readable. Do you think that would work?

(I refuse to give up just yet)

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

@Oversteer71 wrote:

Just to clarify, ATA errors do not mean that a drive is failing ...

Absolutely correct. Most SMART errors (notably reallocated or pending sectors) point squarely at the drive.

But ATA errors are different. They most often come from the drive, but they don't have to. In addition to the causes on your list, driver bugs can also provoke ATA errors (by issuing illegal commands).

@daelomin wrote:

... could you give me any pointers on how to rebuild a RAID array in XRAID?

...use a recovery software to access the images that are readable. Do you think that'd work?

(I refuse to give up just yet)

Frankly, if I had no backup and had this problem, I would use paid Netgear support (my.netgear.com). Recovery software on a PC might work; several people have reported success with ReclaiMe.

There are some pointers here on how to mount OS6 volumes with shell commands, but you really should understand the root cause of the problem, and not just try to force the array to mount.

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

Thanks for the info about ReclaiMe, I'll have a look.

Frankly, I won't pay for Netgear support at this point, as I've been going through my old backup (most photos from 2008 to 2014 are there) and I've got some from 2015 and 2016 on external drives.

It might be a case where I've lost 5 or 10% of my photos, but if recovery software allows me to regain part of that, that's all I'm asking for.

For the future, though, what kind of sync would you recommend to back up the Netgear to an external RAID on the same LAN? Rsync, or is there something graphical and easier?
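rsync is the usual answer for a NAS-to-NAS copy on the same LAN. To illustrate the idea behind its default behaviour (skip a file when its size and modification time already match, copy it otherwise), here is a minimal one-way mirror sketch in Python. It is not a replacement for rsync: it never deletes files on the destination, does no checksumming and has no network transport. `mirror` is a hypothetical name.

```python
import os
import shutil


def mirror(src, dst):
    """Copy new or changed files from src to dst (one-way, no deletions).

    A file counts as changed when its size or whole-second modification
    time differs, similar in spirit to rsync's default quick check.
    Returns the copied paths relative to dst.
    """
    copied = []
    for root, _dirs, files in os.walk(src):
        target_dir = os.path.join(dst, os.path.relpath(root, src))
        os.makedirs(target_dir, exist_ok=True)
        for name in files:
            s = os.path.join(root, name)
            d = os.path.join(target_dir, name)
            st = os.stat(s)
            if os.path.exists(d):
                dt = os.stat(d)
                if dt.st_size == st.st_size and int(dt.st_mtime) == int(st.st_mtime):
                    continue  # unchanged: skip
            shutil.copy2(s, d)  # copy2 also preserves the modification time
            copied.append(os.path.relpath(d, dst))
    return copied
```

Because `copy2` carries the modification time over, a second run finds matching size and mtime and copies nothing, which is what makes repeated nightly runs cheap.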

Re: Remove inactive volumes to use the disk. Disk #1,2,3,4.

FWIW, I use a ReadyNAS 104 to back up the main ReadyNAS 314.

Attached to the 104, I have a 5TB USB drive, which I also use for a biweekly backup of some of the backups done on the 104.

So the 314 rsyncs to the 104 nightly on multiple items, and every 3 days or so the stuff I need to keep on the 104 is backed up to the 5TB USB drive.

If a RAID issue happens on the 314, or something I need gets deleted, I can pull it from the 104.

If there's a RAID issue on the 104, I can pull from the USB drive.

If all 3 die at the same time, I am just gonna give up, toss everything away and live in a cave.