rndp6000 inactive volume data

Hello there

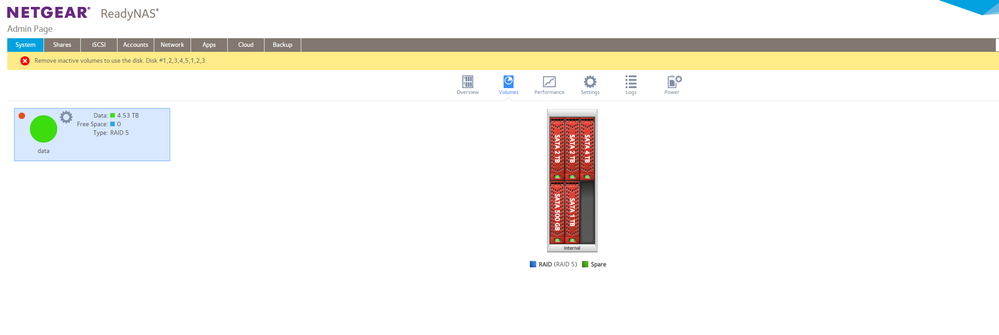

Today I suddenly lost access to files over the network, and the NAS was completely frozen. So I force-rebooted the system and opened the admin console. There was a message that the data volume is in read-only mode. One more reboot (from the console, which I know was stupid) turned it into an inactive volume.

Can I somehow reboot it in read-only mode again to save my files?

ReadyNAS OS 6.10.4

Re: rndp6000 inactive volume data

@Archdivan wrote:

Can I somehow reboot it in read-only mode again to save my files?

The RAID array has gotten out of sync. I think the best next step is to test the disks in a Windows PC using vendor tools (SeaTools for Seagate; the new WD Digital Dashboard software for Western Digital). Power down the NAS before removing the disks, and label them by slot. You can connect each disk via either SATA or a USB adapter/dock. Then run the extended test.

If you can't connect the disks to a PC, then you could try booting up in tech support mode, and then run a similar extended test with smartctl.
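For the tech support mode route, the extended test could be started and read back roughly as follows. This is a minimal sketch rather than a ReadyNAS-specific procedure: it assumes `smartctl` is on the path and that the disks show up as `/dev/sda` through `/dev/sde`. The helper names `start_long_tests` and `show_test_results` are made up for illustration.

```shell
#!/bin/sh
# Sketch only. Device names are assumptions; check yours first
# (for example with: cat /proc/partitions).

# Start the extended (long) SMART self-test on each disk given as an argument.
# The test runs inside the drive, so each command returns immediately.
start_long_tests() {
    for disk in "$@"; do
        smartctl -t long "$disk"
    done
}

# Print overall health, the self-test log and the attribute table for each disk.
show_test_results() {
    for disk in "$@"; do
        smartctl -H "$disk"
        smartctl -l selftest "$disk"
        smartctl -A "$disk"
    done
}

# Example usage (commented out so that nothing runs by accident):
# start_long_tests /dev/sda /dev/sdb /dev/sdc /dev/sdd /dev/sde
# ...wait for the estimated duration that smartctl prints, then:
# show_test_results /dev/sda /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

In the attribute table, non-zero values for `Reallocated_Sector_Ct`, `Current_Pending_Sector` or `Offline_Uncorrectable` are usually the first things worth a closer look, though a clean result does not prove a disk is healthy.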

There are ways to force the volume to mount, but it is difficult to describe in the forum, as it depends on exactly what failed. Unfortunately tech support isn't an option, since you have a converted NAS.

Re: rndp6000 inactive volume data

Stephen, many thanks for replying!

So far I have tried two ways to check the disks: the smartctl command via SSH and the disk check from the boot menu. SMART looks normal, with no obvious critical errors. If necessary, I can send a report. I also found some errors in dmesg.log:

[Thu May 6 03:47:20 2021] RAID conf printout:

[Thu May 6 03:47:20 2021] --- level:5 rd:5 wd:5

[Thu May 6 03:47:20 2021] disk 0, o:1, dev:sda3

[Thu May 6 03:47:20 2021] disk 1, o:1, dev:sdb3

[Thu May 6 03:47:20 2021] disk 2, o:1, dev:sdc3

[Thu May 6 03:47:20 2021] disk 3, o:1, dev:sdd3

[Thu May 6 03:47:20 2021] disk 4, o:1, dev:sde3

[Thu May 6 03:47:20 2021] md126: detected capacity change from 0 to 1980566863872

[Thu May 6 03:47:20 2021] BTRFS: device label 33ea3ec3:data devid 1 transid 1021063 /dev/md126

[Thu May 6 03:47:21 2021] BTRFS info (device md126): has skinny extents

[Thu May 6 03:47:39 2021] BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

[Thu May 6 03:47:39 2021] BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

[Thu May 6 03:47:41 2021] BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

[Thu May 6 03:47:41 2021] BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

[Thu May 6 03:47:41 2021] BTRFS warning (device md126): Skipping commit of aborted transaction.

[Thu May 6 03:47:41 2021] BTRFS: error (device md126) in cleanup_transaction:1864: errno=-5 IO failure

[Thu May 6 03:47:41 2021] BTRFS info (device md126): delayed_refs has NO entry

[Thu May 6 03:47:41 2021] BTRFS: error (device md126) in btrfs_replay_log:2436: errno=-5 IO failure (Failed to recover log tree)

[Thu May 6 03:47:41 2021] BTRFS error (device md126): cleaner transaction attach returned -30

[Thu May 6 03:47:41 2021] BTRFS error (device md126): open_ctree failed

[Thu May 6 03:47:42 2021] NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory

[Thu May 6 03:47:42 2021] NFSD: starting 90-second grace period (net ffffffff88d70240)

[Thu May 6 03:48:01 2021] nfsd: last server has exited, flushing export cache

[Thu May 6 03:48:01 2021] NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory

[Thu May 6 03:48:01 2021] NFSD: starting 90-second grace period (net ffffffff88d70240)

[Thu May 6 05:38:06 2021] RPC: fragment too large: 50331695

[Thu May 6 08:13:35 2021] RPC: fragment too large: 50331695

It looks like corruption in the filesystem.

Some googling tells me that it can be repaired with btrfs, but I don't understand exactly how to do this.
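The log excerpt above can also be summarised mechanically. The sketch below is an illustration only; it assumes the log is piped in on standard input, and the function name `summarize_dmesg` is hypothetical. In the md driver's "RAID conf printout", `rd` is the number of disks in the array and `wd` is the number still working, so `rd:5 wd:5` means the array is not degraded.

```shell
#!/bin/sh
# Sketch only: summarise md and BTRFS lines from a saved dmesg.log read on stdin.
summarize_dmesg() {
    awk '
        # md prints "--- level:5 rd:5 wd:5": rd = disks in the array, wd = working disks
        match($0, /rd:[0-9]+ wd:[0-9]+/) {
            split(substr($0, RSTART, RLENGTH), f, /[: ]/)
            rd = f[2]; wd = f[4]
        }
        /BTRFS critical/    { critical++ }
        /open_ctree failed/ { mount_failed = 1 }
        END {
            if (rd != "")
                printf "raid: %d of %d disks working%s\n", wd, rd,
                       (wd + 0 < rd + 0 ? " (degraded)" : "")
            printf "btrfs critical lines: %d\n", critical
            if (mount_failed) print "btrfs mount failed (open_ctree)"
        }
    '
}

# Example usage:
# summarize_dmesg < dmesg.log
```

Run against the excerpt above, this should report 5 of 5 disks working, four critical lines and a failed mount, which would suggest that the md array itself assembled and that the damage is at the filesystem level.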

Re: rndp6000 inactive volume data

@Archdivan wrote:

[Thu May 6 03:47:41 2021] BTRFS: error (device md126) in cleanup_transaction:1864: errno=-5 IO failure

[Thu May 6 03:47:41 2021] BTRFS: error (device md126) in btrfs_replay_log:2436: errno=-5 IO failure (Failed to recover log tree)

It looks like corruption in filesystem.

It does look like corruption, but I suggest first looking in system.log and kernel.log for more info on these two particular errors. They look disk-related, and those two logs might tell you which disk caused them. Once you know the disk, you could attempt to boot the system up without it, and see if the volume mounts.
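One way to search those logs for a misbehaving disk is a simple pattern filter. This is a rough sketch: the pattern list is an assumption based on messages the Linux kernel commonly prints for disk trouble, it is not exhaustive, and the function names are made up for illustration.

```shell
#!/bin/sh
# Sketch only: print kernel log lines that commonly accompany a failing disk.
# Reads the files given as arguments, or standard input if none are given.
find_disk_errors() {
    grep -h -E -i 'I/O error, dev sd[a-z]+|ata[0-9]+(\.[0-9]+)?: (failed command|error|exception)|medium error|md/raid:.*(read error|Disk failure)' "$@"
}

# Count matching lines per device name (sda, sdb, ...) to see whether
# one disk stands out from the rest.
count_errors_per_disk() {
    find_disk_errors "$@" | grep -E -o 'dev sd[a-z]+' | sort | uniq -c
}

# Example usage:
# find_disk_errors kernel.log system.log
# count_errors_per_disk kernel.log
```

If nothing matches, that does not rule out a disk problem, but it would make filesystem-level corruption the more likely explanation.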

BTW, if it does mount, then the next step would be to off-load data (at least the most critical stuff).

@Archdivan wrote:

Some googling tells me that it can be repaired with btrfs, but I don't understand exactly how to do this.

Though I've done some reading here, it's not something I've needed to do myself. @rn_enthusiast has sometimes offered to review logs and offer tailored advice on this. So maybe wait and see if he will chime in.

Re: rndp6000 inactive volume data

It looks like a failure to read the filesystem journal.

[Thu May 6 03:47:41 2021] BTRFS: error (device md126) in btrfs_replay_log:2436: errno=-5 IO failure (Failed to recover log tree)

[Thu May 6 03:47:41 2021] BTRFS error (device md126): cleaner transaction attach returned -30

[Thu May 6 03:47:41 2021] BTRFS error (device md126): open_ctree failed

Given the symptoms of the NAS freezing then seeing the journal (tree-log) preventing the mount on next boot, I would expect that a btrfs zero-log will likely fix it.

btrfs rescue zero-log /dev/md126

Then reboot the NAS
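Because zero-log writes to the filesystem and cannot be undone, a small guard around the command may be worthwhile. The sketch below is only an illustration: `/dev/md126` is the device from the log above, while the function name `zero_log_if_unmounted` and its optional mounts-file argument are made up here.

```shell
#!/bin/sh
# Sketch only. Clears the BTRFS log tree on a device, but refuses to do so
# if the device is currently mounted.
zero_log_if_unmounted() {
    dev="$1"
    mounts="${2:-/proc/mounts}"   # second argument exists mainly for testing
    if grep -q "^$dev " "$mounts"; then
        echo "$dev is mounted; unmount it before running zero-log" >&2
        return 1
    fi
    btrfs rescue zero-log "$dev"
}

# Example usage (commented out: this modifies the filesystem):
# zero_log_if_unmounted /dev/md126
```

Clearing the log tree discards whatever recent writes were recorded there but not yet committed, so a small amount of very recent data may be lost.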

But the thing is, this is only an estimate of what the problem is. It LOOKS like a bad journal more than anything, but it could potentially be other things too. Clearing the journal only helps in cases where the journal is actually the issue :). It does look like the journal is the problem here, but there are people out there who know a lot more about BTRFS than me. If you care about the data or don't have a backup, you might seek advice from the BTRFS community first. You can use the BTRFS mailing list:

https://btrfs.wiki.kernel.org/index.php/Btrfs_mailing_list

They would expect you to know at least some Linux, so please look at the section on which information to collect for them (in the link above).

If you just want to "try something", then zero-log would probably be the way to go, though that would be at your own discretion.

Cheers

Re: rndp6000 inactive volume data

@rn_enthusiast wrote:

Given the symptoms of the NAS freezing then seeing the journal (tree-log) preventing the mount on next boot, I would expect that a btrfs zero-log will likely fix it.

I am wondering if the I/O error was simply due to a bad pointer in the journal, or if there was a disk error when reading the data structure.

Re: rndp6000 inactive volume data

Exactly, I think it is a bad pointer in the tree log. Though you are right, in typical circumstances that can be associated with bad hardware. It would just be some coincidence to have bad HW + a bad journal 🙂 My feeling is that this is a tree log issue. I had a look around the web as well, and I see others in the exact same situation, and it was journal problems for them too. For example here:

It doesn't seem uncommon to experience the bad/corrupt leaf error at the same time.

Re: rndp6000 inactive volume data

Wow. It works. Now I have a mounted read-only volume. At least I can make a backup of my data before the next experiments. I'm not a Linux user, and your advice really saved me. So as the next step, should I find the HDD that is causing the errors, remove it, and completely rebuild the volume?

kernel.log still shows the "corrupt leaf" error on md126:

May 06 23:09:57 ArchiBox kernel: RAID conf printout:

May 06 23:09:57 ArchiBox kernel: --- level:5 rd:5 wd:5

May 06 23:09:57 ArchiBox kernel: disk 0, o:1, dev:sda3

May 06 23:09:57 ArchiBox kernel: disk 1, o:1, dev:sdb3

May 06 23:09:57 ArchiBox kernel: disk 2, o:1, dev:sdc3

May 06 23:09:57 ArchiBox kernel: disk 3, o:1, dev:sdd3

May 06 23:09:57 ArchiBox kernel: disk 4, o:1, dev:sde3

May 06 23:09:57 ArchiBox kernel: md126: detected capacity change from 0 to 1980566863872

May 06 23:09:57 ArchiBox kernel: BTRFS: device label 33ea3ec3:data devid 1 transid 1021064 /dev/md126

May 06 23:09:57 ArchiBox kernel: BTRFS info (device md126): has skinny extents

May 06 23:10:14 ArchiBox kernel: BTRFS error (device md126): qgroup generation mismatch, marked as inconsistent

May 06 23:10:14 ArchiBox kernel: BTRFS info (device md126): checking UUID tree

May 06 23:10:15 ArchiBox kernel: NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory

May 06 23:10:15 ArchiBox kernel: NFSD: starting 90-second grace period (net ffffffff88d70240)

May 06 23:10:18 ArchiBox kernel: BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

May 06 23:10:19 ArchiBox kernel: BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

May 06 23:10:39 ArchiBox kernel: nfsd: last server has exited, flushing export cache

May 06 23:10:39 ArchiBox kernel: NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory

May 06 23:10:39 ArchiBox kernel: NFSD: starting 90-second grace period (net ffffffff88d70240)

May 06 23:11:07 ArchiBox kernel: BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

May 06 23:11:07 ArchiBox kernel: BTRFS critical (device md126): corrupt leaf, slot offset bad: block=2977215774720, root=1, slot=131

May 06 23:11:07 ArchiBox kernel: BTRFS: error (device md126) in btrfs_drop_snapshot:9412: errno=-5 IO failure

May 06 23:11:07 ArchiBox kernel: BTRFS info (device md126): forced readonly

May 06 23:11:07 ArchiBox kernel: BTRFS info (device md126): delayed_refs has NO entry

In system.log I found a lot of errors like these:

May 07 00:00:09 ArchiBox dbus[2865]: [system] Activating service name='org.opensuse.Snapper' (using servicehelper)

May 07 00:00:09 ArchiBox dbus[2865]: [system] Successfully activated service 'org.opensuse.Snapper'

May 07 00:00:09 ArchiBox snapperd[11094]: loading 305 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 408 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 465 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 528 failed

May 07 00:00:09 ArchiBox snapperd[11094]: THROW: mkdir failed errno:30 (Read-only file system)

May 07 00:00:09 ArchiBox snapperd[11094]: CAUGHT: mkdir failed errno:30 (Read-only file system)

May 07 00:00:09 ArchiBox snapperd[11094]: loading 379 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 482 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 601 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 602 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 603 failed

May 07 00:00:09 ArchiBox snapperd[11094]: loading 604 failed

May 07 00:10:56 ArchiBox snapperd[12595]: delete snapshot failed, ioctl(BTRFS_IOC_SNAP_DESTROY) failed, errno:30 (Read-only file system)

May 07 00:10:56 ArchiBox snapperd[12595]: THROW: delete snapshot failed

May 07 00:10:56 ArchiBox snapperd[12595]: CAUGHT: delete snapshot failed

May 07 00:10:57 ArchiBox snapperd[12595]: delete snapshot failed, ioctl(BTRFS_IOC_SNAP_DESTROY) failed, errno:30 (Read-only file system)

May 07 00:10:57 ArchiBox snapperd[12595]: THROW: delete snapshot failed

May 07 00:10:57 ArchiBox snapperd[12595]: CAUGHT: delete snapshot failed

May 07 00:10:58 ArchiBox snapperd[12595]: delete snapshot failed, ioctl(BTRFS_IOC_SNAP_DESTROY) failed, errno:30 (Read-only file system)

May 07 00:10:58 ArchiBox snapperd[12595]: THROW: delete snapshot failed

May 07 00:10:58 ArchiBox snapperd[12595]: CAUGHT: delete snapshot failed

Something is wrong with the snapshots. Maybe I should delete them all.

Anyway, many thanks to you!

Re: rndp6000 inactive volume data

@Archdivan wrote:

Wow. It works. Now I have a mounted read-only volume. At least I can make a backup of my data before the next experiments.

So as the next step, should I find the HDD that is causing the errors, remove it, and completely rebuild the volume?

That makes sense to me. Though the disks could be fine, they should be tested. Rebuilding the volume (not just resyncing) is the safest way to make sure all these errors are cleaned up.

After the backup, I suggest running the disk test from the volume settings wheel. That will run the extended SMART test on all drives in the volume - that won't pick up all failures (I have had bad drives pass that test), but it still is pretty good.

Then you could try either destroying/recreating the volume, or doing a factory default (rebuilding the NAS from scratch). They are about the same amount of work (either way you need to reinstall any apps, recreate all the shares, and restore all data from backup), so I'd go with the factory default myself.

Another path is to connect the disks to a Windows PC after the disk test (either with SATA or a USB adapter/dock). Then run the write zeros / erase disk test in the vendor diagnostics (SeaTools for Seagate, the Digital Dashboard for Western Digital). That will pick up any bad sectors that the non-destructive SMART test misses. After that completes, you put the disks back in the NAS and power up. The NAS will do a fresh factory install, and as above, you'd reconfigure the NAS and restore data from backup.

Re: rndp6000 inactive volume data

If your set-up is complicated and you don't want to have to re-create it from scratch, you can save and restore the configuration. Note that you should re-install any apps before you restore the configuration, because there may be configuration files from the backup that were modified by apps that will create problems if the app isn't there.

Re: rndp6000 inactive volume data

Hi @Archdivan

Glad to hear the NAS is back up and running. There might be deeper filesystem problems, which could be the reason you are seeing some of the errors you mention. Good thinking, booting it into read-only mode. The safest option is, as @StephenB said, to do a factory reset and restore from backups. That will ensure a clean filesystem on the NAS.

Just make sure you got it all backed up first 🙂
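A quick way to check that nothing was skipped is to compare file lists between the volume and the backup copy. This is a minimal sketch under stated assumptions: the function name `missing_from_backup` and the example paths `/data` and `/mnt/backup` are hypothetical, and the check compares relative paths only, not file contents.

```shell
#!/bin/sh
# Sketch only: list files that exist under the source tree but are
# missing from the backup tree. Compares relative paths, not contents.
missing_from_backup() {
    src="$1"
    dst="$2"
    a="$(mktemp)"
    b="$(mktemp)"
    (cd "$src" && find . -type f | sort) > "$a"
    (cd "$dst" && find . -type f | sort) > "$b"
    comm -23 "$a" "$b"    # keep lines that appear only in the source list
    rm -f "$a" "$b"
}

# Example usage (both paths are assumptions):
# missing_from_backup /data /mnt/backup
```

An empty result means every file path on the source also exists in the backup. It does not confirm that the contents match, so spot-checking a few important files is still sensible.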

Cheers!