Ready NAS Ultra 6 faulty - drive slot faulty and unable to create new RAID array

Netgear Ready NAS Ultra 6 X Raid 2

Firmware Raidiator 4.2.31 Date 11 January 2023.

Apologies for the length of this enquiry, but I hope the detail helps you to assist.

I had disks 1 2 3 4 slots each with a WD 3TB NAS HDD.

Lasted about 5-6 years until the present.

Disk #2 failed, with messages that the C: volume was unprotected and that data would be lost if another drive failed. Disk #2 was reported as DEAD.

Bought new 3TB WD disk. I tested it on W10 desktop and it worked OK.

Placed it in Disk #2 slot.

Re-booted with new 3TB WD - not recognised - no disk #2 - DEAD in Log screen.

Removed the new WD 3TB from disk #2 slot. Tested it in W10 again and it was good.

Powered down (2 presses of the power button). Moved the 3TB WD drive from the #2 slot into the Disk #5 slot. The NAS screen messages said that #5 would be tested, that the #5 disk had passed, and that it would be used in the rebuild. From memory, this sequence happened 3 times. I had previously tried this process by just checking the 3TB WD drive in W10, then plugging it directly into the #2 disk slot. I had similar screen messages. I had left the NAS for 30 hours on this occasion, but still had the C: unprotected message.

Reloaded the latest update from Netgear site, which was already installed and it made no difference to the NAS status. Received emails OK.

I tried the Blinking LED test in the RAID array, only D#1 - #4 shown. D#2 was dead, not testable. D#5 was not identified even though it was installed and "Data volume will be rebuilt with disk 5" message was being displayed in Message Log.

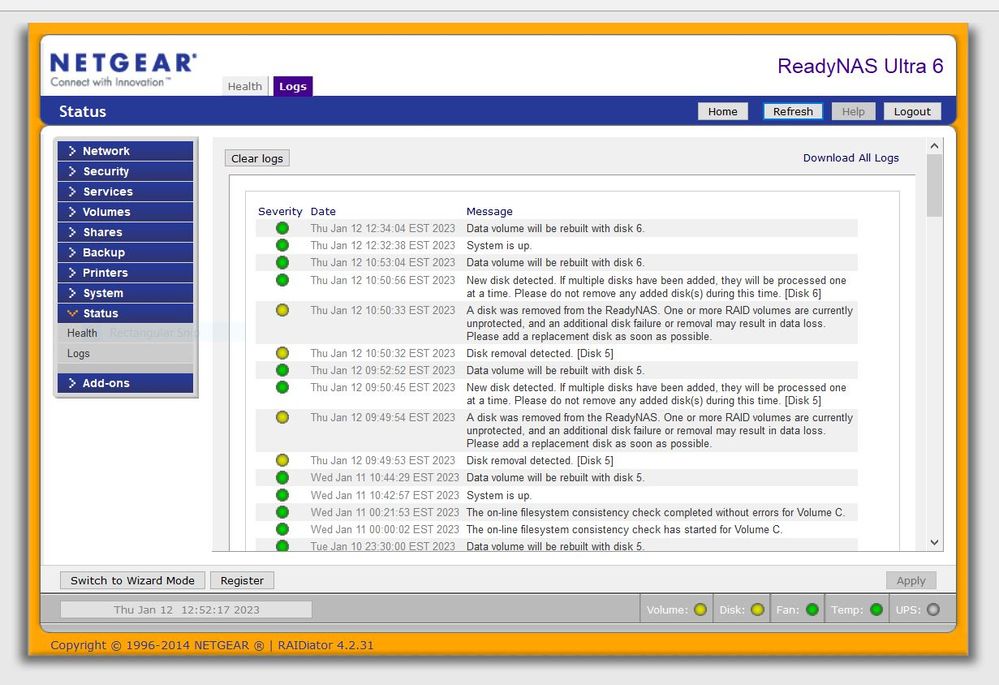

NAS Message LOG

Wed Jan 11 10:44:29 EST 2023 Data volume will be rebuilt with disk 5.

Wed Jan 11 10:42:57 EST 2023 System is up.

Wed Jan 11 00:21:53 EST 2023 The on-line file system consistency check completed without errors for Volume C.

Wed Jan 11 00:00:02 EST 2023 The on-line file system consistency check has started for Volume C.

Tue Jan 10 23:30:00 EST 2023 Data volume will be rebuilt with disk 5.

Tue Jan 10 23:28:23 EST 2023 System is up.

Tue Jan 10 15:26:31 EST 2023 Blinking disk lcd 3. (me testing)

Tue Jan 10 15:26:25 EST 2023 Blinking disk lcd 1. (me testing)

Tue Jan 10 15:25:56 EST 2023 Blinking disk lcd 1. (me testing)

Tue Jan 10 15:25:48 EST 2023 Blinking disk lcd 3. (me testing)

Tue Jan 10 15:25:15 EST 2023 Blinking disk lcd 4. (me testing)

Tue Jan 10 15:19:40 EST 2023 Backup job(s) successfully deleted. ( my action)

Tue Jan 10 09:57:06 EST 2023 Alert test message has been sent.

Mon Jan 9 21:49:05 EST 2023 Data volume will be rebuilt with disk 5.

Mon Jan 9 21:46:55 EST 2023 New disk detected. If multiple disks have been added, they will be processed one at a time. Please do not remove any added disk(s) during this time. [Disk 5]

Mon Jan 9 21:32:26 EST 2023 A disk was removed from the ReadyNAS. One or more RAID volumes are currently unprotected, and an additional disk failure or removal may result in data loss. Please add a replacement disk as soon as possible.
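
The pattern in the log above can be checked mechanically. The following is a minimal Python sketch, not anything the NAS provides: it takes a subset of the lines quoted above, counts how often the rebuild was announced, and measures how long after each "System is up." message the announcement appeared. The names `parse`, `rebuild_announcements` and `boot_to_rebuild_delays` are made up for illustration.

```python
from datetime import datetime

# A subset of the message log quoted above (newest first, as the NAS lists it).
LOG = """\
Wed Jan 11 10:44:29 EST 2023 Data volume will be rebuilt with disk 5.
Wed Jan 11 10:42:57 EST 2023 System is up.
Wed Jan 11 00:21:53 EST 2023 The on-line file system consistency check completed without errors for Volume C.
Tue Jan 10 23:30:00 EST 2023 Data volume will be rebuilt with disk 5.
Tue Jan 10 23:28:23 EST 2023 System is up.
Mon Jan 9 21:49:05 EST 2023 Data volume will be rebuilt with disk 5.
"""

REBUILD = "Data volume will be rebuilt"


def parse(log_text):
    """Return (timestamp, message) pairs sorted oldest first."""
    entries = []
    for line in log_text.strip().splitlines():
        # Fields: weekday, month, day, time, timezone, year, then the message.
        _, month, day, clock, _, year, message = line.split(maxsplit=6)
        stamp = datetime.strptime(f"{month} {day} {clock} {year}", "%b %d %H:%M:%S %Y")
        entries.append((stamp, message))
    return sorted(entries)


def rebuild_announcements(entries):
    """All entries in which the NAS says it will rebuild the volume."""
    return [(stamp, msg) for stamp, msg in entries if msg.startswith(REBUILD)]


def boot_to_rebuild_delays(entries):
    """Seconds between each 'System is up.' and the next rebuild announcement."""
    delays, last_boot = [], None
    for stamp, msg in entries:
        if msg == "System is up.":
            last_boot = stamp
        elif msg.startswith(REBUILD) and last_boot is not None:
            delays.append((stamp - last_boot).total_seconds())
            last_boot = None
    return delays


entries = parse(LOG)
print(len(rebuild_announcements(entries)), "rebuild announcements")
print(boot_to_rebuild_delays(entries), "seconds from boot to announcement")
```

If the rebuild is announced again after every boot, as it is here, that suggests the previous rebuild never actually ran to completion.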

LOG Health Status (date 11 January 2023)

Disk 1 WDC WD30EFRX-68EUZN0 2794 GB , 34 C / 93 F , Write-cache ON OK

Disk 2 WDC WD30EFRX-68EUZN0 2794 GB (slot is vacant) Dead

Disk 3 WDC WD30EFRX-68EUZN0 2794 GB , 33 C / 91 F , Write-cache ON OK

Disk 4 WDC WD30EFRX-68EUZN0 2794 GB , 35 C / 95 F , Write-cache ON OK

Disk 5 WDC WD30EFZX-68AWUN0 2794 GB , 35 C / 95 F , Write-cache ON OK

Fan SYS2 831 RPM OK

Temp CPU 50 C / 122 F [Normal 0-65 C / 32-149 F] OK

Temp SYS 38 C / 100 F [Normal 0-60 C / 32-140 F] OK

So, has the firmware/software cracked up internally in the NAS, or is disk slot 2 now faulty and unusable, e.g. a wiring/solder/component failure on the PCBs?

While the Disk#2 slot is "dead" with no HDD in the socket, can the NAS operating system software not progress and introduce D#5 into the RAID array?

Have I now got an external HDD with no backup?

Any ideas/suggestions would be helpful

Thank you in advance, Len

Re: Ready NAS Ultra 6 faulty - drive slot faulty and unable to create new RAID array

It does sound like the motherboard or SATA backplane may have an issue making slot 2 unusable. Everything you posted shows the volume should re-build using the drive in slot 5 as the replacement for the one in slot 2. But it's not doing so? If it isn't, try removing and re-installing the drive with the unit powered, ensuring you get a message in the log that the drive was removed before you re-insert. If that doesn't work, then try a re-boot.

Re: Ready NAS Ultra 6 faulty - drive slot faulty and unable to create new RAID array

Hello Sandshark,

Thank you for your assistance with my NAS issue.

I tried your suggestion in both Disk5 and later in Disk 6 slots.

I really hoped slot #6 would be the answer to my problem. I don't think the NAS is able to re-establish the RAID configuration while slot #2 is reported dead, with or without a HDD installed.

I received similar reactions when relocating the HDD into either the 5 or 6 slot, as per the log messages.

I found a post wherein a member suggested taking out all the HDDs and labelling them, then placing the new HDD in slot 1 to see if the operating system sets up a single disk. If it does, turn it off and then move the disk from slot #1 to #2.

This exercise could prove whether slot 2 is really faulty. Sounds like an approach?

I'm a bit new on this action - will the NAS keep the existing RAID configuration and various setting in the various folders system,volumes,security etc. if I do the disk 1 to 2 swapping as suggested?

I don't want to have existing data saved on the NAS lost forever at this point in time.

I have been migrating data for the last week or so and am doing the last data checks to make certain I have everything correctly identified and catalogued.

Cheers

diynas.

Re: Ready NAS Ultra 6 faulty - drive slot faulty and unable to create new RAID array

@diynas wrote:

I'm a bit new on this action - will the NAS keep the existing RAID configuration and various setting in the various folders system,volumes,security etc. if I do the disk 1 to 2 swapping as suggested?

All the settings are on the disks.

Re: Ready NAS Ultra 6 faulty - drive slot faulty and unable to create new RAID array

Thank you StephenB,

Given what you said about folder settings being retained on the HDDs in the RAID array, then if I try a new drive in slot#1 it will get written to by the operating system. When inserted into slot#2 it should then be as though it was in slot #1 ?

The NAS should operate as normal if the #2 slot is not dead? The Health folder logs should let me know what is happening.

Given your suggestion: the Health Log still thinks a 3TB HDD is installed in slot #2. The slot is vacant, so I guess the NAS is stuck thinking there is a faulty HDD in slot #2. This is probably stopping the new HDD from being integrated into the array.

LOG Health Status (date 11 January 2023)

Disk 1 WDC WD30EFRX-68EUZN0 2794 GB , 34 C / 93 F , Write-cache ON OK

Disk 2 WDC WD30EFRX-68EUZN0 2794 GB (slot is vacant) Dead

Disk 3 WDC WD30EFRX-68EUZN0 2794 GB , 33 C / 91 F , Write-cache ON OK

Disk 4 WDC WD30EFRX-68EUZN0 2794 GB , 35 C / 95 F , Write-cache ON OK

Disk 5 WDC WD30EFZX-68AWUN0 2794 GB , 35 C / 95 F , Write-cache ON OK

Fan SYS2 831 RPM OK

Temp CPU 50 C / 122 F [Normal 0-65 C / 32-149 F] OK

Temp SYS 38 C / 100 F [Normal 0-60 C / 32-140 F] OK

When I want to return to my present 4 disk array with the dud slot #2, I place the original HDDs in their respective slots and cold boot. All should be as normal, that is the present situation.

Do you see any way to remove the phantom 3TB HDD from the RAID "memory" for slot #2 ?

If that is how you see it, I might do it tomorrow and do more data comparison/transferring in the meantime.

Thanks for the help

Cheers

diynas

Re: Ready NAS Ultra 6 faulty - drive slot faulty and unable to create new RAID array

Yes, your approach is sound. Use the new drive by itself to test the unit. You'll only have to create a volume on it in Slot 1. As you move it through the slots, it should boot as-is. When you do finally go back to trying to re-insert the new drive into your existing array, it would be best to zero it on a PC first (just removing the partitions is usually enough).

I think you'll find at least all but bay 2 are OK, since the drive was detected in them. Bay 2 may be as well, and your test will determine that.

Since it sees drive 2 as dead and says it's going to re-build with the drive in another slot, I don't think the phantom drive is the problem. But do you have SSH enabled? While I'm not sure of the commands needed with OS4.2.x to delete the phantom drive and/or force a re-build, you'll need SSH to do it since re-booting isn't helping.
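
For what it's worth, if SSH does get enabled, a first read-only check would be the state of the arrays. This is only a sketch under assumptions: it assumes the firmware uses Linux software RAID (md), which reports array state in `/proc/mdstat`, and the `SAMPLE` text below is hypothetical, not taken from this NAS. The helper name `degraded_arrays` is made up for illustration. It changes nothing on the unit; it only interprets text you would paste from `cat /proc/mdstat`.

```python
import re

# Hypothetical /proc/mdstat text for illustration only (not from this NAS).
SAMPLE = """\
Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]
md2 : active raid5 sda3[0] sdd3[3] sdc3[2]
      8776582656 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/3] [U_UU]

md0 : active raid1 sda1[0] sdd1[3] sdc1[2]
      4193268 blocks super 1.2 [3/3] [UUU]

unused devices: <none>
"""


def degraded_arrays(mdstat_text):
    """Map array name -> member map (e.g. 'U_UU') for arrays missing a member."""
    result = {}
    current = None
    for line in mdstat_text.splitlines():
        header = re.match(r"^(md\d+)\s*:", line)
        if header:
            current = header.group(1)
            continue
        # "[4/3] [U_UU]" means 4 members expected, 3 active; "_" marks the gap.
        status = re.search(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]", line)
        if status and current:
            expected, active = int(status.group(1)), int(status.group(2))
            if active < expected:
                result[current] = status.group(3)
    return result


print(degraded_arrays(SAMPLE))
```

An array that stays degraded with no recovery line in the output would be consistent with the rebuild being announced but never starting.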

Is it possible that the new drive is just a bit smaller than the others?
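
To illustrate why the size question matters even though the health log shows 2794 GB for every drive, here is a small Python sketch. The byte counts are illustrative assumptions, not readings from these drives, and treating the displayed figure as whole binary gigabytes is also an assumption. The rule in `large_enough` (new drive at least as big as the smallest existing member) is a simplification.

```python
GIB = 1024 ** 3

# Illustrative byte counts only; read the real values from each drive on a PC.
EXISTING_MEMBERS = [3_000_592_982_016] * 3        # assumed size of the old drives
NEW_DRIVE_SLIGHTLY_SMALLER = 3_000_592_982_016 - 1024 ** 2  # 1 MiB short


def displayed_gb(size_bytes):
    """Size as a whole number of binary gigabytes, like the '2794 GB' figure."""
    return size_bytes // GIB


def large_enough(new_bytes, member_bytes):
    """Simplified rule: a replacement must not be smaller than the smallest member."""
    return new_bytes >= min(member_bytes)


print(displayed_gb(EXISTING_MEMBERS[0]), displayed_gb(NEW_DRIVE_SLIGHTLY_SMALLER))
print(large_enough(NEW_DRIVE_SLIGHTLY_SMALLER, EXISTING_MEMBERS))
```

In this example both drives would display the same rounded figure while the new one is still too small, so comparing the exact byte or sector counts on a PC is the only reliable check.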

Re: Ready NAS Ultra 6 faulty - drive slot faulty and unable to create new RAID array

The story so far. It's an epistle - sorry about that.

16 January: Thanks to Sandshark's advice I bit the bullet. (I don't have SSH nor the time to learn it.) I have rescued the original NAS data onto another HDD so it is safe for a while, I hope. I have kept the three original disks safely out of the way during this exercise. I know I can fall back on them if the new RAID setup dies - particularly disk slot #2.

I dismantled the NAS: covers, fans etc. Gave it a good blow out. The fans spun freely, as confirmed by the log messages. I did test the case fan, and the alarm came up when no fan was connected to the MoBo. Checked the backplane where the SATA drives plug in with my close-up magnifier. Since the problem was around SATA slot #2, either mechanical/component or data related, I re-soldered the backplane PCB pins of the multi-pin SATA data connector. The quality of the soldering looked OK, but I reheated it and added new solder to make sure. The 12V/5V Molex plug was checked for loose wires etc. - OK. I reassembled the case and tested it without any disks present and it went OK. No internal alarms generated. That covered the mechanical issues.

I then used single disk testing, a good HDD and the original faulty Disk #2.

The issue was that the good and suspect disks both passed the booting, disk testing, up to the 10 minute grace period in all 6 slots. I now believed that I had a software glitch wherein the three good disks which resided in slots 1,3,4 were always good and Slot #2 retained the faulty HDD identity even when the slot was empty. (Health Logs showed this condition). As I was advised by Sandshark, the data relating to the HDD performance is stored in the RAID disks not the hardware OS. I guess it uses unique disk information Brand, Ser No. etc. to keep track of hardware changes etc.

I have two similar drives, a new 3TB WD (400 hours powered and spinning) and the now "good" original disk #2 (47,000 hours powered, but not running time, installed about March 2014). Before I started the single drive tests, I retested the original #2 HDD again in W10 - and it passed the formatting and system file tests. (It had passed the W10 test on previous occasions, but had failed once in my reliable HDD caddy, so it was always a little 'suspect'. I have not used the caddy again in this current exercise.) I realise the 47K hours is high, about 8k hours per year. Usage was jpg/raw files, documents etc. I reflected back on the NAS management hours (maybe 2,500) and our usage, estimated at 1,000, for a total of maybe 3,500 hours of spinning time in 8 years. I am becoming optimistic even though disks 1, 3 and 4 all have about 47K hours of powered life. During backing up, the NAS ran unprotected for about 48 hours in blocks of 12 hours or longer. If the drives were unstable, wouldn't they have given problems? Temperatures were usually 38 °C per drive during the backing up.
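
As a quick sanity check on those hour figures, here is a small Python sketch using only the numbers quoted above (47,000 powered hours, and the 2,500 plus 1,000 hours of estimated spinning time). The function names are made up for illustration, and whether the drives really spun down when idle is an assumption taken from the estimate itself.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year


def continuous_years(power_on_hours):
    """How many years of non-stop power the hour count corresponds to."""
    return power_on_hours / HOURS_PER_YEAR


def spinning_fraction(spinning_hours, power_on_hours):
    """Share of powered-on time the platters were estimated to be spinning."""
    return spinning_hours / power_on_hours


power_on = 47_000          # powered hours reported for the original drives
spinning = 2_500 + 1_000   # management hours plus estimated usage hours

print(f"{continuous_years(power_on):.1f} years of continuous power")
print(f"{spinning_fraction(spinning, power_on):.1%} of powered time spent spinning")
```

On those figures the drives have been powered for the equivalent of a little over five years non-stop, but spinning for well under a tenth of that time.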

This will be a worst-case test of the original #2 disk/slot #2 combination and its performance for the next few days. In the Health Log, the older disk #2 runs about 2 °C hotter than the new drive, maybe due to spindle bearing aging. On a cold start the difference is 1 °C, and I'm putting that down to manufacturer's tolerances (?).

Since the new and suspect disks both passed the booting and disk testing, up to the 10 minute grace period, in all 6 slots, I decided to do a fresh install as a two disk X-RAID2.

Placed the new disk in slot #1 and it booted and configured OK.

Then placed the original "suspect disk" in slot #2.

The OS saw the second disk and happily started to re-sync it into the system.

The syncing took about 8 hours. I have the 'shutdown on no activity' set for 10 minutes.

Today is 19 January. The NAS has been stable, with no alarms or log conditions out of the ordinary. See attachment. I think I have the attachment right.