RN3220 / RN4200 Crippling iSCSI Write Performance

I've got a mixed OEM ecosystem with several Tier 2 RN3220s and RN4200s along with Tier 1 EqualLogic storage appliances.

All of the ReadyNAS appliances are fully populated with 12 Seagate Enterprise 4TB drives.

I wanted to be able to use the ReadyNAS units as iSCSI options, particularly for maintenance cycles on the storage network, so I re-purposed them from NFS use. I factory reset them onto 6.6 firmware, and they have since been upgraded to 6.7.1 with no change in symptoms.

- The drives have no errors

- It's a new install, so there are no fragmentation issues etc.

- The NASes use X-RAID

- I've set them up with 2 NIC 1GbE MPIO from the Hyper-V side (I've also tried it on 10GbE and on 1 NIC 1GbE with no impact).

- 9k jumbo frames are on

- There are no teams on the RNs

- Checksum is off

- Quota is off

- iSCSI target LUNs are all thick

- Write caching is on (UPSes are in place)

- Sync writes are disabled

- As many services as I can find are off, there are no SMB shares

- No NetGear apps or cloud services

- No snapshots

The short summary of the problem is that if I migrate a VM's VHDX storage from the EqualLogic onto any of the ReadyNAS appliances, across the same storage network and from the same hypervisor, the data migration takes an eternity, and once the move completes the write performance is utterly, utterly crippled.

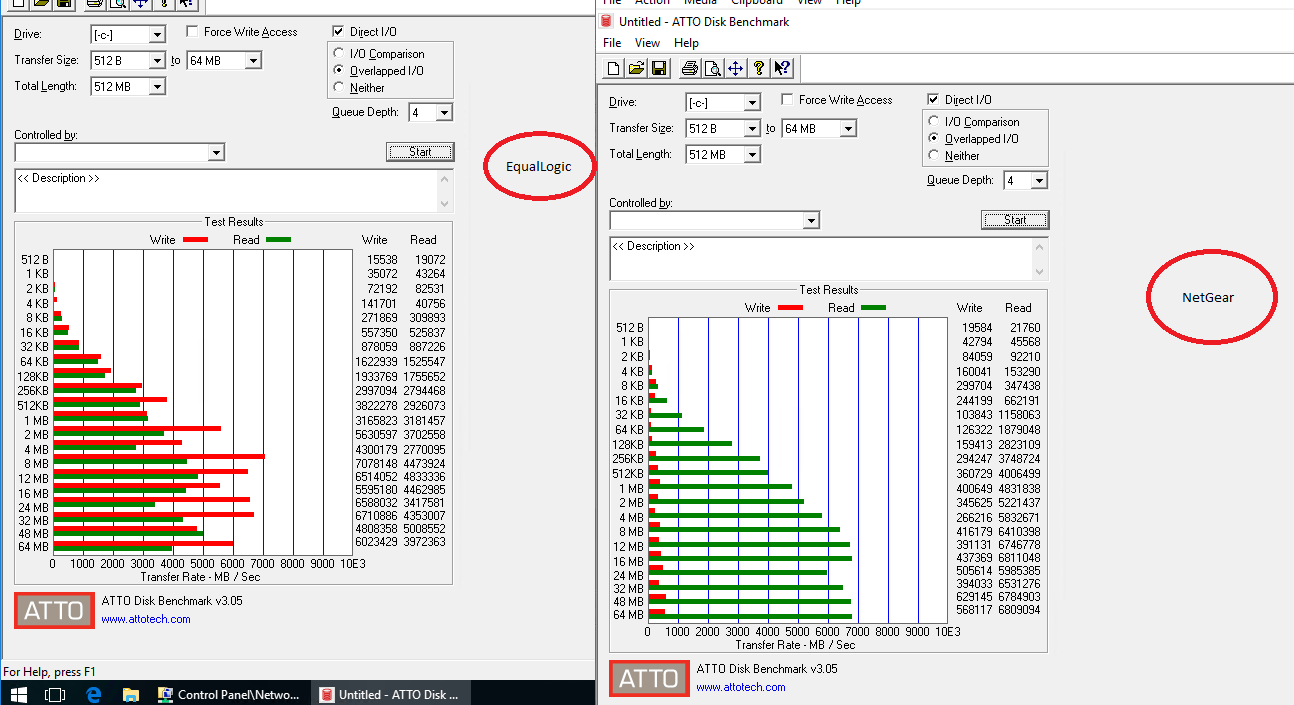

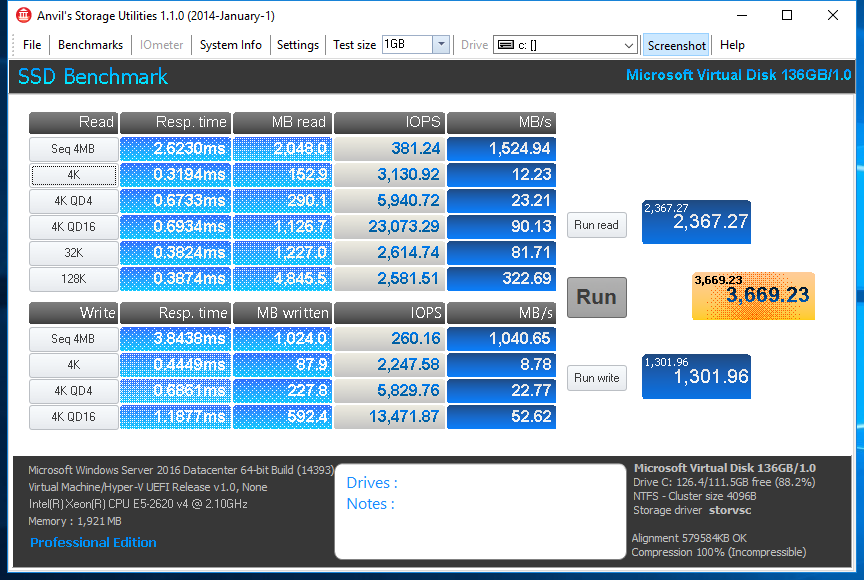

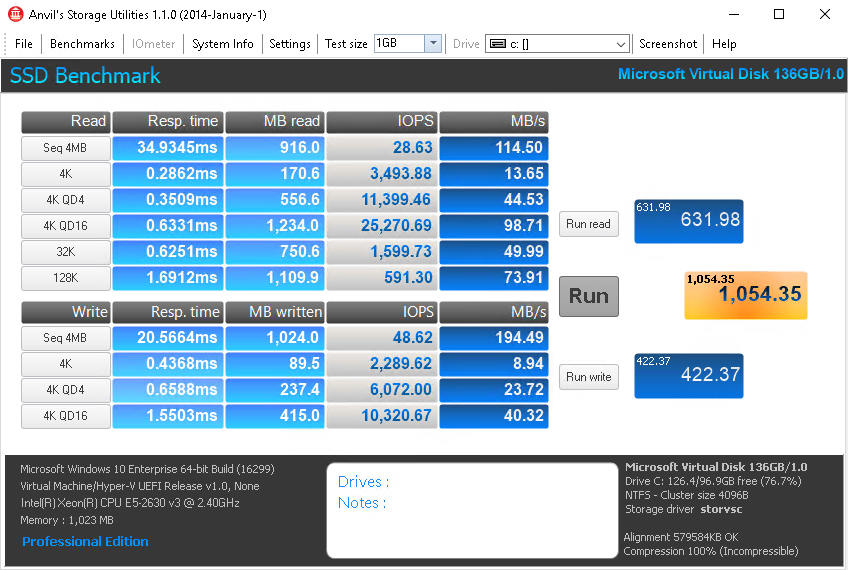

Here are the ATTO stats:

Same VM, same 1GbE network. The results above are over a single 1GbE link, single path, all running through the same switches.

Moving the VM back to the EqualLogic storage takes a fraction of the time to move and the performance of the VM is instantly restored.

According to the ATTO data above, the ReadyNAS should be offering better performance than the substantially more expensive EqualLogic; however, in this condition these units are next to useless.

If I create an SMB share and copy a file across the storage network, I get 113MB/s over a single 1GbE NIC with no problems, so it does not look like a network issue.
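To put the thread's mixed units on one scale, here is a minimal Python sketch that converts the MB/s figures quoted here into Mbps and into a fraction of the link rate. It assumes decimal units (1 MB = 10^6 bytes, 1 Gbps = 1000 Mbps), which matches the thread's own "76.7MB (613.6Mbps)" conversion; the function names are hypothetical.

```python
def mbytes_to_mbits(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (decimal units)."""
    return mb_per_s * 8


def link_utilisation(mb_per_s: float, link_mbps: float = 1000.0) -> float:
    """Fraction of the raw link rate consumed by a given MB/s throughput."""
    return mbytes_to_mbits(mb_per_s) / link_mbps


if __name__ == "__main__":
    # SMB copy over a single 1GbE link, as reported in the post above.
    smb = 113.0
    print(f"SMB copy: {mbytes_to_mbits(smb):.1f} Mbps, "
          f"{link_utilisation(smb):.1%} of 1GbE")
```

113MB/s works out to about 904Mbps, roughly 90% of the raw rate, which is close to the practical ceiling of a 1GbE link once Ethernet, IP and TCP overhead are accounted for.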

Does anyone have any ideas? I have one of the 3220s in a state that I can do anything to.

The only thing that I haven't done is try Flex-RAID instead of X-RAID, but these numbers do not look like a RAID parity calculation issue.

Many thanks,

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

To offer an update:

I've now upgraded the firmware to 6.7.2, with no change in performance.

I deleted the array and created a new RAID6 across all 12 drives using Flex-RAID instead of X-RAID. I let it write the parity overnight, waited for the sync to complete and re-tested. The performance was worse.

Next I deleted the RAID6, recreated it as RAID0 and repeated the test: the same problem, with similarly dire write performance. The increase in read performance is evident, though.

Again, I kept it single-path, with no MPIO. During the VHDX copy into the iSCSI target, the NIC can't get higher than 700Mbps, and the Max value on the RN3220's NIC graph shows 74.1M. The average is about 450Mbps. Sending it the other way caps out at about 800Mbps (I would expect higher, but the test EqualLogic shelf is actually busy in production). There is, however, a very clear difference in write behaviour between the two.

Sending the VHDX from the EqualLogic to the NetGear is bursty! It'll go 200, 700, 100, 300, 500, 400, 700, 200, 400, 600, 700, 400 (Mbps) and so on. Sending the VHDX back to the EqualLogic is completely stable at 800Mbps, within a margin of ~40Kbps. It is as if the RN3220 has filled a buffer and has to back off.
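One way to quantify "bursty" versus "stable" is the coefficient of variation (standard deviation divided by mean) of the sampled throughput. This minimal Python sketch uses the ReadyNAS samples listed above; the EqualLogic samples are hypothetical values chosen only to illustrate "800Mbps within ~40Kbps".

```python
from statistics import mean, pstdev


def coefficient_of_variation(samples: list[float]) -> float:
    """Population standard deviation divided by the mean (0 = perfectly steady)."""
    return pstdev(samples) / mean(samples)


# Throughput samples (Mbps) read off the NIC graph while writing to the RN3220.
to_readynas = [200, 700, 100, 300, 500, 400, 700, 200, 400, 600, 700, 400]

# Hypothetical samples illustrating "800Mbps within ~40Kbps" towards the EqualLogic.
to_equallogic = [800.00, 800.04, 799.96, 800.02, 799.98]

if __name__ == "__main__":
    print(f"ReadyNAS:   mean {mean(to_readynas):.0f} Mbps, "
          f"CV {coefficient_of_variation(to_readynas):.2f}")
    print(f"EqualLogic: mean {mean(to_equallogic):.0f} Mbps, "
          f"CV {coefficient_of_variation(to_equallogic):.5f}")
```

The listed samples average about 433Mbps, in line with the ~450Mbps average reported above, with a CV of roughly 0.46, whereas a link holding 800Mbps to within a few tens of Kbps has a CV close to zero.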

I've also tried it on a hypervisor that does not have Dell HIT installed on it in case the Dell DSM is being "anti-competitive". There was no change.

I've re-confirmed that 9K frames work correctly end to end.
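To make an end-to-end 9K check repeatable, here is a minimal Python sketch that derives the largest unfragmented ping payload for a given MTU and prints don't-fragment ping commands for Windows (`ping -f -l`) and Linux (`ping -M do -s`). It assumes plain IPv4 with no header options and untagged Ethernet. The Windows *JumboPacket mapping (MTU plus the 14-byte Ethernet header) matches the 9000/9014 and 1500/1514 pairs used later in this thread; the function names are hypothetical.

```python
IP_HEADER = 20      # IPv4 header without options
ICMP_HEADER = 8     # ICMP echo header
ETH_HEADER = 14     # Ethernet header (no VLAN tag, no FCS)


def max_icmp_payload(mtu: int) -> int:
    """Largest ping payload that fits in one unfragmented IPv4 packet."""
    return mtu - IP_HEADER - ICMP_HEADER


def windows_jumbo_packet(mtu: int) -> int:
    """Windows *JumboPacket values include the Ethernet header (e.g. 9014 for 9000)."""
    return mtu + ETH_HEADER


def df_ping_commands(host: str, mtu: int) -> dict[str, str]:
    """Ping commands that set the don't-fragment bit, so oversize echoes fail."""
    size = max_icmp_payload(mtu)
    return {
        "windows": f"ping -f -l {size} {host}",
        "linux": f"ping -M do -s {size} -c 4 {host}",
    }


if __name__ == "__main__":
    for name, cmd in df_ping_commands("192.168.171.1", 9000).items():
        print(f"{name}: {cmd}")
```

With the don't-fragment bit set, an 8972-byte echo only succeeds if every hop really passes 9000-byte packets; one byte more should fail.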

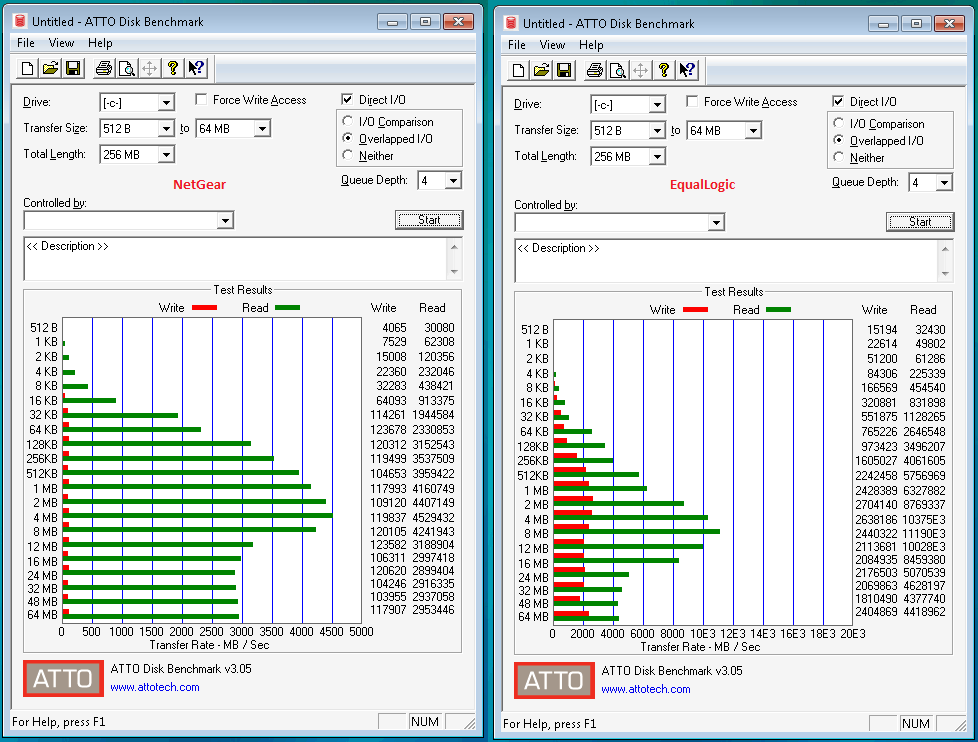

Here is an ATTO retest after everything new that I have tried. The NetGear is RAID0 in this, the EqualLogic RAID10.

Also, to add to the list above: the iSCSI LUNs are formatted as NTFS with a 64K allocation unit size.

p.s. the EqualLogic was extremely busy when I did this, hence the far lower performance numbers.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

I've now tried tuning the network buffers in /etc/sysctl.conf. They are only 206KB by default. I've tried values between that and 12MB, and while there was a slight improvement in read numbers around the 2MB mark, nothing at all happened with the write speeds. The array is still RAID0.
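The post doesn't say which keys were changed. As an assumption, the usual Linux socket-buffer knobs are `net.core.rmem_max`/`net.core.wmem_max` and the `net.ipv4.tcp_rmem`/`net.ipv4.tcp_wmem` min/default/max triplets. This hypothetical Python helper renders sysctl.conf lines for a buffer sweep; the min/default values are placeholder assumptions (common stock values on older kernels), so check your own with `sysctl` before copying them.

```python
MIB = 1024 * 1024


def socket_buffer_sysctl(max_bytes: int,
                         tcp_min: int = 4096,
                         rmem_default: int = 87380,
                         wmem_default: int = 16384) -> list[str]:
    """Render sysctl.conf lines raising the socket buffer ceilings to max_bytes.

    The min/default values are assumptions; only the max is being swept.
    """
    return [
        f"net.core.rmem_max = {max_bytes}",
        f"net.core.wmem_max = {max_bytes}",
        # min / default / max triplets used by TCP buffer autotuning
        f"net.ipv4.tcp_rmem = {tcp_min} {rmem_default} {max_bytes}",
        f"net.ipv4.tcp_wmem = {tcp_min} {wmem_default} {max_bytes}",
    ]


if __name__ == "__main__":
    # Sweep from around the 2MB point where reads improved slightly up to 12MB.
    for size_mib in (2, 4, 8, 12):
        print(f"# {size_mib} MiB")
        print("\n".join(socket_buffer_sysctl(size_mib * MIB)))
```

After editing /etc/sysctl.conf, `sysctl -p` reloads it without a reboot.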

I've also tried as per waldbauer_com's post in

The Windows 7 VM is so sluggish, if you try and do too much at once, it blue screens out.

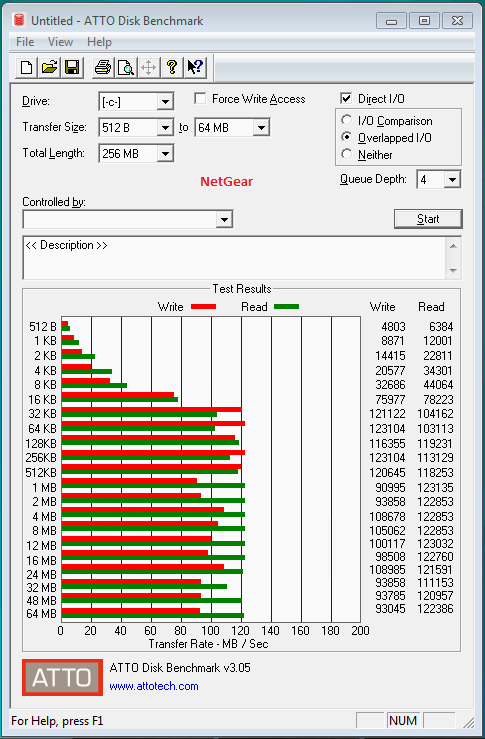

Finally, if I take the LUN out of the CSV and re-mount it (on the same iSCSI connection) as local storage on the hypervisor, this is what happens to the ATTO stats, and quite a lot of the sluggishness from running on a single 1GbE link vanishes too.

So perhaps the issue is actually in NetGear compatibility with CSV?

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

I've now installed an Intel dual-port NIC in the array and connected the 10GbE adapter to the system, and it's offering even worse performance.

Intel DP ET 1000BASE-T Server Adapter:

- Switched

- 9K frames

- Tested as a single port and as dual-port round-robin (RR) MPIO

- Slightly worse read and write performance than the built-in adapters

QLogic BCM57810 10GbE 10GSFP+Cu:

- Point-to-point on a 3m SFP+ DA cable, no switching involved

- No Cluster Shared Volume

- On a dedicated 1TB LUN

- RAID0 underlying array

- Nothing else happening on the array

- All other optimisations as above

So I think it's safe to assume it's not any of the NICs involved here.

First few lines of `top` during a benchmark:

```text
%Cpu(s): 0.0 us, 1.9 sy, 0.0 ni, 97.7 id, 0.3 wa, 0.0 hi, 0.1 si, 0.0 st
KiB Mem: 3926940 total, 3679804 used, 247136 free, 2196 buffers
KiB Swap: 3139580 total, 0 used, 3139580 free. 3318992 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
6306 root 20 0 0 0 0 S 4.0 0.0 5:23.73 iscsi_trx
```
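The `top` snapshot can also be read programmatically. This minimal Python sketch assumes the `%Cpu(s):` line format shown above: it parses the CPU fields and works out how much RAM is page cache. Roughly 97.7% idle and 0.3% iowait suggest the box was not CPU-bound at that instant, and about 85% of memory was cache. It is a single snapshot, so treat it as a hint rather than a diagnosis; the helper names are hypothetical.

```python
def parse_cpu_line(line: str) -> dict[str, float]:
    """Parse a top '%Cpu(s):' line into {'us': 0.0, 'sy': 1.9, ...}."""
    _, fields = line.split(":", 1)
    result = {}
    for field in fields.split(","):
        value, name = field.split()
        result[name] = float(value)
    return result


def cached_fraction(total_kib: int, cached_kib: int) -> float:
    """Share of physical memory currently held as page cache."""
    return cached_kib / total_kib


CPU_LINE = ("%Cpu(s): 0.0 us, 1.9 sy, 0.0 ni, 97.7 id, "
            "0.3 wa, 0.0 hi, 0.1 si, 0.0 st")

if __name__ == "__main__":
    cpu = parse_cpu_line(CPU_LINE)
    print(f"idle {cpu['id']}%, iowait {cpu['wa']}%, system {cpu['sy']}%")
    print(f"page cache: {cached_fraction(3926940, 3318992):.1%} of RAM")
```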

Internally, if I do a large file copy within a VM, it settles at 19.8MB/s.

So basically I've inherited several 4x 1GbE disk arrays that, with 12x 7200 RPM Enterprise SATA drives in RAID 0, cannot write at more than 690Mbps, and only in occasional, infrequent bursts at that?
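To show how little each spindle is being asked to do, here is a back-of-the-envelope Python sketch. It assumes RAID 0 striping spreads writes evenly across all 12 drives and uses the 690Mbps peak quoted above; the function name is hypothetical.

```python
def per_drive_write_mb_s(array_mbps: float, drives: int) -> float:
    """Average write rate per drive if a striped (RAID 0) array splits I/O evenly."""
    return array_mbps / 8 / drives


if __name__ == "__main__":
    # 690Mbps peak burst across a 12-drive RAID 0, from the post above.
    print(f"{per_drive_write_mb_s(690, 12):.1f} MB/s per drive")
```

That is about 7.2MB/s per drive at peak, far below the well over 100MB/s of sequential throughput a single modern 7200 RPM enterprise SATA drive can sustain.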

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Hi mdgm,

Thanks for replying,

I have installed 6.7.3 as advised and rebooted.

Going from 9000 to 1500 MTU on the Netgear (9014 to 1514 on the hypervisor) yields a more stable sequential file transfer when moving the test VM (102GB) back in. It broadly sits between 580 and 660Mbps, predominantly over 600Mbps. This is on 1x 1GbE on the Netgear side. Max Rx on eth2 on the Netgear reads 76.7MB (613.6Mbps).

Here is an odd thing, though: I've set the eth2 IP address to 192.168.171.1 with a 1500 MTU and then rebooted the array, yet I can still ping 192.168.171.1 with an 8000-byte ICMP echo. ARP confirms that it is talking to the correct NIC.
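A possible explanation, offered only as an assumption: Windows `ping` sets the don't-fragment bit only when `-f` is given, so without it an 8000-byte echo is simply split into IPv4 fragments at a 1500 MTU and still gets a reply. This minimal Python sketch counts the fragments, assuming a 20-byte IPv4 header with no options; the function name is hypothetical.

```python
import math

IP_HEADER = 20    # IPv4 header without options
ICMP_HEADER = 8   # ICMP echo header


def fragments_needed(icmp_payload: int, mtu: int) -> int:
    """IPv4 fragments needed for one ICMP echo of the given payload size."""
    ip_data = icmp_payload + ICMP_HEADER          # bytes carried after the IP header
    per_fragment = (mtu - IP_HEADER) // 8 * 8     # non-final fragment data is a multiple of 8
    return math.ceil(ip_data / per_fragment)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {fragments_needed(8000, mtu)} fragment(s) for an 8000-byte ping")
```

At 1500 MTU the echo needs 6 fragments, which reassemble transparently, so the ping succeeds regardless; at 9000 it fits in one packet. Adding `-f` would make the 1500-MTU test fail as expected.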

I've set the hypervisor to 1514

Jumbo Packet Disabled *JumboPacket {1514}

Rebooted the hypervisor.

Inside the CSV:

Not in a CSV (E:\ as a local iSCSI mount): this was painful, as the array had to copy from and to itself via the hypervisor. The hypervisor was receiving and sending on the NIC at 380Mbps, and the Netgear eth2 graph says 43.6MB. A larger burst variance was visible during the copy, between 230 and 480Mbps.

Max values on the NICs have dropped to 55.8MB, which is presumably down to the lack of 9K frames.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

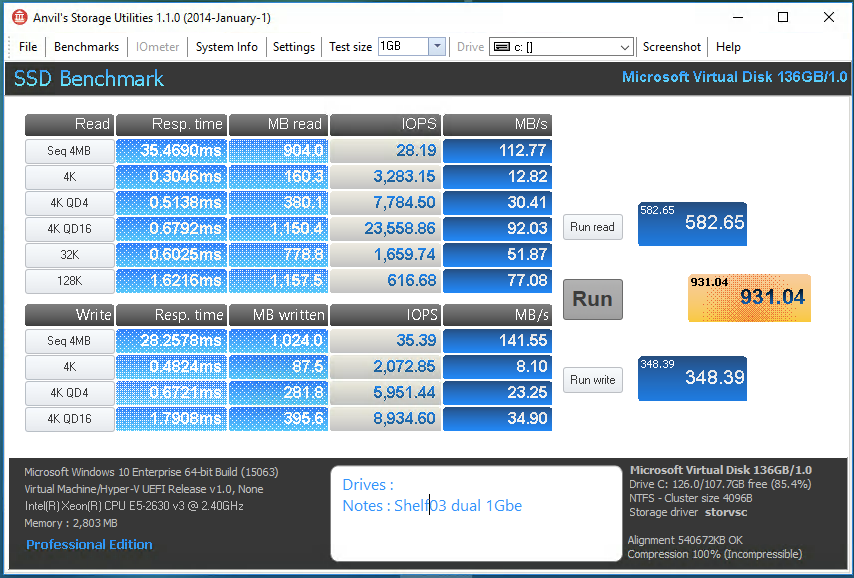

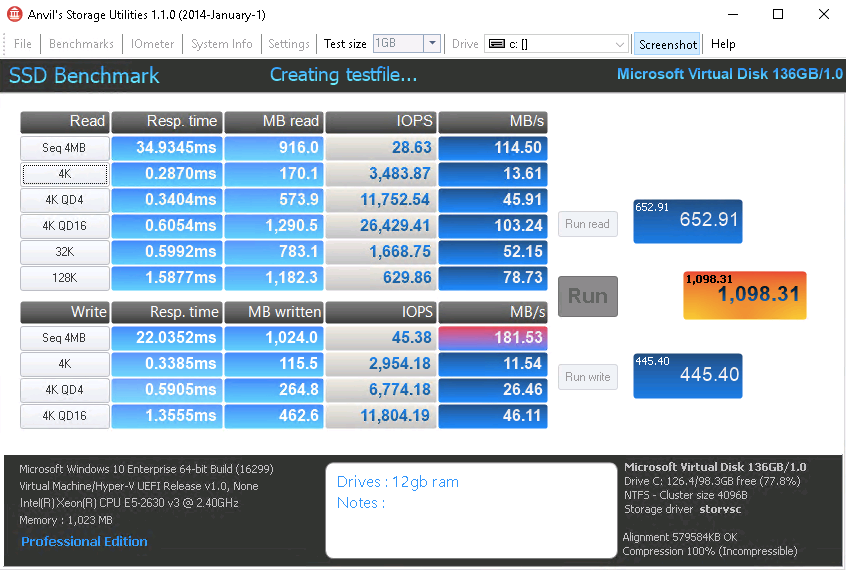

Incidentally, these are the numbers from a QNAP TVS-1271U-RP with 12x 4TB WD Red over the 10GbE controller, same switch fabric, on a very similar hypervisor (PE R630) nearby, on local iSCSI (not CSV).

Spot the difference...

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

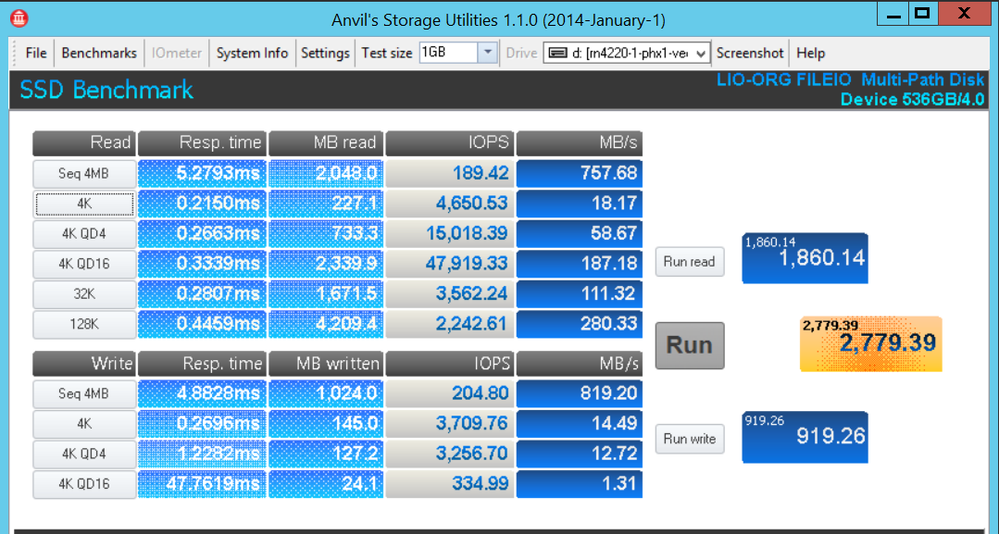

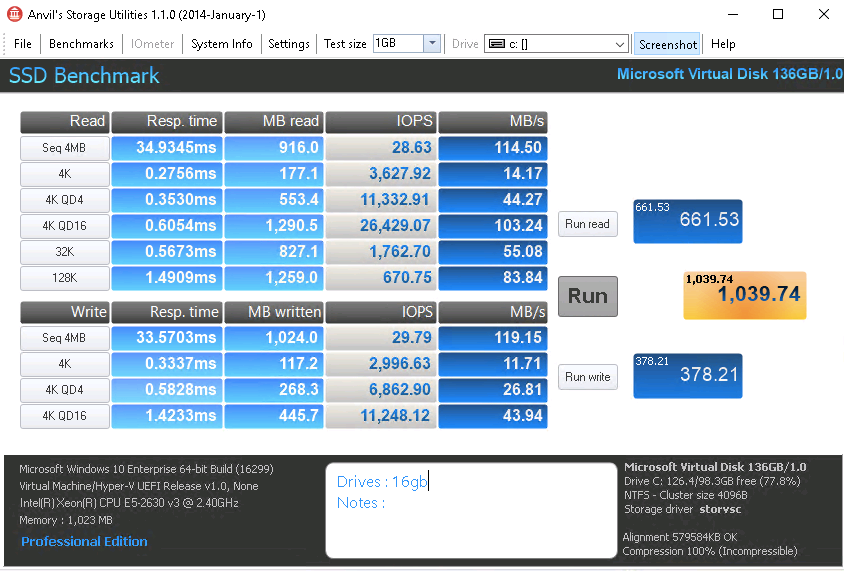

Well this is a bit better!

Clean installed onto OS 6.8.0 and built onto a new X-RAID 10; all other fabric and infrastructure is identical to previous posts. This is a non-CSV test:

Keeping in mind that we started with a sequential 4MB write of 35.9MB/s, this is positively glorious. Not amazing, but the VM isn't unusable!

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

I'm having an almost identical experience (and configuration) with my 4220: dual 10Gb Intel NICs, a 10Gb switch, jumbo frames enabled end to end (I've tried standard frames as well), and also a cluster with a CSV. As you experienced, local disk performance is excellent, but the minute I put a VM in the CSV the performance is nearly unusable. I've been fighting this for a week (actually, for almost a year: I'm switching from DPM to Veeam because of all the problems I had with DPM, only to now realize that my problem was likely the ReadyNAS). I'm installing a couple of new VMs, and after nearly 4 hours the OS still isn't installed in them.

Performance on the same hosts to our EQL is amazing... so it's not a network fabric issue.

Do you feel like your "fix" was to factory reset the device and reconfigure?

I just updated to 6.8.1 this past weekend with no change, but I haven't done a factory reset.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Basically, the short answer is that for CSV use, it isn't fixed. It just doesn't work. It never did for me. OS 6.8 did give an improvement in local iSCSI performance, though, with a cleanly reinstalled and fully synced X-RAID volume (see the above post).

In replying to you I thought that I would copy a 4GB file into a Windows 10 1709 test VM planted into a CSV on the RAID10 RN3220. It is BURSTING at 2.77MB/s and by bursting, I mean copying 2.77MB and then returning to 0MB/s for 4 seconds before bursting again. Disk latencies are between 0.7ms and 3.27ms.
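To see what that stop-start pattern means for a whole copy, here is a small Python sketch of the duty-cycle arithmetic. The 2.77MB burst and 4 seconds of idle come from the post; the 1-second burst length and treating 4GB as 4096MB are assumptions, and the function names are hypothetical.

```python
def effective_rate(burst_mb: float, burst_s: float, idle_s: float) -> float:
    """Average MB/s for a transfer that moves burst_mb, then stalls for idle_s."""
    return burst_mb / (burst_s + idle_s)


def copy_time_hours(size_mb: float, rate_mb_s: float) -> float:
    """Hours needed to move size_mb at a steady rate_mb_s."""
    return size_mb / rate_mb_s / 3600


if __name__ == "__main__":
    # 2.77MB bursts followed by 4s at 0MB/s; the 1s burst length is a guess.
    rate = effective_rate(2.77, burst_s=1.0, idle_s=4.0)
    print(f"{rate:.3f} MB/s, about {copy_time_hours(4096, rate):.1f} h for 4GB")
```

Under those assumptions the copy averages about 0.55MB/s, so a 4GB file would take roughly two hours.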

It sounds as though, like me, you are not having fabric issues: 4x aggregate 1GbE or single/dual SFP+ DA 10GbE makes no difference. Over CSVL iSCSI there is nothing that you can do.

Take it out and use it as local storage and it will give usable but mediocre performance. An identical QNAP with the same 7200 RPM Seagate Enterprise drives puts it completely to shame. It isn't enterprise hardware, that much is true. Yet if, after this many OS releases, there hasn't been any change in performance characteristics, we can assume that there isn't going to be.

If you want to run iSCSI on a low-RPM SATA array, and especially if you want to use a cluster or concurrent-access file system, stay clear of Netgear. It isn't the product for you.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Thanks for your follow-up. I did factory reset and rebuild the X-RAID volume last night, and I was able to get "better" performance out of the device today (even after giving up the IOPS of two drives to make them global spares). One thing I noted: I had to disable sync writes on EVERY LUN. When I disabled it on just one LUN nothing improved, but after I disabled it on every LUN, performance improved. This may be a bug (or limitation).

I hate to always come to the forum and bash Netgear, but I agree the overall enterprise readiness of the devices seems to have degraded (as you may note from my previous posts). I have had nothing but problems with the new devices, and my love for them came from the rock-solid behavior and performance of the older models (with 4.x firmware). That just doesn't seem to be the case any more, unfortunately.

Oh and the cheap plastic drive trays are cracking at the screws. Apparently this plastic cracks in datacenter temperatures...lol

I may give QNAP or Synology a spin... I know many people swear by them.

Luckily, we are keeping our EQL for production data, and this has always been (and will always be) a backup target/location, but I'm just floored that I can't even run a single 500GB LUN with a few VMs on it with adequate performance.

Thanks again!

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

I haven't observed the sync writes performance behaviour you describe, but I've always turned it off because I could see it was a problem way back when I inherited the array (and this headache).

The irony is that a 2 drive, 1 NIC ReadyNAS Duo v2 with a VHDX sitting on it gives better performance than a 12 drive RN3220/RN4220X does.

For the hell of it, I just spun up a 0.5TB local iSCSI target using 2x 1GbE (i.e. not in the CSVL) to see what the performance was like with the same VM on the same hypervisor that I used for that 4GB test copy, using the same file from the same source. It still bursts, but it bursts between 40MB/s and 74MB/s. Somewhat better than 0 to 2.77MB/s...

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

That does seem REALLY bad... I think my performance is much better than yours. Do you have stock/default memory in it? (I think 8GB?).

I upgraded ours to 32GB right off the bat (the memory they shipped with it was bad!!!...and I had 4 sticks laying around from other projects I used).

I wonder if a memory upgrade would help.

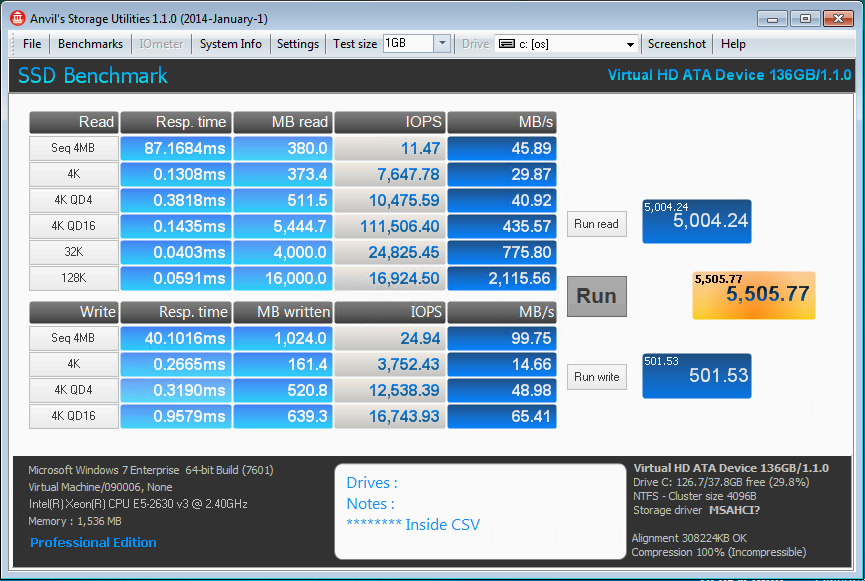

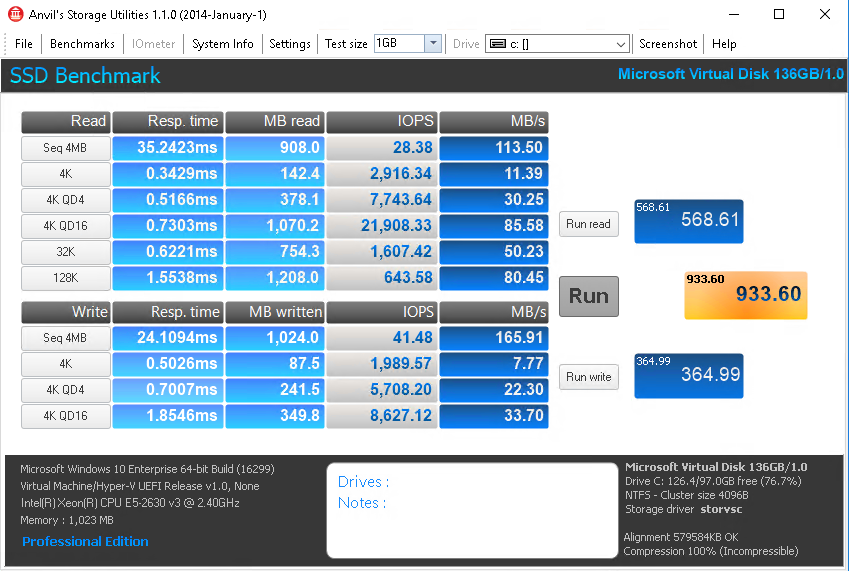

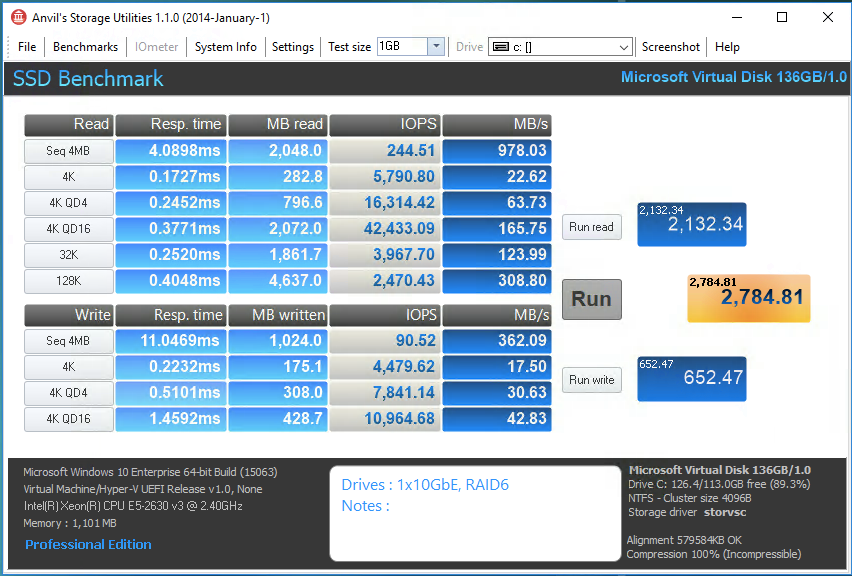

Here's where I'm at right now...which is acceptable (but this is under 0 load with no VMs running, and NOT in CSV).

10 drive X-RAID RAID6 (2 global spares). Just factory reset and rebuilt on 6.8.1. Dual 10Gb Intel X520 NICs (not the crap they come with), jumbo frames

This is WAY better than I was getting yesterday (total score was 1,045)

I used all defaults in the software.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Not something I have much experience with, but I do wonder if RAID-50 would give faster speeds.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Yea, that would definitely help.

If you're looking to test the theoretical maximum performance you can get from your box, 12 drives in a RAID0 would remove any disk bottlenecks. Then you'd have to figure out where the next bottleneck is.

Obviously RAID0 isn't the best choice for critical production data, so RAID50 or 60 would be a likely choice if you can take the hit on total disk space. I'm not willing to give up 1/2 my disk space, though, so RAID6 is better for me (and in my configuration I lose 1/3 of my total disk space).
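How much raw capacity each layout gives up depends on the drive count, hot spares and group count. This minimal Python sketch assumes 12x 4TB drives, two equal groups for RAID 50/60 and no filesystem overhead; the helper name is hypothetical. The "lose 1/3" figure above corresponds to RAID6 across 10 drives with 2 global spares, that is, 4 of 12 drives not holding data.

```python
def usable_drives(layout: str, drives: int, spares: int = 0, groups: int = 2) -> int:
    """Data-bearing drive count for common RAID layouts (hot spares excluded)."""
    n = drives - spares
    if layout == "raid0":
        return n
    if layout == "raid10":
        return n // 2              # every block is mirrored once
    if layout == "raid5":
        return n - 1
    if layout == "raid6":
        return n - 2
    if layout == "raid50":
        return n - groups          # one parity drive per RAID 5 group
    if layout == "raid60":
        return n - 2 * groups      # two parity drives per RAID 6 group
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    drives, size_tb = 12, 4
    raw = drives * size_tb
    cases = [("raid6", 2), ("raid10", 0), ("raid50", 0), ("raid60", 0)]
    for layout, spares in cases:
        usable = usable_drives(layout, drives, spares) * size_tb
        print(f"{layout} ({spares} spares): {usable} TB usable, "
              f"{1 - usable / raw:.0%} of raw given up")
```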

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Quite! Those numbers are closer to the QNAP array figures.

I'm not using 10GbE of course, which will add a little pep to the numbers.

RAID10, 12 drives, 6.8.1, Dual 1GbE (onboard). As you can see, dreadful.

Are you running Hyper-V, VMware or Xen? Was your VHDX dynamic or fixed?

RAM: Yes, I did consider it as there are only 8GB in both, but the system never reports using it if you SSH into it. I don't have any compatible sticks lying around though to try and I'm not prepared to spend on these at this point. Chicken or Egg?

nxtgen, if you scroll back through the thread you'll see that I did RAID0 it, with no changes in the numbers. I've also done RAID6 and RAID10. There's virtually nothing between them in CSVL or local numbers.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

For the heck of it, I converted the test VM from a dynamic to a fixed size VHDX and re-ran the numbers

The slight reduction in performance is likely just because we're into business hours now and the system is getting busier. So it isn't inefficiencies in dynamic disks.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

In addition to trying RAID-50 you may also wish to consider trying SSD Metadata Tiering (a new feature in 6.9.0).

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

That is a very nice feature to have, and I compliment Netgear on finally adding it, along with the other changes in the 6.9.0 changelog. However, sticking an SSD cache plaster over a fundamental problem won't address the bottleneck in the product line, and it encourages people to spend more money on a solution that masks the problem instead of fixing it. Without knowing what the bottleneck is, it may not even fix the problem to begin with.

It also means pulling out two drives from the already populated 12 drive array.

I've got one of the arrays updating to 6.9.0 at the moment.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Here we go, hot off the press.

This is an identically spec'd RN3220, with identical config, wiring etc., speaking to the same hypervisor over 2x 1GbE. The only config difference is that this is on RAID6 rather than RAID10, which my 'usual' victim shelf is currently running with.

This was a dirty upgrade, not a clean array rebuild.

An improvement within the margin of error.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Hyper-V with dynamic VHDX files. The LUNs are fixed/thick though.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

I think he is getting a lot more than "a little pep" out of 10GbE.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Side note (and I don't remember if this was mentioned): you should be using MPIO as well. "Bonding"/load balancing/LACP don't provide the same performance as MPIO.
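The usual reason for this is that link aggregation balances per flow, and a single iSCSI session is one TCP connection, so it always lands on one link. MPIO instead opens a session per path and spreads I/Os across them. Below is a toy Python model of that difference, not any switch's or initiator's actual algorithm: CRC32 stands in for the vendor's layer 3+4 hash, the IP addresses and source port are hypothetical, and 3260 is the standard iSCSI port.

```python
import zlib
from collections import Counter


def lacp_link(src_ip: str, src_port: int, dst_ip: str, dst_port: int, links: int) -> int:
    """Stand-in for a layer 3+4 hash: the same flow always maps to the same link."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % links


def lacp_distribution(ios: int, links: int) -> Counter:
    # A single iSCSI session is a single TCP connection, so every I/O shares one flow.
    flow = ("10.0.0.10", 50123, "10.0.0.20", 3260)
    return Counter(lacp_link(*flow, links) for _ in range(ios))


def mpio_round_robin(ios: int, paths: int) -> Counter:
    # Round-robin MPIO: one session per path, I/Os rotated across the paths.
    return Counter(i % paths for i in range(ios))


if __name__ == "__main__":
    print("LACP:", dict(lacp_distribution(1000, 2)))
    print("MPIO:", dict(mpio_round_robin(1000, 2)))
```

In the model, all 1000 I/Os of the aggregated session use one link, while round-robin MPIO splits them 500/500 across two paths.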

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

So to clarify after the above replies:

- I'm ONLY using fixed-size LUNs on the RNs

- I've tried both fixed and dynamic VHDXs; there's virtually nothing between them stat-wise

- Sync writes are disabled

- Copy on write isn't used anywhere

- I agree, you should never team iSCSI, that's page 1 stuff. I've tested with single channel or MPIO in dual channel

Based on the previous comment on RAM from nxtgen, I temporarily pulled some DDR3 1866 DIMMs from a server and swapped them in for the DDR3 1600 DIMMs. The first test ran with 12GB in single channel, because of the 3 DIMMs.

The second ran with 16GB, all DIMMs identical, in dual channel.

This is the RAID6 array again, with nothing else on it, still running 6.9.0 and local iSCSI, not CSVL. I repeated the test with MPIO on and off. Writes get dented slightly in no MPIO mode, but that 4MB sequential number just won't move up on these arrays.

As you can see, a whole lot of nada. Running top over SSH showed that, with enough activity, the system will claim the full amount for cache, leaving about 400MB free every time.

Rebooting the NAS, booting the VM and running Anvil leaves the NAS with about 4.4GB of RAM used and the rest free.

I didn't screen shot it, but I put the array down to 4GB RAM, single channel and ran it again. The numbers were identical! No difference!!!

Copy a file to them over SMB or NFS and the arrays will saturate the link(s), no issues there. It's purely the iSCSI.

Re: RN3220 / RN4200 Crippling iSCSI Write Performance

Now that we've got the 1GbE write up to par (remember we started at 34MB/s), I've gone back to the 10GbE.

RAID6 array, 1x10GbE on TwinAx DAC, local, not CSVL (CSV doesn't work still)

It isn't anywhere near touching the QNAP with 12x 7200 RPM drives, and the writes are still way down, but it is better than it was.