Remove inactive volumes to use the disk. Disk 1,2,3,4,1,2,3,4

Hello,

I updated my ReadyNAS 104 to firmware 6.9.5 a few days ago.

It had been running a bit slowly until today, when I lost write access to every share. I rebooted the device and was able to browse all my shares, but when I tried to write a file to a folder the write halted.

I checked the logs and found this error:

"crit:volume:LOGMSG_SYSTEM_USAGE_WARN System volume root's usage is 96%. This condition should not occur under normal conditions. Contact technical support."

I did some searching and thought this might be caused by the antivirus. I SSH'd into the device and cleared out all the temp files for ClamAV, following the solution posted here: https://community.netgear.com/t5/Using-your-ReadyNAS-in-Business/System-volume-root-s-usage-is-90-RN...

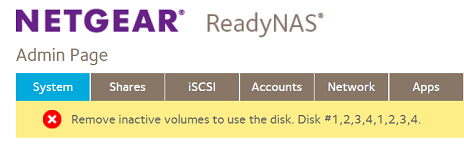

I'm no longer receiving this error. Hoping this had fixed the issue, I restarted the device again, and this time I was greeted on the admin page with the following error:

"Remove inactive volumes to use the disk. Disk #1,2,3,4,1,2,3,4"

I've checked through the logs but can't find anything obvious that may have caused this error.

Currently populated with 4x 4TB NAS HDDs. Of course, I do not have a backup of this data so factory reset/wipe is not an option. This is a home NAS used for storing files and media. Current status: "Healthy"

This ReadyNAS has been running for just over 6 years, so all warranty and support have expired. I'd rather not pay hundreds of dollars if there is a simple solution, such as SSH in, change a line or two, and reboot.

I'm happy to share my logs if anyone can assist in finding a solution to this error.

Many thanks. 🙂

Re: Remove inactive volumes to use the disk. Disk 1,2,3,4,1,2,3,4

You are welcome to upload the logs to Google Drive or a similar service and PM me the link. Then I can take a look for you. Cheers

Re: Remove inactive volumes to use the disk. Disk 1,2,3,4,1,2,3,4

PM sent. 🙂

Re: Remove inactive volumes to use the disk. Disk 1,2,3,4,1,2,3,4

Hi again @caraz

Thanks for sending over the logs.

Your disks and your RAID arrays are healthy, so the problem is not hardware. The error appears because your filesystem cannot mount. From the kernel logs:

    Mar 29 11:41:01 kernel: md126: detected capacity change from 0 to 6000767729664
    Mar 29 11:41:01 kernel: BTRFS: device label 0e36b824:data devid 2 transid 315571 /dev/md126
    Mar 29 11:41:02 kernel: BTRFS error (device md126): bad tree block start 2350059173328504026 19741748822016
    Mar 29 11:41:02 kernel: BTRFS error (device md126): bad tree block start 2350059173328504026 19741748822016
    Mar 29 11:41:02 kernel: BTRFS warning (device md126): failed to read tree root
    Mar 29 11:41:02 kernel: BTRFS error (device md126): bad tree block start 2350059173328504026 19741748822016
    Mar 29 11:41:02 kernel: BTRFS error (device md126): bad tree block start 2350059173328504026 19741748822016
    Mar 29 11:41:02 kernel: BTRFS warning (device md126): failed to read tree root
    Mar 29 11:41:44 kernel: BTRFS error (device md126): logical 19742819614720 len 1073741824 found bg but no related chunk
    Mar 29 11:41:44 kernel: BTRFS error (device md126): failed to read block groups: -2
    Mar 29 11:41:44 kernel: BTRFS error (device md126): failed to read block groups: -17
    Mar 29 11:41:44 kernel: BTRFS error (device md126): failed to read block groups: -17
    Mar 29 11:41:45 kernel: BTRFS error (device md126): open_ctree failed

The ReadyNAS daemon (readynasd) cannot see the filesystem properly. Likely it is having issues reading the superblocks, or it cannot read attributes from the filesystem.

    Mar 29 11:53:03 readynasd[2856]: ERROR: not a btrfs filesystem: /data
    Mar 29 11:53:03 readynasd[2856]: ERROR: not a btrfs filesystem: /data
    Mar 29 11:53:03 readynasd[2856]: ERROR: can't access '/data'

The filesystem is "crashing" and we see stack traces in the kernel logs as well. The warning is raised from the btree checksum code (btree_csum_one_bio), which points to metadata corruption.

    Mar 29 09:35:04 kernel: [<c0023474>] (warn_slowpath_null) from [<c0285358>] (btree_csum_one_bio+0xb4/0xe0)
    Mar 29 09:35:04 kernel: [<c0285358>] (btree_csum_one_bio) from [<c0283e38>] (run_one_async_start+0x30/0x40)
    Mar 29 09:35:04 kernel: [<c0283e38>] (run_one_async_start) from [<c02c1e74>] (normal_work_helper+0xe0/0x1a4)
    Mar 29 09:35:04 kernel: [<c02c1e74>] (normal_work_helper) from [<c0036074>] (process_one_work+0x1cc/0x314)
    Mar 29 09:35:04 kernel: [<c0036074>] (process_one_work) from [<c00364f0>] (worker_thread+0x304/0x41c)
    Mar 29 09:35:04 kernel: [<c00364f0>] (worker_thread) from [<c003acec>] (kthread+0x108/0x120)
    Mar 29 09:35:04 kernel: [<c003acec>] (kthread) from [<c000ed00>] (ret_from_fork+0x14/0x34)
    Mar 29 09:35:04 kernel: ---[ end trace f426276db0176d56 ]---
    Mar 29 09:35:04 kernel: WARNING: CPU: 0 PID: 13 at fs/btrfs/disk-io.c:541 btree_csum_one_bio+0xb4/0xe0()
    Mar 29 09:35:04 kernel: Modules linked in: vpd(PO)
    Mar 29 09:35:04 kernel: CPU: 0 PID: 13 Comm: kworker/u2:1 Tainted: P W O 4.4.157.armada.1 #1
    Mar 29 09:35:04 kernel: Hardware name: Marvell Armada 370/XP (Device Tree)
    Mar 29 09:35:04 kernel: Workqueue: btrfs-worker btrfs_worker_helper
    Mar 29 09:35:04 kernel: [<c00151ec>] (unwind_backtrace) from [<c00116d0>] (show_stack+0x10/0x18)
    Mar 29 09:35:04 kernel: [<c00116d0>] (show_stack) from [<c0371b10>] (dump_stack+0x78/0x9c)
    Mar 29 09:35:04 kernel: [<c0371b10>] (dump_stack) from [<c0023434>] (warn_slowpath_common+0x80/0xa8)
    Mar 29 09:35:04 kernel: [<c0023434>] (warn_slowpath_common) from [<c0023474>] (warn_slowpath_null+0x18/0x20)
    Mar 29 09:35:04 kernel: [<c0023474>] (warn_slowpath_null) from [<c0285358>] (btree_csum_one_bio+0xb4/0xe0)
    Mar 29 09:35:04 kernel: [<c0285358>] (btree_csum_one_bio) from [<c0283e38>] (run_one_async_start+0x30/0x40)
    Mar 29 09:35:04 kernel: [<c0283e38>] (run_one_async_start) from [<c02c1e74>] (normal_work_helper+0xe0/0x1a4)
    Mar 29 09:35:04 kernel: [<c02c1e74>] (normal_work_helper) from [<c0036074>] (process_one_work+0x1cc/0x314)
    Mar 29 09:35:04 kernel: [<c0036074>] (process_one_work) from [<c00364f0>] (worker_thread+0x304/0x41c)
    Mar 29 09:35:04 kernel: [<c00364f0>] (worker_thread) from [<c003acec>] (kthread+0x108/0x120)
    Mar 29 09:35:04 kernel: [<c003acec>] (kthread) from [<c000ed00>]
    Mar 29 09:35:04 kernel: ---[ end trace f426276db0176d57 ]---
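If you want to pull lines like these out of a large log bundle yourself, a small script can tally them. This is only a rough sketch: the function name `summarize_btrfs` and the regular expression are my own, written to match the message format quoted above, and the sample lines are copied from this thread rather than read from a file.

```python
import re
from collections import Counter

# Matches kernel lines of the form seen in this thread, e.g.
#   Mar 29 11:41:45 kernel: BTRFS error (device md126): open_ctree failed
# The pattern is an assumption based on those excerpts only.
BTRFS_LINE = re.compile(
    r"kernel: BTRFS (?P<level>error|warning) "
    r"\(device (?P<device>\w+)\): (?P<message>.*)$"
)


def summarize_btrfs(lines):
    """Count BTRFS errors and warnings per (device, level, message)."""
    counts = Counter()
    for line in lines:
        match = BTRFS_LINE.search(line)
        if match:
            key = (match["device"], match["level"], match["message"].strip())
            counts[key] += 1
    return counts


# Sample lines copied from the kernel log excerpt in this thread.
sample = [
    "Mar 29 11:41:01 kernel: md126: detected capacity change from 0 to 6000767729664",
    "Mar 29 11:41:02 kernel: BTRFS warning (device md126): failed to read tree root",
    "Mar 29 11:41:44 kernel: BTRFS error (device md126): failed to read block groups: -17",
    "Mar 29 11:41:44 kernel: BTRFS error (device md126): failed to read block groups: -17",
    "Mar 29 11:41:45 kernel: BTRFS error (device md126): open_ctree failed",
]

for (device, level, message), count in summarize_btrfs(sample).most_common():
    print(f"{device} {level} x{count}: {message}")
```

To run it against a real log, pass an open file object instead of `sample`. It only reads text, so it is safe to run on a copy of the logs on another machine.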

BTRFS does have great tools to deal with this kind of problem. However, unless you are very familiar with Linux and BTRFS I suggest that you reach out to NETGEAR and ask their assistance in recovering from this.

It is difficult to say how deep that corruption goes and when it really started. The 96% full root volume error that you got is unlikely to be a cause as that refers to the OS and not the data volume. I suspect that the issue has been ongoing for a while and gotten worse over time.
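To see that the two volumes are reported separately, you can compare usage on the OS root and on the data mount point. The sketch below uses Python's standard `shutil.disk_usage`; the helper name `usage_percent` is mine, and `/data` is taken from the readynasd messages above. With the data volume unmounted, that path may be missing or may simply report the root filesystem, so treat the second number with care.

```python
import shutil


def usage_percent(path):
    """Return used space on the filesystem holding `path`, as a percentage."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


# "/" is the OS root volume; "/data" is the data volume mount point
# named in the readynasd log lines (assumed, may not exist elsewhere).
for mount in ("/", "/data"):
    try:
        print(f"{mount}: {usage_percent(mount):.0f}% used")
    except OSError as exc:
        print(f"{mount}: cannot read usage ({exc})")
```

The figure can differ by a point or two from what `df` shows, because `df` calculates its percentage against space available to non-root users.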

The chances of recovery are hard to estimate, but as you don't have a backup you have to go that route.

NETGEAR are quite good at dealing with these cases, but it is going to cost you a couple of hundred bucks for the data recovery contract, and it will be "best effort". Again, with no backup to restore from, it is certainly worth doing.

Sorry, I couldn't bring you better news 😞

Cheers
