RN 316 - Raid 5 config, Data Volume Dead

Hello. I have a ReadyNas 316 with 6x hd running in a raid 5 configuration. The other day I had disk2 go from ONLINE to FAILED and the system entered a degraded state. This forced a resync. As it was resyncing, I then had a failure in disk3, and it went from ONLINE to FAILED. This caused disk2 to change from RESYNC to ONLINE, but the whole system moved from degraded to failed. Now it's stating the Volume: Volume data is Dead. At this point, I have powered down the system.

These hard drives are more than 5-6 years old and non enterprise. In hindsight, I should *not* have allowed the resync to occur and instead performed a backup of the NAS before resyncing the data. The resyncing of the raid array taxed disk3 resulting in its failure and breaking the whole system which I’m learning is quite common. At this point, I have lost 2 disks of my 6 hds, thus making recovery via the raid 5 configuration impossible.

I believe I can be certain that data in disk2 is gone. However, it is possible that I may be able to recover disk3 despite it being marked FAILED.

Based on my preliminary research, what I need to do is clone each drive to preserve whatever data is recoverable. If I’m lucky and can access disk 3 and clone it (along with disk 1,4,5,6) and not run into any other issues, I *should* be able to rebuild/recover the raid array as I have enough drives to do so.

I may consider professional data recovery, but I do want to assess if I could do this on my own first and what costs of that could look like. I know professional recovery can be very expensive and is not 100% guaranteed.

Now I need to formulate a plan. But some questions first:

- The raid 5 configuration was set up using Netgear’s X-Raid. Does this imply rebuilding this raid array outside of Netgear’s ReadyNAS HW is impossible? I think X-Raid is proprietary to Netgear?

- Can I say with certainty that disk2 is useless in the context of using it for any data recovery, hence I can skip cloning it? It's the drive that first FAILED, which started the resyncing process, which never completed. I think any data that existed here to recover the raid array is gone or not useable from the incomplete resync. Am I wrong?

- Cloning every drive to preserve their data would require me purchasing 6 new older hd models that are compatible with the ReadyNAS 316. Before I make this investment, I do want to be able to get some confidence or indication that I can recover the data. What's the best way to start? I'm thinking maybe I just attempt to clone disk3, then replace it with the new clone in ReadyNAS 316.

Appreciate all help!

PS. Pasting the last batch of logs that led to this failure state:

Mar 27, 2024 01:00:17 AM Volume: Volume data is Dead.

Mar 26, 2024 10:23:57 PM Disk: Detected increasing ATA error count: [334] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0, WD-WMC4N1002183] 2 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 10:22:45 PM Disk: Disk in channel 2 (Internal) changed state from RESYNC to ONLINE.

Mar 26, 2024 10:22:34 PM Disk: Disk in channel 3 (Internal) changed state from ONLINE to FAILED.

Mar 26, 2024 10:22:34 PM Volume: Volume data health changed from Degraded to Dead.

Mar 26, 2024 08:17:57 PM Disk: Detected increasing reallocated sector count: [1967] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 53 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 08:15:46 PM Disk: Detected increasing reallocated sector count: [1959] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 52 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 08:09:11 PM Disk: Detected increasing reallocated sector count: [1954] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 51 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 08:00:48 PM Disk: Detected increasing reallocated sector count: [1948] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 50 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 07:59:22 PM Disk: Detected increasing reallocated sector count: [1923] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 49 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 07:56:36 PM Disk: Detected increasing reallocated sector count: [1758] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 48 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 07:55:23 PM Disk: Detected increasing reallocated sector count: [1757] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 47 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 07:52:13 PM Disk: Detected increasing reallocated sector count: [1718] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 46 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 07:50:55 PM Disk: Detected increasing reallocated sector count: [1713] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 45 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 07:48:37 PM Disk: Detected increasing reallocated sector count: [1696] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 44 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 06:54:00 PM Disk: Disk Model:WDC WD30EFRX-68EUZN0 Serial:WD-WMC4N0956058 was added to Channel 2 of the head unit.

Mar 26, 2024 06:53:43 PM Disk: Disk Model:WDC WD30EFRX-68EUZN0 Serial:WD-WMC4N0956058 was removed from Channel 2 of the head unit.

Mar 26, 2024 06:52:29 PM Disk: Detected increasing reallocated sector count: [1663] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 43 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 06:52:28 PM Disk: Disk in channel 2 (Internal) changed state from ONLINE to FAILED.

Mar 26, 2024 06:52:22 PM Volume: Volume data health changed from Redundant to Degraded.

Mar 26, 2024 06:44:27 PM Disk: Detected increasing reallocated sector count: [1439] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 42 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 06:43:14 PM Disk: Detected increasing reallocated sector count: [1407] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 41 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 06:13:26 PM Disk: Detected increasing reallocated sector count: [1361] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 40 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 04:37:29 PM Disk: Detected increasing reallocated sector count: [1360] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 39 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 04:20:57 PM Disk: Detected increasing reallocated sector count: [1326] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 38 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 04:18:32 PM Disk: Detected increasing reallocated sector count: [1325] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 37 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 03:37:44 PM Disk: Detected increasing reallocated sector count: [1317] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 36 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 01:38:10 PM Disk: Detected increasing reallocated sector count: [1314] on disk 3 (Internal) [WDC WD30EFRX-68EUZN0 WD-WMC4N1002183] 35 times in the past 30 days. This condition often indicates an impending failure. Be prepared to replace this disk to maintain data redundancy.

Mar 26, 2024 11:21:25 AM Volume: Scrub started for volume data.

Mar 26, 2024 10:12:34 AM Volume: Disk test failed on disk in channel 5, model WDC_WD3003FZEX-00Z4SA0, serial WD-WCC130725037.

Mar 26, 2024 10:12:33 AM Volume: Disk test failed on disk in channel 3, model WDC_WD30EFRX-68EUZN0, serial WD-WMC4N1002183.

Mar 26, 2024 10:12:33 AM Volume: Disk test failed on disk in channel 2, model WDC_WD30EFRX-68EUZN0, serial WD-WMC4N0956058.

Re: RN 316 - Raid 5 config, Data Volume Dead

@halbertn wrote:

- The raid 5 configuration was set up using Netgear’s X-Raid. Does this imply rebuilding this raid array outside of Netgear’s ReadyNAS HW is impossible? I think X-Raid is proprietary to Netgear?

X-RAID is built on Linux software RAID (mdadm). All X-RAID adds is software that automatically manages expansion.

Rebuilding the array and mounting the data volume is certainly possible on a standard linux PC that has mdadm and btrfs (the file system used by the NAS) installed.
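As a rough illustration of the first, non-destructive step on a Linux PC, the sketch below reads the md superblock of each candidate member with mdadm --examine. The device names are hypothetical, and treating partition 3 as the data partition is an assumption about the disk layout that should be confirmed with lsblk. The run helper is an illustrative wrapper, not part of mdadm; it only prints the commands unless DRY_RUN=0 is set.

```shell
#!/usr/bin/env bash
# Print each command instead of executing it unless DRY_RUN=0 is set,
# so the plan can be reviewed before anything touches a disk.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Read the md superblock of each candidate RAID member.
# --examine reports the array UUID, RAID level, device role and event
# count for that member; it does not assemble or modify anything.
examine_members() {
    local part
    for part in "$@"; do
        run mdadm --examine "$part"
    done
}

# Hypothetical device names: confirm the real ones with lsblk first.
# Partition 3 as the data partition is an assumption about the layout.
examine_members /dev/sdb3 /dev/sdc3
```

Comparing the event counts across members gives a first hint of how far out of sync each disk is before any assembly is attempted.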

@halbertn wrote:

However, it is possible that I may be able to recover disk3 despite it being marked FAILED.

Mar 26, 2024 08:17:57 PM Disk: Detected increasing reallocated sector count: [1967] on disk 3 (Internal)

This says ~2000 failed sectors on disk 3. There could easily be more that haven't been detected yet.

Plus you have these errors:

Mar 26, 2024 10:12:34 AM Volume: Disk test failed on disk in channel 5, model WDC_WD3003FZEX-00Z4SA0, serial WD-WCC130725037.

Mar 26, 2024 10:12:33 AM Volume: Disk test failed on disk in channel 3, model WDC_WD30EFRX-68EUZN0, serial WD-WMC4N1002183.

Mar 26, 2024 10:12:33 AM Volume: Disk test failed on disk in channel 2, model WDC_WD30EFRX-68EUZN0, serial WD-WMC4N0956058.

suggesting that disk 5 also is at risk.

I've helped quite a few people deal with failed volumes over the years. Honestly your odds of success aren't good.

BTW, did you download the full log zip file from the NAS before you powered it down?

@halbertn wrote:

Based on my preliminary research, what I need to do is clone each drive to preserve whatever data is recoverable. If I’m lucky and can access disk 3 and clone it (along with disk 1,4,5,6) and not run into any other issues, I *should* be able to rebuild/recover the raid array as I have enough drives to do so.

When you try to recover from failing disks, the recovery can stress the disks - which can push them to complete failure. The benefit of cloning is that it can limit that additional damage.

There is a downside. RAID recovery can only help recover/repair failed sectors when it can detect which sectors have failed. Cloning hides that information. A failed sector in the source will result in garbage data on the corresponding sector of the clone, and there is no way RAID recovery software can identify that the sector on the clone is bogus.

Still, in your case cloning makes sense.
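If the clone is made with GNU ddrescue, the mapfile it writes still records which areas could not be read, even though the clone itself no longer shows them. The sketch below sums the bytes marked bad ("-") in a mapfile. The bad_bytes function and the sample mapfile contents are made up for illustration and are not taken from any disk in this thread. GNU ddrescue also ships a ddrescuelog tool intended for this kind of inspection.

```shell
#!/usr/bin/env bash
# Sum the bytes that ddrescue marked as bad ("-") in a mapfile.
# Data lines have the form: <pos> <size> <status>, with hex pos and size.
bad_bytes() {
    local mapfile="$1" pos size status total=0
    while read -r pos size status; do
        case "$pos" in
            '#'*|'') continue ;;        # skip comments and blank lines
        esac
        if [ "$status" = "-" ]; then
            total=$(( total + size ))   # shell arithmetic accepts 0x... hex
        fi
    done < "$mapfile"
    echo "$total"
}

# Hypothetical sample mapfile with two unreadable 512-byte areas.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# Mapfile. Created by GNU ddrescue version 1.23
# current_pos  current_status  current_pass
0x00667C00     +               1
#      pos        size  status
0x00000000  0x00667A00  +
0x00667A00  0x00000200  -
0x00667C00  0x00000400  +
0x00668000  0x00000200  -
0x00668200  0x00010000  +
EOF
bad_bytes "$sample"   # prints 1024
rm -f "$sample"
```

The positions in the mapfile are byte offsets on the source disk, so they can later be used to judge which stripes of the array may contain garbage.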

There is a related path with similar plusses and minuses. That is to image the disks, and then work with the images. That can be done in a ReadyNAS VM or using RAID recovery software. If you have a large enough destination disk, it can hold multiple images.

@halbertn wrote:

Can I say with certainty that disk2 is useless in the context of using it for any data recovery,

It's hard to say whether disk 2 is in worse shape than disk 3 or not. The fact that the system started to resync means that disk 2 came back online after the degraded message.

It is possible that disk 2 has completely failed, but you can't conclude that yet.

@halbertn wrote:

Cloning every drive to preserve their data would require me purchasing 6 new older hd models that are compatible with the ReadyNAS 316.

No. It would be a big mistake to try and purchase old hard drive models from the HCL (Netgear's hardware compatibility list).

Current Seagate Ironwolf drives and WD Red Plus drives are all compatible with your NAS. So are all enterprise-class drives.

Avoid desktop drives and WD Red models, even if they are on the compatibility list. Most desktop drives in the 2-6 TB size range are now SMR drives that are not good choices for your NAS. Regrettably current WD Red drives are also SMR (but not Red Plus or Red Pro). These drives often have very similar model numbers to older CMR drives on the compatibility list.

Plus in recent years Netgear really didn't test all the drives they added to the HCL. Many were added based on tests of different models that Netgear believed were similar.

Bottom line here is that you can't trust the HCL, and it is best ignored. Just get Red Plus, Seagate Ironwolf, or enterprise class disks.

@halbertn wrote:

Before I make this investment, I do want to be able to get some confidence or indication that I can recover the data. What's the best way to start? I'm thinking maybe I just attempt to clone disk3, then replace it with the new clone in ReadyNAS 316.

I'd start with asking yourself how much your data is worth to you. That would establish a ceiling on how much you are willing to pay to recover it.

It is also worth asking yourself what you will do if the recovery fails. If you plan to bring the NAS back on line anyway (starting fresh), then investing in new disks wouldn't be wasted money, as you need to replace the failed disks anyway.

As far as where to start - I'd connect each drive to a PC (either with a USB adapter/dock or with SATA) and test it with WD's dashboard utility. That will give you more information on how many disks are failing. Label the disks by slot number as you remove them from the NAS.

As I mentioned above I think successful recovery will be problematic even for a professional. In addition to dealing with multiple disk failures, it sounds like you have no experience with data recovery. How much experience do you have with the linux command line interface? Have you ever worked with mdadm or btrfs using those interfaces? It is very easy to do more damage (making recovery even more difficult) if you don't really know what you are doing.

@halbertn wrote:

These hard drives are more than 5-6 years old and non enterprise. In hindsight, I should *not* have allowed the resync to occur and instead performed a backup of the NAS before resyncing the data. The resyncing of the raid array taxed disk3 resulting in its failure and breaking the whole system which I’m learning is quite common. At this point, I have lost 2 disks of my 6 hds, thus making recovery via the raid 5 configuration impossible.

Did you do something to trigger the resync? Or did it happen on its own???

FWIW, I think the lesson here is that RAID isn't enough to keep data safe - you definitely need a backup plan in place to do that. Disks can fail without warning (as can a NAS or any storage device). Waiting until there's a failure to make a backup often results in waiting too long.

Re: RN 316 - Raid 5 config, Data Volume Dead

Data is all personal/unique and important enough to attempt recovery. Not the end of the world if the data is lost, but valuable enough to try. My thinking is spend a little to do an initial but safe evaluation of my drives. If it does look possible for me to proceed with data recovery, pause and assess if I continue on my own or consult with a professional based on their price estimates.

I was unable to pull the latest logs from the RN 316 admin dashboard. Although I was able to navigate around the dashboard, each time I tried to download the logs, I was presented with a generic web service error. I don’t recall the error…I want to say it was a 503 error code. The web service was not able to respond to my request to download the logs.

Question: are you familiar enough with the internals of how RN 316 stores and manages its log? My thinking is it should have its own internal storage or memory to collect logs (and not use the hdd that make up the raid array). If so, I could remove and label all drives, power up the RN and connect to it and attempt to either download the logs from the web portal or ssh in and access the logs from the terminal. What do you think? Is this worth pursuing?

I do however, have logs from a day earlier, prior to the failure of the NAS. It’s important to note from the logs that disk3 has multiple warning reports.

Yes, the resync happened on its own. A scheduled scrub was running, which caused Disk2 to “lose sync” and that triggered the resync to automatically occur. This is what led to disk3's reported failure and then the data volume dying.

Question: since the NAS reported that disk2 was offline and in a resync state, does this imply that any data that was on this disk to preserve the data volume has been lost or become invalid? It had only been resyncing for 3 hours of the 27 hours it estimated for completion. I’m asking b/c I want to know if I can even consider disk2 as a candidate for restoring the data from the NAS.

I don’t have experience in data recovery. I am very comfortable working in a Linux environment and running command lines. I’m not experienced with mdadm or btrfs, so I would need to study up on those tools.

The total volume size was at 18TB. I do see 20+ TB drives in the market that are reasonably priced. I prefer to proceed with your suggestion for cloning each drive as an image and store them on a single hard drive before attempting the data recovery process. At least I will then have images of each drive saved and can then decide how to proceed with data recovery using the original hard drives.

Question:

- You suggested I connect each drive to a PC running Western Digital's dashboard utility to check the status of the drive. Are you referring to this? https://support-en.wd.com/app/answers/detailweb/a_id/31759/~/download%2C-install%2C-test-drive-and-u...

- Assuming the tool recognizes the connected drive from the NAS, what should I be looking for?

- I understand I can run various tests, but I’m not sure I want to run them at the risk of stressing the drives further.

Here’s my proposed plan. Please let me know what you think:

- Purchase a 20TB+ hard drive for storing images of each cloned drive. Even if data recovery is impossible, I can utilize this drive for other purposes so I’m willing to make this expense in the beginning.

- Connect disk3 (drive that was in a FAILED state) to a PC running WD dashboard.

- If the drive is recognized by the dashboard, proceed with cloning the drive.

- Question: I’m learning that ddrescue for Linux is the best tool for cloning. Assuming I get this far, I’d need to reboot the machine and open my Linux environment. Is this the right tool and env to use for cloning? Alternatively, I can setup ddrescue-gui in advance to be prepared to do a clone in a windows environment.

Thank you!

Re: RN 316 - Raid 5 config, Data Volume Dead

@halbertn wrote:

I understand I can run various tests, but I’m not sure I want to run them at the risk of stressing the drives further.

That is a risk. You could limit the test to the short self test (which normally only takes about 2 minutes). If that fails, the drive has certainly failed. Though success wouldn't mean that the drive is ok, given the brevity of the test.

You can run essentially the same test using smartctl on a linux system.
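A minimal sketch of that smartctl sequence follows. The device name is hypothetical, the run helper is an illustrative dry-run wrapper rather than part of smartmontools, and the 150 second wait is only a guess based on the "about 2 minutes" figure above.

```shell
#!/usr/bin/env bash
# Print each command instead of executing it unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Short SMART check for one drive: health summary, short self-test, result log.
smart_quick_check() {
    local dev="$1"
    run smartctl -H -A "$dev"        # overall health verdict and attribute table
    run smartctl -t short "$dev"     # start the short self-test inside the drive
    run sleep 150                    # assumed wait; the test runs in the background
    run smartctl -l selftest "$dev"  # read the self-test log for the outcome
}

# Hypothetical device name: confirm the real one with lsblk first.
smart_quick_check /dev/sdX
```

Some USB adapters need an explicit device type (for example smartctl -d sat) before they pass SMART commands through; whether yours does is something to check rather than assume.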

@halbertn wrote:

Question: are you familiar enough with the internals of how RN 316 stores and manages its log?

Many of the files in the zip are extracted from the systemd journal. There are some others: mdstat.log is a copy of /proc/mdstat, lsblk is just the output of lsblk, etc. If you can log in via ssh (or tech support mode), you can create the zip file manually with rnutil create_system_log -o </path/filename>. Use root as the username when you log in.

@halbertn wrote:

Question: since the NAS reported that disk2 was offline and in a resync state, does this imply that any data that was on this disk to preserve the data volume has been lost or become invalid? It had only been resyncing for 3 hours of the 27 hours it estimated for completion. I’m asking b/c I want to know if I can even consider disk2 as a candidate for restoring the data from the NAS.

It depends on whether you were doing a lot of other writes to the volume during the resync. If the scrub/resync is all that was happening, then the data being written to the disk would have been identical to what was already on the disk. Likely there was some other activity. But I think it is reasonable to try recovery with disk 2 if you can clone it. There is no harm in trying.

@halbertn wrote:

Question: I’m learning that ddrescue for Linux is the best tool for cloning. Assuming I get this far, I’d need to reboot the machine and open my Linux environment. Is this the right tool and env to use for cloning? Alternatively, I can setup ddrescue-gui in advance to be prepared to do a clone in a windows environment.

You would need to install ddrescue. In general, installing stuff on the NAS will require some changes to the apt config.

More info on that is here:

Or you could just boot the PC up using a linux live boot flash drive.

Re: RN 316 - Raid 5 config, Data Volume Dead

@StephenB

During the scrub/resync process, there should have been 0 writes to the volume. I wasn't accessing the drive or writing any new data to it. It should have been idling with the exception of whatever operation a scrub or sync would have been doing. From your answer and based on the overall health of the drive, Disk2 could be a likely candidate for recovering the data volume.

Question:

1. If my intention is to clone an image of every drive from my NAS onto a single harddrive, which filesystem should I put on this new drive that will be storing my images? I'm assuming ext4 as it only needs to store the images and be accessible in Linux (and windows).

2. Let's assume I'm able to access each drive and clone an image of them. Doesn't this imply that if I were to re-insert these drives back into their original slots in the RN 316 enclosure, then *maybe* the data volume may just come up and be readable?

3. Suppose I can only clone 5 of the 6 drives because 1 of the drives is failing to be recognized or fails to be cloned. Then same question as 2 but with the 5 drives.

4. Assuming 2 or more drives are inaccessible, then I can declare that recovery on my own is impossible? Maybe I consider professional help, but they are unlikely to succeed considering that the drives have failed.

5. Suppose I do have 5 or 6 images cloned, and I'm not able to access the data volume in the RN 316 enclosure using the original drives. Then I attempt to access the data volume by rebuilding the raid array using the cloned images in a linux environment as a next step?

Thank you!

PS. How are you performing an inline response to my post? I can't find the option to do that.

Re: RN 316 - Raid 5 config, Data Volume Dead

@halbertn wrote:

PS. How are you performing an inline response to my post? I can't find the option to do that.

I use the quote tool to get a full quote of the original post, and then edit it. This is a bit buggy - I do need to shift to html sometimes to clean it up. It's tedious in the beginning, but like most things gets easier/faster with practice.

@halbertn wrote:

Question:

1. If my intention is to clone an image of every drive from my NAS onto a single harddrive, which filesystem should I put on this new drive that will be storing my images? I'm assuming ext4 as it only needs to store the images and be accessible in Linux (and windows).

You want something that can handle a 3 TB drive image, so ext4 or ntfs could both work. ext4 is probably the right option.
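Besides per-file limits (FAT32, for example, cannot hold a file larger than 4 GiB), the destination also needs enough free space for all the images together. The sketch below is a simple check under round, illustrative numbers: images_fit is a hypothetical helper, and the sizes are nominal figures rather than measurements from these disks.

```shell
#!/usr/bin/env bash
# Return success if the summed image sizes fit in the available bytes.
images_fit() {
    local avail="$1" total=0 size
    shift
    for size in "$@"; do
        total=$(( total + size ))
    done
    echo "need=$total avail=$avail"
    [ "$total" -le "$avail" ]
}

# Round illustrative numbers: six nominal 3 TB disks, 19 TB free.
# In practice, read the real values with:
#   blockdev --getsize64 /dev/sdX        (exact size of a source disk)
#   df --output=avail -B1 /mnt/images    (free bytes on the destination)
three_tb=3000000000000
if images_fit 19000000000000 \
        $three_tb $three_tb $three_tb $three_tb $three_tb $three_tb; then
    echo "images fit"
else
    echo "not enough space"
fi
```

Filesystem overhead means a drive sold as 20 TB exposes less than that as free space, so it is worth running the check against the formatted drive rather than the label.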

@halbertn wrote:

2. Let's assume I'm able to access each drive and clone an image of them. Doesn't this imply that if I were to re-insert these drives back into their original slots in the RN 316 enclosure, then *maybe* the data volume may just come up and be readable?

Likely you would also need to forcibly assemble the RAID array (since it will be out of sync). BTRFS repair might also be needed.

But if you had 6 clones (instead of images), you could put these in the NAS and do those steps in the NAS.

@halbertn wrote:

3. Suppose I can only clone 5 of the 6 drives because 1 of the drives is failing to be recognized or fails to be cloned. Then same question as 2 but with the 5 drives.

You'll need at least 5 drives, as RAID can reconstruct the 6th from the remaining 5. Though if there are unreadable sectors on the source drives, that reconstruction will have some errors.
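To make the reconstruction concrete: RAID 5 parity is the XOR of the data chunks in a stripe, so any one missing chunk is the XOR of the other five. The toy sketch below uses single hypothetical byte values in place of whole chunks, and also shows how one silently bad byte on a surviving disk corrupts the rebuilt value.

```shell
#!/usr/bin/env bash
# XOR all arguments together and print the result as a decimal number.
xor_all() {
    local acc=0 v
    for v in "$@"; do
        acc=$(( acc ^ v ))
    done
    echo "$acc"
}

# One stripe on a 6-disk RAID 5: five data bytes plus one parity byte.
# The byte values are hypothetical.
d1=0x3C d2=0xA5 d3=0x0F d4=0x5A d5=0xF0
parity=$(xor_all "$d1" "$d2" "$d3" "$d4" "$d5")

# The disk holding d3 is lost: rebuild it from the five survivors.
rebuilt=$(xor_all "$d1" "$d2" "$d4" "$d5" "$parity")
[ "$rebuilt" -eq $(( d3 )) ] && echo "clean survivors: d3 recovered"

# Same rebuild, but d5 came from an unreadable sector and was cloned as 0.
rebuilt_bad=$(xor_all "$d1" "$d2" "$d4" 0 "$parity")
[ "$rebuilt_bad" -ne $(( d3 )) ] && echo "bad survivor: rebuilt d3 is wrong"
```

The second case is why bad sectors on the remaining disks matter: with one disk already missing there is no redundancy left to notice or correct the error.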

@halbertn wrote:

4. Assuming 2 or more drives are inaccessible, then I can declare that recovery on my own is impossible?

Yes.

@halbertn wrote:

Maybe I consider professional help, but they are unlikely to succeed considering that the drives have failed.

Likely true. Forensic recovery tools might be able to read sectors that ddrescue can't. Their recovery software might also be able to get some things back.

@halbertn wrote:

5. Suppose I do have 5 or 6 images cloned, and I'm not able to access the data volume in the RN 316 enclosure using the original drives. Then I attempt to access the data volume by rebuilding the raid array using the cloned images in a linux environment as a next step?

Yes, though you could just directly move to working with the images. @Sandshark's posts explain how to mount the images as disks in a ReadyNAS VM. You can substitute a generic Linux VM for that also.
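For the generic Linux route, one possible shape of the process is sketched below: attach each image as a loop device, force-assemble the array from five members, and mount the volume read-only. The image names, loop device numbers, /dev/md127, the mount point and the use of partition 3 are all assumptions for illustration, and the exact mdadm flags should be checked against mdadm(8) on the system used. Forced assembly updates the md superblocks, so the images themselves get modified. The run helper is an illustrative dry-run wrapper that only prints commands unless DRY_RUN=0 is set.

```shell
#!/usr/bin/env bash
# Print each command instead of executing it unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Attach each image as a loop device. --partscan exposes its partitions
# as /dev/loopNp1, /dev/loopNp2, ... and --show prints the device chosen.
attach_images() {
    local img
    for img in "$@"; do
        run losetup --find --show --partscan "$img"
    done
}

# Force-assemble a degraded array from the listed members, start it
# read-only so no resync begins, then mount the filesystem read-only.
assemble_and_mount() {
    local md="$1" mnt="$2"
    shift 2
    run mdadm --assemble --force --run --readonly "$md" "$@"
    run mount -o ro "$md" "$mnt"
}

# Hypothetical names: which five images to use is a judgment call, and the
# loop numbers must be taken from what losetup actually prints.
attach_images disk1.img disk2.img disk4.img disk5.img disk6.img
assemble_and_mount /dev/md127 /mnt/recovery \
    /dev/loop0p3 /dev/loop1p3 /dev/loop2p3 /dev/loop3p3 /dev/loop4p3
```

Reviewing the printed plan first, and only then rerunning with DRY_RUN=0 as root, keeps a typo from turning into a write against the wrong device.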

Re: RN 316 - Raid 5 config, Data Volume Dead

@halbertn wrote:

Question: are you familiar enough with the internals of how RN 316 stores and manages its log? My thinking is it should have its own internal storage or memory to collect logs (and not use the hdd that make up the raid array). If so, I could remove and label all drives, power up the RN and connect to it and attempt to either download the logs from the web portal or ssh in and access the logs from the terminal. What do you think? Is this worth pursuing?

ReadyNAS units store absolutely nothing on the flash that's not there from the factory or an OS update. They run from and put all data, including configuration data, on the drives. It's a separate partition of the drives -- one that is replicated on them all in RAID1. Removing the drives effectively returns the unit to factory configuration.

If your OS partition has become read-only because of the drive errors, that may be the source of your error in creating the log .zip.

Another way to get the log zip is using SSH command. Here is what I run daily on my RD5200 converted to OS6:

rnutil create_system_log -o /data/logs/log_RD5200A_$(date +%Y%m%d%H%M%S).zip

You, of course, need to choose your own name for the file, including a directory you currently have in place of "logs". Since you have no accessible volume on which to put it, you'll have to copy it to a mounted drive (USB or network) or use rsync to get it where you can access it. And if the OS partition is read-only, you'll have to make the target directory one that's on a mounted device that's not read-only.

Re: RN 316 - Raid 5 config, Data Volume Dead

Hello @StephenB

@halbertn wrote:

2. Let's assume I'm able to access each drive and clone an image of them. Doesn't this imply that if I were to re-insert these drives back into their original slots in the RN 316 enclosure, then *maybe* the data volume may just come up and be readable?

Likely you would also need to forcibly assemble the RAID array (since it will be out of sync). BTRFS repair might also be needed.

But if you had 6 clones (instead of images), you could put these in the NAS and do those steps in the NAS.

Ok. I think I understand: no matter what, some repair or assembly of the raid would have to take place. Therefore, I need to perform this against the new clones (not the original hd as they won't be able to withstand the operation without risk of failure).

Question 1: If I load the images of each clone using a ReadyNAS VM, then would the ReadyNAS VM be able to assemble the raid array and possibly repair the BTRFS filesystem? I assume this will require the VM to have to write to each image to perform these operations, which it should have permission to do? (For some reason, I'm thinking images are readonly, but I think I'm wrong... as long as the write permissions are set, it should be okay)

Question 2: I'm researching how to clone disks using ddrescue. I'm learning ddrescue performs multiple passes against the hd when cloning. Therefore, one recommendation is to split the clone into multiple phases:

# first phase - skip bad sectors

sudo ddrescue --verbose --idirect --no-scrape [source] [dest] disk.logfile

# retry bad sectors up to 3 times

sudo ddrescue --verbose --idirect -r3 --no-scrape [source] [dest] disk.logfile

With this last run as optional:

# last run - removing 'no-scrape'

sudo ddrescue --verbose --idirect -r3 [source] [dest] disk.logfile

What do you think? My use-case may be different as I am trying to recover as much data to rebuild the raid-array after the clone operation. How would you go about performing the clone for each disk?

Thank you!

Re: RN 316 - Raid 5 config, Data Volume Dead

If you use a VM, the images must be write enabled -- VirtualBox requires that they are. They will behave like real drives in a real NAS, except be slower. So they, too, are likely to be out of sync and require a forced re-assembly.

Re: RN 316 - Raid 5 config, Data Volume Dead

Recap: I had 6hdd in a raid5 configuration that failed and reported volume is dead.

Cause of failure: while running a scrub, disk2 fell out of sync forcing the raid array to resync it. During resync, disk3 failed.

Update:

I’ve begun the process of cloning each of my 6 drives to an image using ddrescue. Prior to performing the clone, I ran a smart test to get the status of each drive.

Disk 1, 4, 6 - test passed

Disk 2, 3, 5 - test failed

Assuming I would be successful in cloning disk1,4,6, I proceeded to begin cloning the failed disks first.

Disk 2 - clone was successful! No errors

Disk 3 - Stopped cloning after reaching 66% due to too many errors. Gave up on this disk.

Disk 5 - cloned 99.99% of drive.

Here is the output from ddrescue on disk5:

root@mint:~# ddrescue --verbose --idirect --no-scrape /dev/sdb /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/hdd_images/disk5.log /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/disk5.log

GNU ddrescue 1.23

About to copy 3000 GBytes from '/dev/sdb' to '/media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/hdd_images/disk5.log'

Starting positions: infile = 0 B, outfile = 0 B

Copy block size: 128 sectors Initial skip size: 58624 sectors

Sector size: 512 Bytes

Press Ctrl-C to interrupt

ipos: 6716 kB, non-trimmed: 0 B, current rate: 8874 B/s

opos: 6716 kB, non-scraped: 3072 B, average rate: 104 MB/s

non-tried: 0 B, bad-sector: 1024 B, error rate: 170 B/s

rescued: 3000 GB, bad areas: 2, run time: 8h 28s

pct rescued: 99.99%, read errors: 3, remaining time: 1s

time since last successful read: 0s

Finished

root@mint:~# ddrescue --verbose --idirect -r3 --no-scrape /dev/sdb /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/hdd_images/disk5.log /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/disk5.log

GNU ddrescue 1.23

About to copy 3000 GBytes from '/dev/sdb' to '/media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/hdd_images/disk5.log'

Starting positions: infile = 0 B, outfile = 0 B

Copy block size: 128 sectors Initial skip size: 58624 sectors

Sector size: 512 Bytes

Press Ctrl-C to interrupt

Initial status (read from mapfile)

rescued: 3000 GB, tried: 4096 B, bad-sector: 1024 B, bad areas: 2

Current status

ipos: 6716 kB, non-trimmed: 0 B, current rate: 0 B/s

opos: 6716 kB, non-scraped: 3072 B, average rate: 0 B/s

non-tried: 0 B, bad-sector: 1024 B, error rate: 256 B/s

rescued: 3000 GB, bad areas: 2, run time: 13s

pct rescued: 99.99%, read errors: 6, remaining time: n/a

time since last successful read: n/a

Finished

root@mint:~# ddrescue --verbose --idirect -r3 /dev/sdb /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/hdd_images/disk5.log /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/disk5.log

GNU ddrescue 1.23

About to copy 3000 GBytes from '/dev/sdb' to '/media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/hdd_images/disk5.log'

Starting positions: infile = 0 B, outfile = 0 B

Copy block size: 128 sectors Initial skip size: 58624 sectors

Sector size: 512 Bytes

Press Ctrl-C to interrupt

Initial status (read from mapfile)

rescued: 3000 GB, tried: 4096 B, bad-sector: 1024 B, bad areas: 2

Current status

ipos: 6716 kB, non-trimmed: 0 B, current rate: 0 B/s

opos: 6716 kB, non-scraped: 0 B, average rate: 0 B/s

non-tried: 0 B, bad-sector: 4096 B, error rate: 256 B/s

rescued: 3000 GB, bad areas: 1, run time: 1m 4s

pct rescued: 99.99%, read errors: 30, remaining time: n/a

time since last successful read: n/a

Finished

Here is its map log file:

# Mapfile. Created by GNU ddrescue version 1.23

# Command line: ddrescue --verbose --idirect -r3 /dev/sdb /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/hdd_images/disk5.log /media/mint/1d4d6331-7e59-4156-824d-16f53d438b19/disk5.log

# Start time: 2024-04-02 13:25:45

# Current time: 2024-04-02 13:26:36

# Finished

# current_pos current_status current_pass

0x00667E00 + 3

# pos size status

0x00000000 0x00667000 +

0x00667000 0x00001000 -

0x00668000 0x2BAA0E0E000 +
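As a rough aid for reading the mapfile above, the sketch below parses its block list and reports how much of the disk was left unread and where. The function names are my own, and it assumes the standard mapfile layout shown here: comment lines start with `#`, the first data line is the current-position line, and each following line is `pos size status` in hex, with `+` meaning rescued.

```python
SECTOR_SIZE = 512  # matches "Sector size: 512 Bytes" in the ddrescue output

# Block list copied from the mapfile in the post (comment header shortened).
MAPFILE = """\
# Mapfile. Created by GNU ddrescue version 1.23
# current_pos current_status current_pass
0x00667E00 + 3
# pos size status
0x00000000 0x00667000 +
0x00667000 0x00001000 -
0x00668000 0x2BAA0E0E000 +
"""


def parse_mapfile(text):
    """Return a list of (pos, size, status) blocks from a ddrescue mapfile."""
    rows = [line.split() for line in text.splitlines()
            if line.strip() and not line.startswith("#")]
    # The first non-comment line is the current-position line, not a block.
    return [(int(r[0], 16), int(r[1], 16), r[2]) for r in rows[1:]]


def summarize(blocks):
    """Return (total_bytes, [(pos, size), ...] for every block not rescued)."""
    total = sum(size for _, size, _ in blocks)
    unread = [(pos, size) for pos, size, status in blocks if status != "+"]
    return total, unread


if __name__ == "__main__":
    total, unread = summarize(parse_mapfile(MAPFILE))
    print(f"image size: {total} bytes")
    for pos, size in unread:
        first = pos // SECTOR_SIZE
        last = first + size // SECTOR_SIZE - 1
        print(f"unread: {size} bytes at offset {pos} (sectors {first}-{last})")
```

For this mapfile the only unread area is a single 4096-byte run starting 6,713,344 bytes into the disk, i.e. eight 512-byte sectors.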

Question: what should I do with this drive or image? Should I attempt to fix the image by mounting it and running chkdsk to fix it? Or should I proceed with rebuilding the raid array as is after I have finished cloning disk 1,4,6?

Thank you!

Re: RN 316 - Raid 5 config, Data Volume Dead

@halbertn wrote:

Question: what should I do with this drive or image? Should I attempt to fix the image by mounting it and running chkdsk to fix it? Or should I proceed with rebuilding the raid array as is after I have finished cloning disk 1,4,6?

I don't see any point to chkdsk, as there are no errors in the image (just some sectors that failed to copy).

So I would continue cloning, and then attempt to assemble the RAID array.

Re: RN 316 - Raid 5 config, Data Volume Dead

I have been able to successfully clone 100% of disk1,2,4,6.

Disk5 is at 99.99% with a missing 4k block.

I have my 5 of 6 disk images and am ready to proceed with raid reassembly. The simplest way to re-assemble the raid will be to use a ReadyNAS VM in a Linux environment.

Before I proceed I think I should do the following:

- purchase another 20TB hard drive

- Backup the 5 cloned images onto this new drive.

My reasoning is because I know the raid re-assembly will write to each image. By having a backup, I can revert to them if I ever need to and never touch my physical hard drives.
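One way to confirm that a backup copy really is identical to the image it was made from is to compare checksums. The sketch below is a minimal illustration using SHA-256; the function names are hypothetical, and the images are read in chunks because a 3 TB file cannot be loaded into memory at once.

```python
import hashlib


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in fixed-size chunks so a huge image never has to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read() until it returns b"".
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_matches(original, backup):
    """True when both files have the same SHA-256 digest."""
    return sha256_of(original) == sha256_of(backup)
```

Recording the digest of each image before the VM ever touches it also makes it easy to tell later whether a given copy is still pristine.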

Question: At this point, what do you think is my success rate proceeding to re-assemble the raid array?

Question: Are there any other unknowns that may curb my success?

One item that is lurking in the back of my mind is that the whole raid failed when attempting to resync disk2, therefore questioning the data integrity of disk2. However,

@StephenB wrote:

It depends on whether you were doing a lot of other writes to the volume during the resync. If the scrub/resync is all that was happening, then the data being written to the disk would have been identical to what was already on the disk. Likely there was some other activity. But I think it is reasonable to try recovery with disk 2 if you can clone it. There is no harm in trying.

I do want to pause a moment to assess my progress and chance of success with the new information I have gathered from reaching a milestone in cloning 5/6 drives. I'm about to invest in another 20TB drive to back up my images, so I want to reassess my odds at this point. Better yet, if there are any additional tools that I can run to gather more data to assess my success rate, now would be the best time to run them.

@StephenB wrote:

I've helped quite a few people deal with failed volumes over the years. Honestly your odds of success aren't good.

What do you think @StephenB...Have my odds gone up now?

Thank you!

Re: RN 316 - Raid 5 config, Data Volume Dead

@halbertn wrote:

What do you think @StephenB...Have my odds gone up now?

If the 5 successful clones only lost one block on disk 5, then the odds have gone up.

Whether that missing block is a real issue or not depends on what sector it is. If it's free space, then it would have no effect whatsoever.
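A first step in judging which sector it is would be to see which partition the unreadable block falls in. The sketch below maps a byte offset to a partition, given a table of start sectors and sizes. The partition table here is HYPOTHETICAL (placeholder values, not read from these disks); the real layout would have to come from a partition tool such as `fdisk -l` run against the image. The offset used is the start of the bad block in the mapfile posted earlier.

```python
SECTOR_SIZE = 512

# HYPOTHETICAL layout for illustration only: (name, start_sector, sector_count).
# Replace with the values reported for the real image.
EXAMPLE_PARTITIONS = [
    ("part1", 64, 8_388_608),
    ("part2", 8_388_672, 1_048_576),
    ("part3", 9_437_248, 5_851_092_000),
]


def locate(offset_bytes, partitions, sector_size=SECTOR_SIZE):
    """Return (name, byte offset inside that partition), or None if outside all."""
    sector = offset_bytes // sector_size
    for name, start, count in partitions:
        if start <= sector < start + count:
            return name, offset_bytes - start * sector_size
    return None


if __name__ == "__main__":
    bad_block_start = 0x00667000  # from the disk5 mapfile
    print(locate(bad_block_start, EXAMPLE_PARTITIONS))
```

If the real table puts the offset outside the data partition, the block is not part of the RAID 5 data array at all; if it lands inside, the next question is whether the filesystem was using that space.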

One thing to consider is that after the dust settles you could use the 2x20TB drives in the RN316, giving you 20 TB of RAID-1 storage.

Re: RN 316 - Raid 5 config, Data Volume Dead

You can always make another image of the 4 healthy drives, so backing up those images only saves you time in case you need to start over.

Re: RN 316 - Raid 5 config, Data Volume Dead

@Sandshark wrote:

You can always make another image of the 4 healthy drives, so backing up those images only saves you time in case you need to start over.

FWIW, it's not clear how healthy they are, given that several failed the self test (per the logs).

That said, I agree that disk 5 is the most important one to back up, so if there is enough storage on the PC for that image, @halbertn could skip the others.

Though 2x20TB is a reasonable way to start over on the NAS, and @halbertn likely will also need storage to offload the files.

Re: RN 316 - Raid 5 config, Data Volume Dead

@StephenB wrote:

@Sandshark wrote:

You can always make another image of the 4 healthy drives, so backing up those images only saves you time in case you need to start over.

FWIW, it's not clear how healthy they are, given that several failed the self test (per the logs).

That said, I agree that disk 5 is the most important one to back up, so if there is enough storage on the PC for that image, @halbertn could skip the others.

Since my plan is to use VirtualBox to run the ReadyNAS OS VM, I will need the extra hard drive storage so that I can convert each .img into a .vdi and store them. Unfortunately, VirtualBox can't attach a raw .img directly; it needs one of its own disk formats, such as .vdi.

Though 2x20TB is a reasonable way to start over on the NAS, and @halbertn likely will also need storage to offload the files.

Yes, I like this idea. I've already ordered my second drive.

Re: RN 316 - Raid 5 config, Data Volume Dead

@StephenB @Sandshark

I finished converting all my .img files to .vdi so that I could attach them in VirtualBox. I assigned each drive as follows:

ReadyNasVM.vdk - SATA port 0

Disk1.vdi - SATA port 1

Disk2.vdi - SATA port 2

Disk4.vdi - SATA port 4

Disk5.vdi - SATA port 5

Disk6.vdi - SATA port 6

I intentionally skip SATA port3 as that should be the slot at which disk3 went in, but my disk3 clone was bad, so I'm ignoring that slot for now.

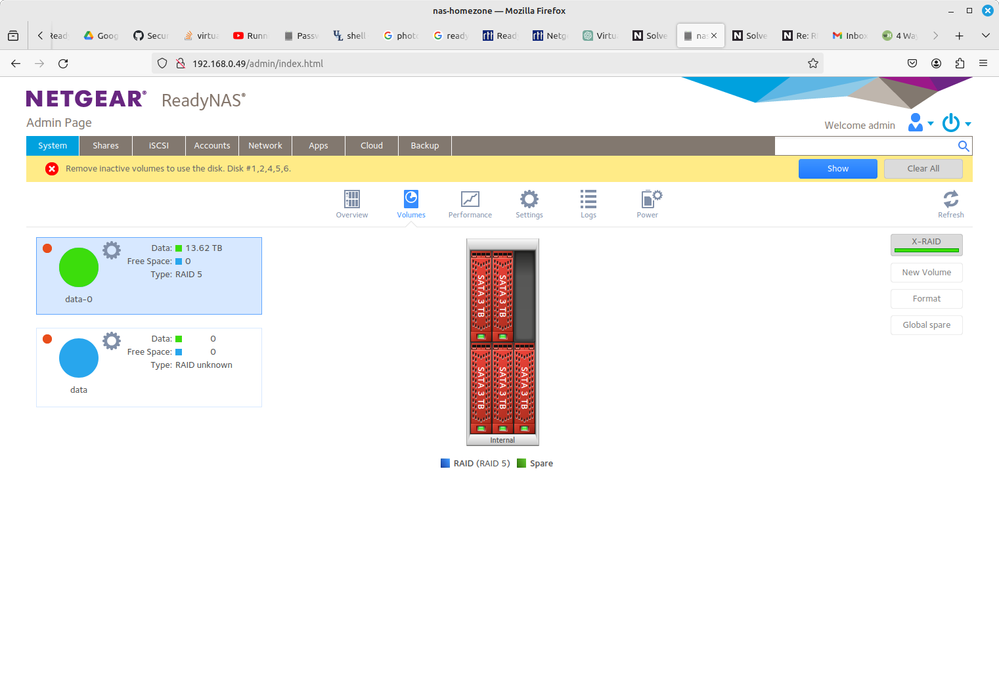

I booted up the VM in VirtualBox. However, my RAID 5 volume is not recognized. I have an error stating "Remove inactive volumes to use the disk. Disk #1, 2, 4, 5, 6". I also have two data volumes, both of which are inactive.

I'm including a screenshot below my post. I also have a zip of the latest logs, but I can't attach zips to this post. If you can point me to which log you'd like to review, I can include that in my next post.

Any ideas on what went wrong? Or am I missing a step to rebuild the RAID array? (I assumed ReadyNAS would do it automatically on boot.)

Re: RN 316 - Raid 5 config, Data Volume Dead

@halbertn wrote:

I'm including a screenshot below my post. I also have a zip of the latest logs, but I can't attach zips to this post. If you can point me to which log you'd like to review, I can include that in my next post.

Likely the volume is out of sync.

The best approach is to get me the full log zip. Do that in a private message (PM) using the envelope link in the upper right of the forum page. Put the log zip into cloud storage, and include a link in the PM. Make sure the permissions are set so anyone with the link can download.

Re: RN 316 - Raid 5 config, Data Volume Dead

@StephenB sent you a DM with a link to the logs.zip. Tried to also include the message below, but I don't think it was formatted properly. Including it here in case you have trouble reading it:

If you look at systemd-journal.log beginning at line: 3390, you'll see below

Apr 06 19:24:02 nas-homezone kernel: md: bind<sdd3>

Apr 06 19:24:02 nas-homezone kernel: md: bind<sdf3>

Apr 06 19:24:02 nas-homezone kernel: md: bind<sde3>

Apr 06 19:24:02 nas-homezone kernel: md: bind<sdc3>

Apr 06 19:24:02 nas-homezone kernel: md: bind<sdb3>

Apr 06 19:24:02 nas-homezone kernel: md/raid:md127: device sdb3 operational as raid disk 0

Apr 06 19:24:02 nas-homezone kernel: md/raid:md127: device sde3 operational as raid disk 5

Apr 06 19:24:02 nas-homezone kernel: md/raid:md127: device sdf3 operational as raid disk 4

Apr 06 19:24:02 nas-homezone kernel: md/raid:md127: device sdd3 operational as raid disk 3

Apr 06 19:24:02 nas-homezone kernel: md/raid:md127: allocated 6474kB

Apr 06 19:24:02 nas-homezone start_raids[1295]: mdadm: failed to RUN_ARRAY /dev/md/data-0: Input/output error

Apr 06 19:24:02 nas-homezone start_raids[1295]: mdadm: Not enough devices to start the array.

Apr 06 19:24:02 nas-homezone systemd[1]: Started MD arrays.

Apr 06 19:24:02 nas-homezone systemd[1]: Reached target Local File Systems (Pre).

Apr 06 19:24:02 nas-homezone systemd[1]: Reached target Swap.

Apr 06 19:24:02 nas-homezone systemd[1]: Starting udev Coldplug all Devices...

Apr 06 19:24:02 nas-homezone kernel: md/raid:md127: not enough operational devices (2/6 failed)

Apr 06 19:24:02 nas-homezone kernel: RAID conf printout:

Apr 06 19:24:02 nas-homezone kernel: --- level:5 rd:6 wd:4

Apr 06 19:24:02 nas-homezone kernel: disk 0, o:1, dev:sdb3

Apr 06 19:24:02 nas-homezone kernel: disk 3, o:1, dev:sdd3

Apr 06 19:24:02 nas-homezone kernel: disk 4, o:1, dev:sdf3

Apr 06 19:24:02 nas-homezone kernel: disk 5, o:1, dev:sde3

Notice that sdc3 is not included in the RAID array. The device sdc matches disk2.vdi, which was a clone of disk2. If you recall, disk2 was the drive that fell out of sync in the original ReadyNAS hardware. This forced a resync against disk2, which led to disk3 failing and the volume dying.

I wonder if this means disk2 is also bad and unusable for rebuilding the array?
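The observation about sdc3 can be checked mechanically. The sketch below is a rough illustration (the function name and the trimmed log excerpt are mine, with timestamps and hostname removed): it collects which partitions the kernel bound, which ones it reported as operational and in which RAID slot, and what is left over. The slot count of 6 comes from the `rd:6` field in the printout.

```python
import re

# Kernel lines from the journal excerpt, with timestamp and hostname stripped.
LOG = """\
md: bind<sdd3>
md: bind<sdf3>
md: bind<sde3>
md: bind<sdc3>
md: bind<sdb3>
md/raid:md127: device sdb3 operational as raid disk 0
md/raid:md127: device sde3 operational as raid disk 5
md/raid:md127: device sdf3 operational as raid disk 4
md/raid:md127: device sdd3 operational as raid disk 3
md/raid:md127: not enough operational devices (2/6 failed)
"""


def summarize_md(log, raid_devices=6):
    """Return (operational {dev: slot}, empty slots, bound-but-unused devices)."""
    bound = set(re.findall(r"md: bind<(\w+)>", log))
    operational = {
        dev: int(slot)
        for dev, slot in re.findall(
            r"device (\w+) operational as raid disk (\d+)", log)
    }
    empty_slots = sorted(set(range(raid_devices)) - set(operational.values()))
    unused = sorted(bound - set(operational))
    return operational, empty_slots, unused


if __name__ == "__main__":
    operational, empty_slots, unused = summarize_md(LOG)
    print("operational:", operational)
    print("empty RAID slots:", empty_slots)
    print("bound but not operational:", unused)
```

On these lines it reports slots 1 and 2 as empty and sdc3 as bound but not running, which agrees with the `wd:4` in the printout. The excerpt alone does not say why sdc3 was rejected; comparing the event counters that `mdadm --examine` shows for each member is the usual way to find out.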