Forum Discussion

nickjames

May 13, 2017 · Luminary

Volume operations causing slowness across SMB

Greetings!

I'm trying to understand the behavior I'm experiencing, whether or not it's expected and, most importantly, how I can improve it.

We are a graphic design shop. We use a lot of Adobe Illustrator files (*.ai, *.eps, *.pdf, etc.) that range from just a few hundred KB all the way up to 4GB per file.

The problem occurs when we open those files in Illustrator, edit them and save them back to the NAS. I understand that saving takes time even when the file is on the local machine. However, occasionally while one user is saving back to the NAS, other users of the NAS report slower than usual file/directory access (SMB). Once the file finishes saving, the issue goes away.

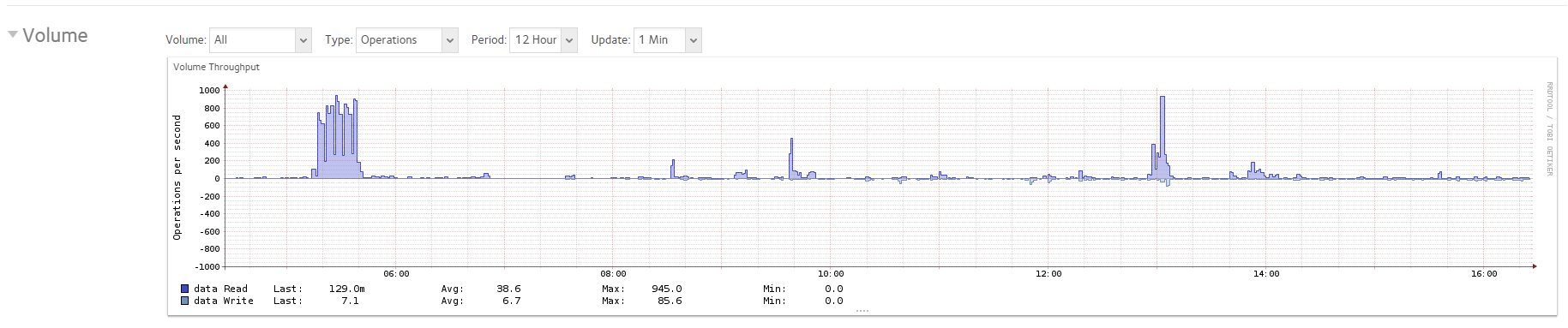

When I look at the performance graphs in the web UI of the NAS, the average during business hours is probably under 200 operations per second, with occasional spikes of 500-600 operations per second maybe 2-3 times a day, if that. I would not expect this to be considered high utilization.

I have snapshots occurring only twice a day (12am and 12pm), and the users are not complaining about slowness at those times.

That said, here is a screenshot from the last occurrence when this happened. Business hours are between 7am-5pm so anything outside of this timeframe is more than likely a backup of some sort. I'm specifically looking at what is going on just before 10am.

→ Do you think the NAS is really being pushed? I don't but I need to be sure.

→ How can I improve this experience?

Thanks in advance!

Nick

22 Replies

- jak0lantash (Mentor)

A lot of small files leads to a lot of "access". Two snapshots per day may lead to fragmentation, therefore even more access. You may want to look at a faster RAID level, such as RAID10, or SSDs. Also look at balance and defrag on your volume.

600 IOPS is significant on a RAID5 volume of 6 mechanical HDDs.
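A rough back-of-the-envelope check of that statement can be sketched as follows. All the numbers here are assumptions, not measurements from this NAS: roughly 80 random IOPS per 7200 rpm disk, a 50/50 read/write mix, and the common rule-of-thumb write penalties of 4 for RAID5 and 2 for RAID10. Caching and sequential access can push real figures well above this estimate.

```python
def effective_iops(disks, iops_per_disk, write_fraction, write_penalty):
    """Rule-of-thumb estimate of random IOPS a RAID volume can sustain.

    Each host write costs `write_penalty` disk operations,
    each host read costs one.
    """
    raw = disks * iops_per_disk
    cost_per_host_io = (1 - write_fraction) + write_fraction * write_penalty
    return raw / cost_per_host_io


# Assumed values: 6 disks, ~80 IOPS each, half of the operations are writes.
raid5_estimate = effective_iops(6, 80, 0.5, write_penalty=4)
raid10_estimate = effective_iops(6, 80, 0.5, write_penalty=2)

print(f"RAID5  estimate: {raid5_estimate:.0f} IOPS")
print(f"RAID10 estimate: {raid10_estimate:.0f} IOPS")
```

Under these assumptions, spikes of 500-600 operations per second are well above what the spindles can serve at random, which is consistent with other users seeing slow access during those spikes.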

- Retired_Member

For SMB connections there is an app, "SMB plus", which could help avoid fragmentation from the start, when your users write files to the volume. Just make sure you set the "Preallocation" option to "enable" after the installation is complete.

The benefit would be faster reading of files during users' access and faster defragmentation operations in general.

- jak0lantash (Mentor)

I don't think this would help with fragmentation due to snapshots, and it won't help reduce access time either. But it's worth a try to measure the difference in user experience.

- Retired_Member

Very well put, jak0lantash. However, I got the impression, that user experience is key here.

Together wit a chance in current snapshot policy, nickjames, it could make a significant difference over time. I recommend choosing different times for the snapshots. Or better (assuming there are no night shifts), I would take only one snapshot at the very end of your business day, which could be 24:00. And don't take them on weekends (assuming your users are not working through the weekends, I hope :-)

- Retired_Member

Sorry, in my earlier post "wit a chance" should read "with a change"

- jak0lantash (Mentor)

This is expected. LACP is good for multiple devices.

To determine which Ethernet link to send the packet through, LACP calculates a hash. Here you chose L2+L3. Because you're transferring data from a single client to a single NAS, the source and destination addresses are always the same, therefore the hash is always the same, therefore the Ethernet link used is always the same. That's why you're capped at the throughput of a single link.
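The behavior described above can be illustrated with a minimal sketch. This is a simplified stand-in for an L2+L3 hash, not the actual algorithm used by the NAS or the switch; the function name `pick_link` and all MAC and IP addresses are made up for the example.

```python
import ipaddress


def pick_link(src_mac, dst_mac, src_ip, dst_ip, n_links):
    """Pick a link index from MAC and IP addresses (illustrative L2+L3 hash)."""
    mac_bits = int(src_mac.replace(":", ""), 16) ^ int(dst_mac.replace(":", ""), 16)
    ip_bits = int(ipaddress.ip_address(src_ip)) ^ int(ipaddress.ip_address(dst_ip))
    h = (mac_bits ^ ip_bits) & 0xFFFFFFFF
    # Fold the high bits down so they influence the result too.
    h ^= h >> 16
    h ^= h >> 8
    return h % n_links


NAS_MAC, NAS_IP = "00:11:22:33:44:55", "192.168.1.10"  # hypothetical NAS

# One client talking to one NAS: the inputs never change, so neither does the link.
single = {
    pick_link("aa:bb:cc:00:00:01", NAS_MAC, "192.168.1.101", NAS_IP, 2)
    for _ in range(1000)
}
print("links used by one client:", single)

# Several clients: each gets its own fixed link, so load can spread across links.
for i in range(1, 5):
    link = pick_link(f"aa:bb:cc:00:00:0{i}", NAS_MAC, f"192.168.1.{100 + i}", NAS_IP, 2)
    print(f"client {i} -> link {link}")
```

However many packets the single client sends, the set of links it uses has exactly one element, which is why a single-client test never exceeds the speed of one link.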

If you want to test the gain in throughput, you need to test from multiple clients simultaneously.

- StephenB (Guru - Experienced User)

jak0lantash wrote:

> This is expected. LACP is good for multiple devices.
>
> To determine which Ethernet link to send the packet through, LACP calculates a hash. Here you chose L2+L3. Because you're transferring data from a single client to a single NAS, the source and destination addresses are always the same, therefore the hash is always the same, therefore the Ethernet link used is always the same. That's why you're capped at the throughput of a single link.
>
> If you want to test the gain in throughput, you need to test from multiple clients simultaneously.

Exactly so. LACP is working as designed. If you aggregate 1 Gbps links, the maximum flow to each client connection through the bond is intentionally limited to 1 Gbps. That prevents packet loss at layers 1 and 2.

Your original use case was that when one "primary" user was copying a large file, all other users were blocked until that was complete. LACP should reduce how often that happens, though for each of the other users there's still a 50-50 chance that LACP's hash will put them on the same NIC as the "primary" user creating the congestion. For those users the bandwidth starvation will still happen.

You could try a different aggregation mode (with NAS using ALB perhaps) - that has different downsides, but might work out better.

10 gigabit Ethernet in the server is the best technical answer, but that requires at least a 52x NAS (and of course a 10 gigabit switch).

- jak0lantash (Mentor)

Also, the throughput is only as good as the slowest link in the path. So if your test PC is connected to switch A via a single Ethernet cable, you won't see more than 1 Gbps (so less than 125 MB/s once overhead is subtracted).
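To put a number on that ceiling, here is a small sketch of the arithmetic. It assumes a standard 1500-byte MTU, TCP over IPv4 without options (1460 payload bytes per frame) and 1538 bytes on the wire per frame including preamble and inter-frame gap. SMB protocol overhead and disk speed are ignored, so real transfers will be somewhat slower.

```python
LINK_BPS = 1_000_000_000   # one gigabit link
PAYLOAD_BYTES = 1460       # TCP payload per frame (1500 MTU - 20 IP - 20 TCP)
WIRE_BYTES = 1538          # 1500 + 14 header + 4 FCS + 8 preamble + 12 gap


def usable_mb_per_s(link_bps=LINK_BPS):
    """Approximate TCP payload throughput of a link in MB/s (10^6 bytes)."""
    return link_bps / 8 * PAYLOAD_BYTES / WIRE_BYTES / 1e6


def transfer_seconds(size_bytes, link_bps=LINK_BPS):
    """Best-case time to move size_bytes over the link."""
    return size_bytes / (usable_mb_per_s(link_bps) * 1e6)


rate = usable_mb_per_s()
seconds_4gb = transfer_seconds(4e9)  # the largest file size mentioned in the thread

print(f"usable throughput: about {rate:.1f} MB/s")
print(f"4 GB file: at least {seconds_4gb:.0f} s on a saturated 1 Gbps link")
```

In the best case, then, saving one 4 GB file can occupy a single gigabit link for over half a minute, during which anyone hashed onto the same link competes for the same bandwidth.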
