

ITisTheLaw

May 14, 2017

RN3220 / RN4200 Crippling iSCSI Write Performance

I've got a mixed OEM ecosystem with several Tier 2 RN3220's and RN4200's along with Tier 1 EqualLogic storage appliances.

All ReadyNAS appliances have a full complement of 12 Seagate Enterprise 4TB drives in them.

I wanted to be able to use the ReadyNAS units as iSCSI options, particularly for maintenance cycles on the storage network, so I re-purposed them from NFS use. I factory reset them onto 6.6 firmware and they have subsequently been upgraded to 6.7.1 with no change in symptoms.

- The drives have no errors

- It's a new install, so there are no fragmentation issues etc.

- The NAS's use X-RAID

- I've set them up with 2 NIC 1GbE MPIO from the Hyper-V side (I've also tried it on 10GbE and on 1 NIC 1GbE with no impact).

- 9k jumbo frames are on

- There are no teams on the RN's

- Checksum is off

- Quota is off

- iSCSI Target LUN's are all thick

- Write caching is on (UPS's are in place)

- Sync writes are disabled

- As many services as I can find are off, there are no SMB shares

- No NetGear apps or cloud services

- No snapshots

The short summary of the problem is that if I migrate a VM's VHDX storage from the EqualLogic on to any of the ReadyNAS appliances, across the same storage network and running from the same hypervisor, the data migration takes an eternity and once the move completes the write performance is utterly, utterly crippled.

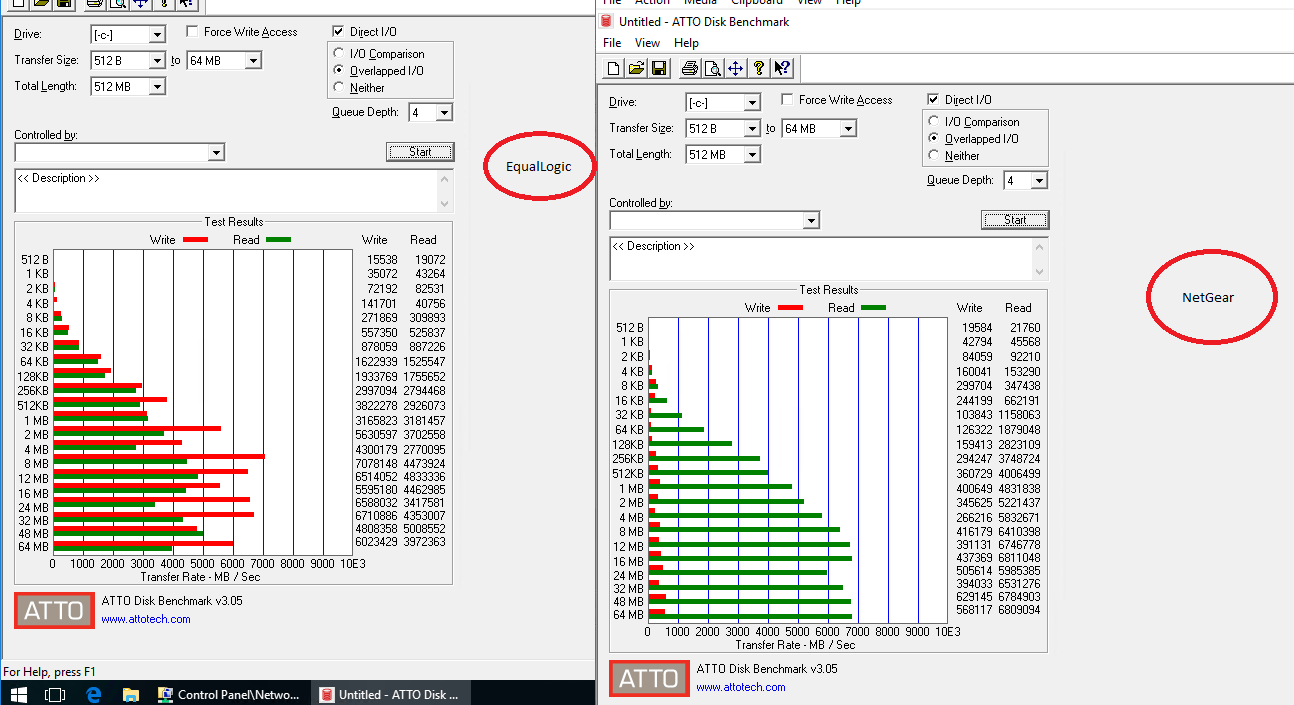

Here are the ATTO stats:

Same VM, on the same 1GbE network. The results above were taken over 1x 1GbE, single path, all running on the same switches.

Moving the VM back to the EqualLogic storage takes a fraction of the time to move and the performance of the VM is instantly restored.

According to the ATTO data above the ReadyNAS should be offering better performance than the substantially more expensive EqualLogic, however in this condition these units are next to useless.

If I create an SMB share and perform a file copy over the storage network, I can get 113MB/s on a 1x1GbE NIC with no problems. So it does not look like a network issue.

Does anyone have any ideas? I have one of the 3220's in a state that I can do anything to.

The only thing that I haven't done is try Flex-RAID instead of X-RAID, but these numbers do not look like a RAID parity calculation issue.

Many thanks,

25 Replies


To offer an update.

I've now upgraded the firmware to 6.7.2, with no change in performance.

I deleted the array and created a new RAID6 across all 12 drives using Flex-RAID instead of X-RAID. I let it write the parity overnight and complete the sync, then re-tested. The performance was worse.

Next I deleted the RAID6, recreated it as a RAID0, and then repeated. The same problem, with similar dire write performance levels. The increase in the read performance is evident though.

Again, keeping it single path, no-MPIO. During the VHDX copy into the iSCSI target, the NIC is unable to get higher than 700Mbps and the Max value on the NIC graph on the RN3220 shows 74.1M. The average value is about 450Mbps. Sending it the other way caps out at about 800Mbps (I would expect higher, but the test EqualLogic shelf is actually busy in production). There is however a very clear difference in write behaviours between the two.

Sending the VHDX from the EqualLogic to the NetGear is bursting! It'll go 200, 700, 100, 300, 500, 400, 700, 200, 400, 600, 700, 400 and so on. Sending the VHDX back to the EqualLogic is completely stable at 800Mbps within a margin of ~40Kbps. It is as if the RN3220 has filled a buffer and had to back off.
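To put a number on the burstiness described above, here is a minimal Python sketch that takes the throughput readings quoted in the post and computes their mean and coefficient of variation. The helper name `burstiness` is made up for illustration, and the samples are eyeballed values from the NIC graph, not a proper capture.

```python
from statistics import mean, pstdev

# Throughput readings in Mbps, as quoted in the post for the copy
# from the EqualLogic to the RN3220.
readynas_mbps = [200, 700, 100, 300, 500, 400, 700, 200, 400, 600, 700, 400]


def burstiness(samples):
    """Return (mean, coefficient of variation) for throughput samples."""
    avg = mean(samples)
    return avg, pstdev(samples) / avg


avg, cv = burstiness(readynas_mbps)
print(f"ReadyNAS: mean {avg:.0f} Mbps, coefficient of variation {cv:.2f}")

# The return path was described as 800 Mbps within roughly 40 Kbps.
equallogic_relative_margin = 0.04 / 800  # 40 Kbps expressed in Mbps
print(f"EqualLogic: relative margin {equallogic_relative_margin:.5f}")
```

The mean of those readings comes out close to the roughly 450Mbps average seen on the graph, while the spread is several orders of magnitude larger than on the EqualLogic side.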

I've also tried it on a hypervisor that does not have Dell HIT installed on it in case the Dell DSM is being "anti-competitive". There was no change.

I've re-confirmed the 9K end to end frame size is working correctly.

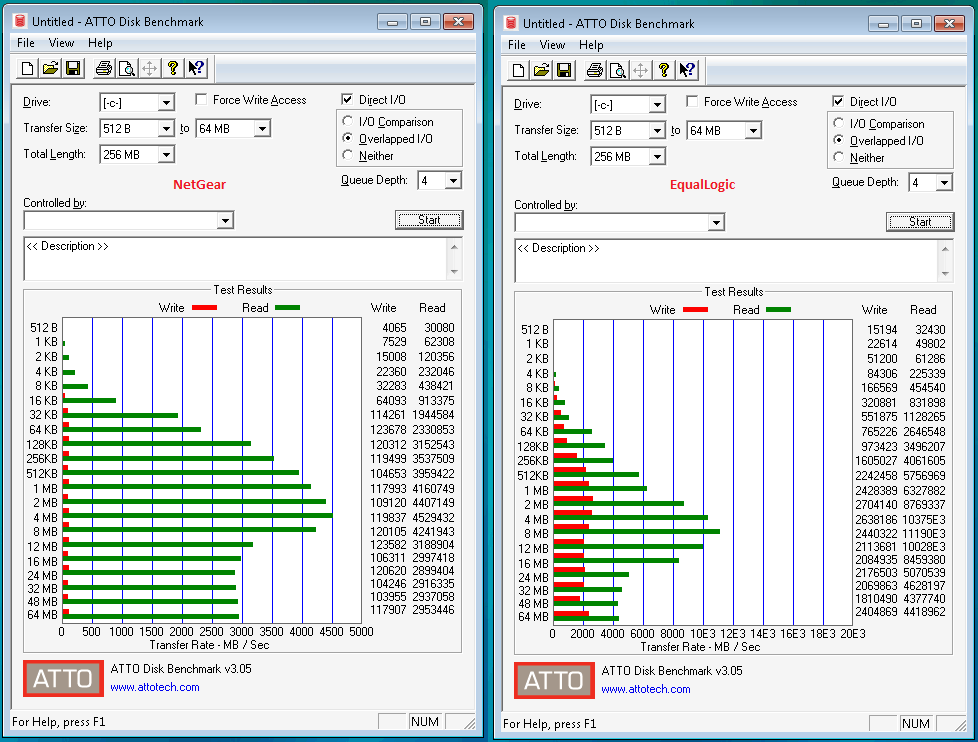

Here is an ATTO retest after everything new that I have tried. The NetGear is RAID0 in this, the EqualLogic RAID10.

Also to add to the above list, the iSCSI LUN's are formatted as 64K NTFS

p.s. the EqualLogic was extremely busy when I did this, hence the far lower performance numbers.

I've now tried playing with the NIC buffers in /etc/sysctl.conf. They are only 206KB by default. I've tried values between that and 12MB, and while there was a slight improvement in read numbers around the 2MB mark, nothing at all happened with the write speeds. The array is still RAID0.
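One way to sanity-check the buffer sizes is the bandwidth-delay product: the number of bytes that have to be in flight to keep a link full. The sketch below is only illustrative. The 0.5 ms round-trip time is an assumption, not a measurement from this storage network, and `bdp_bytes` is a hypothetical helper name.

```python
def bdp_bytes(link_bps: float, rtt_seconds: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to fill the link."""
    return link_bps * rtt_seconds / 8


# ASSUMPTION: 0.5 ms round trip on a switched LAN. Measure the real value.
ASSUMED_RTT = 0.0005

for name, bps in (("1GbE", 1e9), ("10GbE", 10e9)):
    kib = bdp_bytes(bps, ASSUMED_RTT) / 1024
    print(f"{name}: about {kib:.0f} KiB in flight to fill the link")
```

Under that assumed latency, the default buffer already exceeds what a single 1GbE path needs, which would be consistent with larger buffers making no difference to the write speeds.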

I've also tried as per waldbauer_com's post in

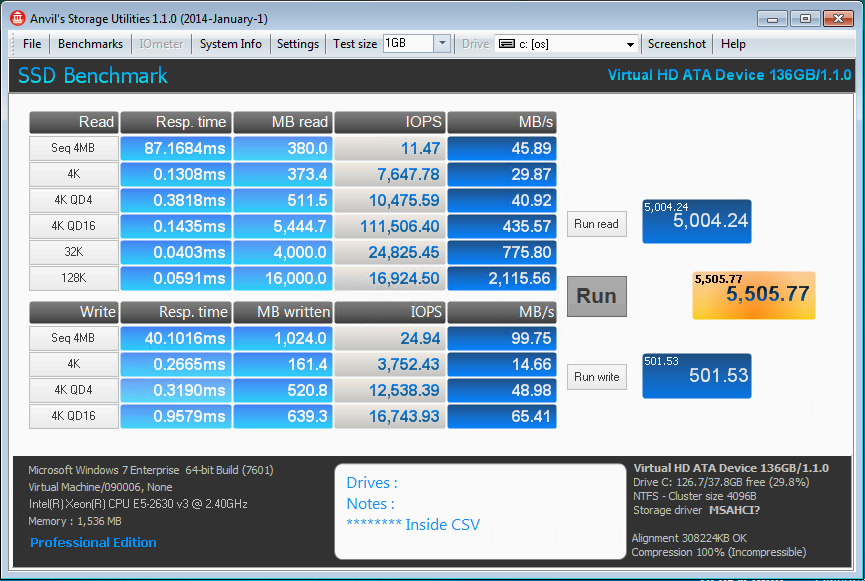

The Windows 7 VM is so sluggish, if you try and do too much at once, it blue screens out.

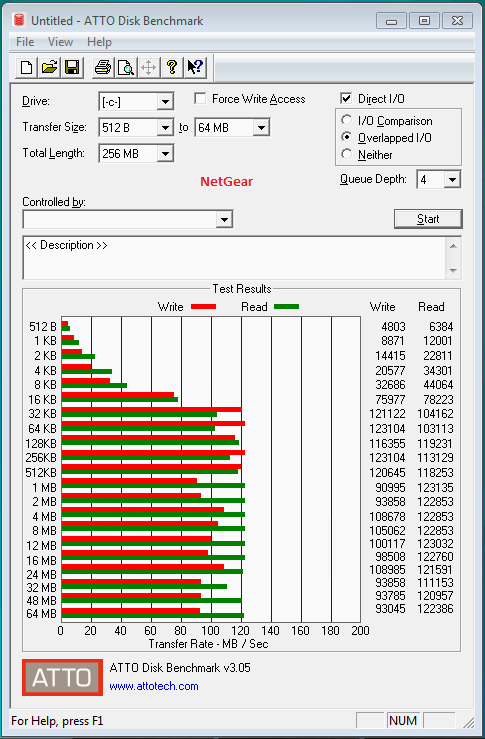

Finally, if I take the LUN out of the CSV and re-mount it (on the same iSCSI connection) as local storage on the hypervisor, this is what happens to the ATTO stats, and quite a lot of the sluggishness from running on a single 1GbE vanishes too.

So perhaps the issue is actually in NetGear compatibility with CSV?

I've now swapped into the array an Intel DP NIC and have linked the 10GbE into the system and it's offering even worse performance.

Intel DP ET 1000BASE-T Server Adapter

- Switched

- 9k frames

- Tested as one port and dual port RR MPIO

- Slightly worse performance in read and write than the built-in adapters

QLogic BCM57810 10GbE 10GSFP+Cu

- Point to point on a 3m SFP+DA, no switching involved

- No Cluster Shared Volume

- On a dedicated 1TB LUN

- RAID0 underlying array

- Nothing else happening on the array

- All other optimisations as above.

So I think it's safe to assume it's not any of the NICs involved here.

First few lines of TOP during a benchmark

%Cpu(s): 0.0 us, 1.9 sy, 0.0 ni, 97.7 id, 0.3 wa, 0.0 hi, 0.1 si, 0.0 st

KiB Mem: 3926940 total, 3679804 used, 247136 free, 2196 buffers

KiB Swap: 3139580 total, 0 used, 3139580 free. 3318992 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

6306 root 20 0 0 0 0 S 4.0 0.0 5:23.73 iscsi_trx

Internally, do a large file copy within a VM and it'll settle at 19.8MB/s

So basically I've inherited several 4x1GbE disk arrays that on 12x 7200 rpm Enterprise SATA drives in RAID 0 cannot write at more than 690Mbps in occasional, yet quite infrequent bursts?

- mdgm-ntgr (NETGEAR Employee Retired)

Hi mdgm,

Thanks for replying,

I have installed 6.7.3 as advised and rebooted.

Going from 9000 to 1500 on the Netgear, and from 9014 to 1514 on the hypervisor, yields a more stable sequential file transfer when moving the test VM back in (102GB). It broadly sits between 580 and 660Mbps, predominantly over 600Mbps. This is on 1x1GbE Netgear side. Max Rx on eth2 on the Netgear states 76.7MB (613.6Mbps).
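For reference, the conversion between the MB/s figure on the ReadyNAS graph and the Mbps figure on the hypervisor, plus a rough transfer time for the 102GB test VM, can be sketched as below. This assumes decimal units (1 GB = 8000 megabits) and a perfectly sustained rate. Both function names are made up for the example.

```python
def mb_per_s_to_mbps(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second."""
    return mb_per_s * 8


def transfer_minutes(size_gb: float, rate_mbps: float) -> float:
    """Minutes to move size_gb (decimal GB) at a sustained rate in Mbps."""
    return size_gb * 8000 / rate_mbps / 60


print(f"{mb_per_s_to_mbps(76.7):.1f} Mbps")
print(f"{transfer_minutes(102, 600):.1f} minutes at a sustained 600 Mbps")
```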

Here is an odd thing though: I've set the eth2 IP address to 192.168.171.1 on 1500 MTU and then rebooted the array. Yet I can still ping 192.168.171.1 with an 8000-byte ICMP echo. ARP confirms that it is talking to the correct NIC.
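One possible explanation, offered as a guess and not confirmed in the thread: unless the Don't Fragment flag is set, an oversized echo request is simply split into IP fragments, so a large ping succeeding does not by itself prove the MTU. On Windows, `ping -f -l <size>` sets that flag. The sketch below works out the largest ping payload that fits in one frame and how many fragments an 8000-byte echo needs at a given MTU, assuming IPv4 headers without options. The function names are hypothetical.

```python
import math

IP_HEADER = 20   # bytes, IPv4 header without options (assumption)
ICMP_HEADER = 8  # bytes, ICMP echo header


def max_unfragmented_ping(mtu: int) -> int:
    """Largest ICMP echo payload that fits in a single IP packet."""
    return mtu - IP_HEADER - ICMP_HEADER


def fragments_needed(icmp_payload: int, mtu: int) -> int:
    """Number of IP fragments needed to carry one echo of this payload size."""
    # Fragment data must be a multiple of 8 bytes, except in the last fragment.
    per_fragment = (mtu - IP_HEADER) // 8 * 8
    return math.ceil((icmp_payload + ICMP_HEADER) / per_fragment)


print(max_unfragmented_ping(1500))   # largest single-frame ping at 1500 MTU
print(max_unfragmented_ping(9000))   # largest single-frame ping at 9000 MTU
print(fragments_needed(8000, 1500))  # fragments for an 8000-byte echo
```

Repeating the ping with Don't Fragment set and a payload just above the single-frame limit would show whether the 1500 MTU setting has actually taken effect.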

I've set the hypervisor to 1514

Jumbo Packet Disabled *JumboPacket {1514}

Rebooted the hypervisor.

Inside the CSV:

Not in a CSV (E:\ as a local iSCSI mount) - this was painful as the array had to copy from and to itself via the Hypervisor. The hypervisor was receiving and sending on the NIC at 380Mbps, the Netgear eth2 says 43.6MB. A larger burst variance was visible during the copy, between 230 and 480Mbps.

Max values on the NICs have dropped to 55.8MB, which would presumably be down to the lack of 9K
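As a rough check on how much of that drop framing overhead alone could explain, here is a sketch of the theoretical TCP payload rate on 1GbE at each MTU. It assumes standard Ethernet framing (38 bytes per frame including preamble and inter-frame gap) and IPv4 plus TCP headers without options, and it ignores iSCSI PDU headers entirely. `tcp_goodput_mb_per_s` is a made-up helper name.

```python
ETH_OVERHEAD = 38    # preamble+SFD 8, header 14, FCS 4, inter-frame gap 12
IP_TCP_HEADERS = 40  # IPv4 20 + TCP 20, no options (assumption)


def tcp_goodput_mb_per_s(mtu: int, link_mbps: float = 1000) -> float:
    """Theoretical TCP payload rate in MB/s for a given MTU and link speed."""
    payload = mtu - IP_TCP_HEADERS  # application bytes per frame
    on_wire = mtu + ETH_OVERHEAD    # bytes the frame occupies on the wire
    return link_mbps / 8 * payload / on_wire


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {tcp_goodput_mb_per_s(mtu):.1f} MB/s")
```

On these assumptions the difference on the wire is only about 4%, so the header overhead by itself would not account for a drop from 76.7MB to 55.8MB. Per-packet processing cost on either end is another candidate, but that is speculation.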
