Missing volume after power failure

I had a power failure that lasted too long so my UPS shut down my Ultra 4 (OS 6.6.1)

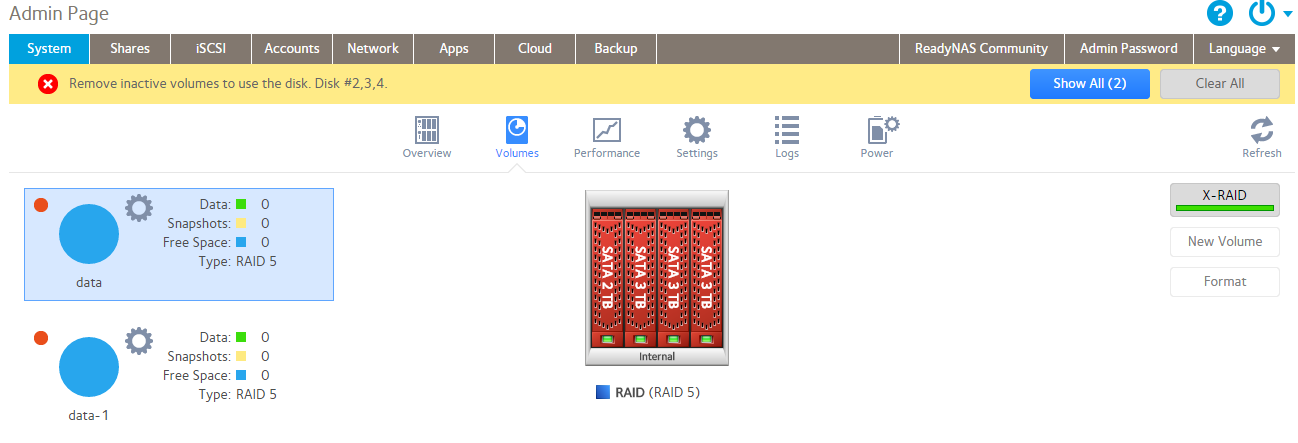

When booting it up again I had this screen:

I am missing my Volume and ofc my shares too and it seems that the raid is broken since I now got a data-1?

Using ssh I can see that the volume is not mounted:

    root@Ultra:~# df
    Filesystem     1K-blocks    Used Available Use% Mounted on
    udev               10240       4     10236   1% /dev
    /dev/md0         4190208 1917460   1827244  52% /
    tmpfs            1017304       4   1017300   1% /dev/shm
    tmpfs            1017304    5468   1011836   1% /run
    tmpfs             508652    1020    507632   1% /run/lock
    tmpfs            1017304       0   1017304   0% /sys/fs/cgroup

No drives were reporting errors before, so hopefully they are still OK.

Is there anything I can do?

I've tried rebooting it a second time, without any change.

Any advice appreciated.

Accepted Solutions

@F_L_ wrote: Then I get:

    root@Ultra:/# mount --bind / /mnt
    root@Ultra:/# du -h --max-depth=1 /mnt
    1.8G    /mnt/data

There's your issue... /mnt/data should be empty.

Do you have replicate jobs running? It's common for such a job to keep pushing data to /data even though the data volume is not mounted, which fills up the OS volume.

Then, once the data volume is mounted on /data, you can no longer see what was in the folder itself, but the OS volume is still full.

As you have temporarily bind-mounted the OS volume on /mnt, you should check what's in /mnt/data and clear it to free up space on your OS volume. WARNING: Be careful what you're doing: you want to clear /mnt/data, NOT /data (where your actual data volume is mounted)!
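For anyone following along, the check described above could be sketched as a small shell helper. This is only an illustration: `report_shadowed` is a made-up name, and the usage in the comments assumes a root shell on the NAS with /mnt otherwise unused.

```shell
#!/bin/sh
# Sketch only: review what is stored in a directory that is normally
# hidden underneath a mount point. The function name is hypothetical.

# report_shadowed DIR
# Prints the total size of DIR, then its top-level entries (dotfiles included).
report_shadowed() {
    dir="$1"
    if [ ! -d "$dir" ]; then
        echo "not a directory: $dir" >&2
        return 1
    fi
    du -sh "$dir"
    ls -A "$dir"
}

# Intended use on the NAS, as root (assumption: /mnt is free):
#   mount --bind / /mnt          # expose the OS volume without /data mounted on top
#   report_shadowed /mnt/data    # review first, delete nothing yet
#   # ...then remove stray files under /mnt/data only, never under /data...
#   umount /mnt
```

The helper only reads; deleting is left as a manual step on purpose, so that each path can be double-checked against the warning above.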

All Replies

Re: Missing volume after power failure

Well, of course you aren't entitled to normal support (even if you are willing to pay), since you are running OS 6 on a legacy ReadyNAS. Netgear can offer you a data recovery contract, but that would be pretty expensive.

Perhaps a Netgear mod here will reach out to you and offer to take a look.

Re: Missing volume after power failure

I understand that.

I was hoping that the expertise in the community could help me, since I don't really understand what happened.

Re: Missing volume after power failure

I've seen the symptoms posted here before, but unfortunately I don't know how to resolve it.

Re: Missing volume after power failure

I see what you mean now when reading the forum.

This seems to have something to do with versions >= 6.6.0?

I can't find any good response from Netgear though 😞

Re: Missing volume after power failure

You could PM mdgm or skywalker, and ask if there are ssh commands you can use to remount the original volume.

FWIW, I agree with @Sandshark's comment that this seems to be happening too often.

Re: Missing volume after power failure

Can the image be approved so non-moderators can see it, please?

Re: Missing volume after power failure

@jak0lantash wrote:

Can the image be approved so non-moderators can see it, please?

Done.

Re: Missing volume after power failure

Thanks @StephenB

The screenshot shows two RAID arrays which correspond to the members of the BTRFS volume created by X-RAID:

md127 = (RAID5) 4x2TB

md126 = (RAID5) 3x1TB

If you download the logs and look inside systemd-journal.log, you should see some lines with "kernel: md" with information about sda3, sdb3, sdc3, sdd3, md127, sdb4, sdc4, sdd4 and md126.

If you share them here, it would help us understand the current status of your RAID arrays.
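A minimal sketch of how those lines could be pulled out of the downloaded log, assuming the file is named systemd-journal.log as above and the disks are sda to sdd. The function name `md_lines` is made up for illustration.

```shell
#!/bin/sh
# Sketch only: filter kernel md messages that mention the data partitions
# (sdX3, sdX4) or the data arrays (md126, md127). Hypothetical helper name.

# md_lines FILE
md_lines() {
    grep 'kernel: md' "$1" | grep -E 'sd[a-d][34]|md12[67]'
}

# Example, run in the folder where the log zip was extracted:
#   md_lines systemd-journal.log
```

Lines about sdX1 and sdX2 are filtered out on purpose, since those partitions belong to the OS and swap arrays rather than the data volume.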

In any case, contacting NETGEAR Support for a Data Recovery Contract is probably your best chance to get your data back / fix the RAID arrays if you don't have (much) experience with mdadm.

Re: Missing volume after power failure

Here are some entries from the log.

Let me know if you need some other info.

    Mar 13 19:17:27 Ultra kernel: md: md1 stopped.
    Mar 13 19:17:27 Ultra kernel: md: bind<sdb2>
    Mar 13 19:17:27 Ultra kernel: md: bind<sdc2>
    Mar 13 19:17:27 Ultra kernel: md: bind<sdd2>
    Mar 13 19:17:27 Ultra kernel: md: bind<sda2>
    Mar 13 19:17:27 Ultra kernel: md/raid:md1: device sda2 operational as raid disk 0
    Mar 13 19:17:27 Ultra kernel: md/raid:md1: device sdd2 operational as raid disk 3
    Mar 13 19:17:27 Ultra kernel: md/raid:md1: device sdc2 operational as raid disk 2
    Mar 13 19:17:27 Ultra kernel: md/raid:md1: device sdb2 operational as raid disk 1
    Mar 13 19:17:27 Ultra kernel: md/raid:md1: allocated 4354kB
    Mar 13 19:17:27 Ultra kernel: md/raid:md1: raid level 6 active with 4 out of 4 devices, algorithm 2
    Mar 13 19:17:27 Ultra kernel: RAID conf printout:
    Mar 13 19:17:27 Ultra kernel: --- level:6 rd:4 wd:4
    Mar 13 19:17:27 Ultra kernel: disk 0, o:1, dev:sda2
    Mar 13 19:17:27 Ultra kernel: disk 1, o:1, dev:sdb2
    Mar 13 19:17:27 Ultra kernel: disk 2, o:1, dev:sdc2
    Mar 13 19:17:27 Ultra kernel: disk 3, o:1, dev:sdd2
    Mar 13 19:17:27 Ultra kernel: md1: detected capacity change from 0 to 1071644672
    Mar 13 19:17:27 Ultra kernel: BTRFS: device label 5e26b5f2:root devid 1 transid 2850344 /dev/md0
    Mar 13 19:17:27 Ultra systemd[1]: Failed to insert module 'kdbus': Function not implemented
    Mar 13 19:18:39 Ultra kernel: md/raid1:md0: redirecting sector 7560752 to other mirror: sda1
    Mar 13 19:26:03 Ultra kernel: md: raid0 personality registered for level 0
    Mar 13 19:26:03 Ultra kernel: md: raid1 personality registered for level 1
    Mar 13 19:26:03 Ultra kernel: md: raid10 personality registered for level 10
    Mar 13 19:26:03 Ultra kernel: md: raid6 personality registered for level 6
    Mar 13 19:26:03 Ultra kernel: md: raid5 personality registered for level 5
    Mar 13 19:26:03 Ultra kernel: md: raid4 personality registered for level 4
    Mar 13 19:26:03 Ultra kernel: md: md0 stopped.
    Mar 13 19:26:03 Ultra kernel: md: bind<sdb1>
    Mar 13 19:26:03 Ultra kernel: md: bind<sdc1>
    Mar 13 19:26:03 Ultra kernel: md: bind<sdd1>
    Mar 13 19:26:03 Ultra kernel: md: bind<sda1>
    Mar 13 19:26:03 Ultra kernel: md/raid1:md0: active with 4 out of 4 mirrors
    Mar 13 19:26:03 Ultra kernel: md0: detected capacity change from 0 to 4290772992
    Mar 13 19:26:03 Ultra kernel: md: md1 stopped.
    Mar 13 19:26:03 Ultra kernel: md: bind<sdb2>
    Mar 13 19:26:03 Ultra kernel: md: bind<sdc2>
    Mar 13 19:26:03 Ultra kernel: md: bind<sdd2>
    Mar 13 19:26:03 Ultra kernel: md: bind<sda2>
    Mar 13 19:26:03 Ultra kernel: md/raid:md1: device sda2 operational as raid disk 0
    Mar 13 19:26:03 Ultra kernel: md/raid:md1: device sdd2 operational as raid disk 3
    Mar 13 19:26:03 Ultra kernel: md/raid:md1: device sdc2 operational as raid disk 2
    Mar 13 19:26:03 Ultra kernel: md/raid:md1: device sdb2 operational as raid disk 1
    Mar 13 19:26:03 Ultra kernel: md/raid:md1: allocated 4354kB
    Mar 13 19:26:03 Ultra kernel: md/raid:md1: raid level 6 active with 4 out of 4 devices, algorithm 2
    Mar 13 19:26:03 Ultra kernel: RAID conf printout:
    Mar 13 19:26:03 Ultra kernel: --- level:6 rd:4 wd:4
    Mar 13 19:26:03 Ultra kernel: disk 0, o:1, dev:sda2
    Mar 13 19:26:03 Ultra kernel: disk 1, o:1, dev:sdb2
    Mar 13 19:26:03 Ultra kernel: disk 2, o:1, dev:sdc2
    Mar 13 19:26:03 Ultra kernel: disk 3, o:1, dev:sdd2
    Mar 13 19:26:03 Ultra kernel: md1: detected capacity change from 0 to 1071644672
    Mar 13 19:26:03 Ultra kernel: BTRFS: device label 5e26b5f2:root devid 1 transid 2850363 /dev/md0
    Mar 13 19:26:03 Ultra systemd[1]: Failed to insert module 'kdbus': Function not implemented
    Mar 13 19:26:03 Ultra systemd[1]: systemd 230 running in system mode. (+PAM +AUDIT +SELINUX +IMA +APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 +SECCOMP +BLKID +ELFUTILS +KMOD +IDN)
    Mar 13 19:26:03 Ultra systemd[1]: Detected architecture x86-64.

Re: Missing volume after power failure

Unfortunately, this is only showing info about the OS (on sdx1) and the swap volumes (on sdx2).

Is there no entry about sdx3 and sdx4?

Can you post the content of partitions.log?

Re: Missing volume after power failure

I can't find anything in the log about sdx.

Here is the partition log:

    major minor      #blocks name
        8     0   1953514584 sda
        8     1      4194304 sda1
        8     2       524288 sda2
        8     3   1948793912 sda3
        8    16   2930266584 sdb
        8    17      4194304 sdb1
        8    18       524288 sdb2
        8    19   1948793912 sdb3
        8    20    976754027 sdb4
        8    32   2930266584 sdc
        8    33      4194304 sdc1
        8    34       524288 sdc2
        8    35   1948793912 sdc3
        8    36    976754027 sdc4
        8    48   2930266584 sdd
        8    49      4194304 sdd1
        8    50       524288 sdd2
        8    51   1948793912 sdd3
        8    52    976754027 sdd4
        9     0      4190208 md0
        9     1      1046528 md1
        9   127   5845988352 md127
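Worth noting in the listing above: sdb4, sdc4 and sdd4 are present, but only md0, md1 and md127 show up as arrays, with no md126. A quick way to check a partitions.log for expected device names might look like the sketch below; `missing_devices` is a hypothetical helper, and it assumes the device name is the fourth column, as in the listing.

```shell
#!/bin/sh
# Sketch only: report which expected device names are absent from a
# partitions listing. Hypothetical helper; assumes the name is column 4.

# missing_devices FILE NAME...
missing_devices() {
    file="$1"
    shift
    for name in "$@"; do
        # awk exits 0 only if some row has NAME in its fourth column
        if ! awk -v n="$name" '$4 == n { found = 1 } END { exit !found }' "$file"; then
            echo "$name"
        fi
    done
}

# Example:
#   missing_devices partitions.log md126 md127
```

An array missing from this list only says it was not assembled at the time the log was taken; it does not say why.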

Re: Missing volume after power failure

I think it is more efficient if you can read the log 🙂

https://www.dropbox.com/s/h2ubk4tldk0po4e/systemd-journal.log?dl=0

But please let me know if I should look for anything specific

Re: Missing volume after power failure

Started well:

    Mar 13 19:26:03 Ultra kernel: md: bind<sdb3>
    Mar 13 19:26:03 Ultra systemd[1]: Mounted POSIX Message Queue File System.
    Mar 13 19:26:03 Ultra kernel: md: bind<sdc3>
    Mar 13 19:26:03 Ultra kernel: md: bind<sdd3>
    Mar 13 19:26:03 Ultra kernel: md: bind<sda3>
    Mar 13 19:26:03 Ultra kernel: md/raid:md127: device sda3 operational as raid disk 0
    Mar 13 19:26:03 Ultra kernel: md/raid:md127: device sdd3 operational as raid disk 3
    Mar 13 19:26:03 Ultra kernel: md/raid:md127: device sdc3 operational as raid disk 2
    Mar 13 19:26:03 Ultra kernel: md/raid:md127: device sdb3 operational as raid disk 1
    Mar 13 19:26:03 Ultra kernel: md/raid:md127: allocated 4354kB
    Mar 13 19:26:03 Ultra kernel: md/raid:md127: raid level 5 active with 4 out of 4 devices, algorithm 2
    Mar 13 19:26:03 Ultra kernel: RAID conf printout:
    Mar 13 19:26:03 Ultra kernel: --- level:5 rd:4 wd:4
    Mar 13 19:26:03 Ultra kernel: disk 0, o:1, dev:sda3
    Mar 13 19:26:03 Ultra kernel: disk 1, o:1, dev:sdb3
    Mar 13 19:26:03 Ultra kernel: disk 2, o:1, dev:sdc3
    Mar 13 19:26:03 Ultra kernel: disk 3, o:1, dev:sdd3
    Mar 13 19:26:03 Ultra kernel: md127: detected capacity change from 0 to 5986292072448

But then... Ouch... I don't want to stress you, but that is not very good.

Mar 13 19:30:40 Ultra kernel: md: md126 stopped.

Mar 13 19:30:40 Ultra kernel: NMI watchdog: BUG: soft lockup - CPU#0 stuck for 22s! [systemd-hwdb:1338]

Mar 13 19:30:40 Ultra kernel: Modules linked in: vpd(PO)

Mar 13 19:30:42 Ultra kernel: CPU: 0 PID: 1338 Comm: systemd-hwdb Tainted: P O 4.1.30.x86_64.1 #1

Mar 13 19:30:42 Ultra kernel: Hardware name: NETGEAR ReadyNAS/ReadyNAS , BIOS 080016 08/23/2011

Mar 13 19:30:42 Ultra kernel: task: ffff88007bea6e40 ti: ffff880078594000 task.ti: ffff880078594000

Mar 13 19:30:42 Ultra kernel: RIP: 0010:[<ffffffff883d8529>] [<ffffffff883d8529>] _find_next_bit.part.0+0x69/0x70

Mar 13 19:30:42 Ultra kernel: RSP: 0018:ffff8800785975a0 EFLAGS: 00000216

Mar 13 19:30:42 Ultra kernel: RAX: 0000000000004612 RBX: 0000000000000001 RCX: 0000000000000012

Mar 13 19:30:42 Ultra kernel: RDX: 0000000000004612 RSI: fffffffffffc0000 RDI: ffff880078647000

Mar 13 19:30:42 Ultra kernel: RBP: ffff8800785975a8 R08: 800f3cd6de653800 R09: 0000000000000000

Mar 13 19:30:42 Ultra kernel: R10: 0000000000000001 R11: ffff88007c4019c0 R12: 0000000000000001

Mar 13 19:30:42 Ultra kernel: R13: ffff880078597598 R14: 0000000000080000 R15: ffff8800785975f8

Mar 13 19:30:42 Ultra kernel: FS: 00007ff340138740(0000) GS:ffff88007f000000(0000) knlGS:0000000000000000

Mar 13 19:30:42 Ultra kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Mar 13 19:30:42 Ultra kernel: CR2: 00007f3ba07291f8 CR3: 000000007869c000 CR4: 00000000000006f0

Mar 13 19:30:42 Ultra kernel: Stack:

Mar 13 19:30:42 Ultra kernel: ffffffff883d8548 ffff880078597608 ffffffff8832dba1 ffff88007ba94ab2

Mar 13 19:30:42 Ultra kernel: ffff88007c341518 ffff880078597640 ffff880078597638 ffff880078597608

Mar 13 19:30:42 Ultra kernel: 000000000049c000 0000000000001000 ffff88007b852f00 ffff880078597720

Mar 13 19:30:42 Ultra kernel: Call Trace:

Mar 13 19:30:42 Ultra kernel: [<ffffffff883d8548>] ? find_next_bit+0x18/0x20

Mar 13 19:30:42 Ultra kernel: [<ffffffff8832dba1>] search_bitmap.isra.32+0x91/0x120

Mar 13 19:30:42 Ultra kernel: [<ffffffff8833077c>] btrfs_find_space_for_alloc+0xec/0x250

Mar 13 19:30:42 Ultra kernel: [<ffffffff882d403d>] find_free_extent.isra.90+0x35d/0xb20

Mar 13 19:30:42 Ultra kernel: [<ffffffff882d48b3>] btrfs_reserve_extent+0xb3/0x150

Mar 13 19:30:42 Ultra kernel: [<ffffffff882ee5bc>] cow_file_range+0x14c/0x4c0

Mar 13 19:30:42 Ultra kernel: [<ffffffff882ef86a>] run_delalloc_range+0x36a/0x3f0

Mar 13 19:30:42 Ultra kernel: [<ffffffff8830656c>] writepage_delalloc.isra.42+0xec/0x160

Mar 13 19:30:42 Ultra kernel: [<ffffffff88308d2f>] __extent_writepage+0xaf/0x260

Mar 13 19:30:42 Ultra kernel: [<ffffffff88309212>] extent_write_cache_pages.isra.36.constprop.53+0x332/0x400

Mar 13 19:30:42 Ultra kernel: [<ffffffff883097a8>] extent_writepages+0x48/0x60

Mar 13 19:30:42 Ultra kernel: [<ffffffff882eb190>] ? btrfs_update_inode_item+0x120/0x120

Mar 13 19:30:42 Ultra kernel: [<ffffffff882e9b03>] btrfs_writepages+0x23/0x30

Mar 13 19:30:42 Ultra kernel: [<ffffffff880f6929>] do_writepages+0x19/0x40

Mar 13 19:30:42 Ultra kernel: [<ffffffff880edb31>] __filemap_fdatawrite_range+0x51/0x60

Mar 13 19:30:42 Ultra kernel: [<ffffffff880edbd7>] filemap_flush+0x17/0x20

Mar 13 19:30:42 Ultra kernel: [<ffffffff882f5a1c>] btrfs_rename2+0x30c/0x6d0

Mar 13 19:30:42 Ultra kernel: [<ffffffff883757b7>] ? security_inode_permission+0x17/0x20

Mar 13 19:30:42 Ultra kernel: [<ffffffff88137cfa>] vfs_rename+0x53a/0x7e0

Mar 13 19:30:42 Ultra kernel: [<ffffffff8813c941>] SyS_renameat2+0x4c1/0x560

Mar 13 19:30:42 Ultra kernel: [<ffffffff88112c89>] ? vma_rb_erase+0x129/0x230

Mar 13 19:30:42 Ultra kernel: [<ffffffff8813ca09>] SyS_rename+0x19/0x20

Mar 13 19:30:42 Ultra kernel: [<ffffffff88a520d7>] system_call_fastpath+0x12/0x6a

Mar 13 19:30:42 Ultra kernel: Code: 66 90 48 83 c2 40 48 39 c2 73 1f 49 89 d0 4c 89 c9 49 c1 e8 06 4a 33 0c c7 74 e7 f3 48 0f bc c9 48 01 ca 48 39 d0 48 0f 47 c2 5d <c3> 66 0f 1f 44 00 00 48 39 d6 48 89 f0 76 11 48 85 f6 74 0c 55

Mar 13 19:30:42 Ultra kernel: NMI watchdog: BUG: soft lockup - CPU#0 stuck for 22s! [systemd-hwdb:1338]

Mar 13 19:30:42 Ultra kernel: Modules linked in: vpd(PO)

Mar 13 19:30:43 Ultra kernel: CPU: 0 PID: 1338 Comm: systemd-hwdb Tainted: P O L 4.1.30.x86_64.1 #1

Mar 13 19:30:43 Ultra kernel: Hardware name: NETGEAR ReadyNAS/ReadyNAS , BIOS 080016 08/23/2011

Mar 13 19:30:43 Ultra kernel: task: ffff88007bea6e40 ti: ffff880078594000 task.ti: ffff880078594000

Mar 13 19:30:43 Ultra kernel: RIP: 0010:[<ffffffff883d8529>] [<ffffffff883d8529>] _find_next_bit.part.0+0x69/0x70

Mar 13 19:30:43 Ultra kernel: RSP: 0018:ffff8800785975a0 EFLAGS: 00000202

Mar 13 19:30:43 Ultra kernel: RAX: 00000000000030f0 RBX: 0000000000000001 RCX: 0000000000000030

Mar 13 19:30:43 Ultra kernel: RDX: 00000000000030f0 RSI: fffffe0000000000 RDI: ffff880078643000

Mar 13 19:30:43 Ultra kernel: RBP: ffff8800785975a8 R08: 000f00f000000000 R09: 0000000000000000

Mar 13 19:30:43 Ultra kernel: R10: 0000000000000001 R11: ffff88007c4019c0 R12: 0000000000000001

Mar 13 19:30:43 Ultra kernel: R13: ffff880078597598 R14: 0000000000080000 R15: ffff8800785975f8

Mar 13 19:30:43 Ultra kernel: FS: 00007ff340138740(0000) GS:ffff88007f000000(0000) knlGS:0000000000000000

Mar 13 19:30:43 Ultra kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Mar 13 19:30:43 Ultra kernel: CR2: 00007f3ba07291f8 CR3: 000000007869c000 CR4: 00000000000006f0

Mar 13 19:30:43 Ultra kernel: Stack:

Mar 13 19:30:43 Ultra kernel: ffffffff883d8548 ffff880078597608 ffffffff8832dba1 ffff88007ba94ab2

Mar 13 19:30:43 Ultra kernel: ffff88007c345618 ffff880078597640 ffff880078597638 ffff880078597608

Mar 13 19:30:43 Ultra kernel: 000000000049c000 0000000000001000 ffff88007b852280 ffff880078597720

Mar 13 19:30:43 Ultra kernel: Call Trace:

Mar 13 19:30:43 Ultra kernel: [<ffffffff883d8548>] ? find_next_bit+0x18/0x20

Mar 13 19:30:43 Ultra kernel: [<ffffffff8832dba1>] search_bitmap.isra.32+0x91/0x120

Mar 13 19:30:43 Ultra kernel: [<ffffffff8833077c>] btrfs_find_space_for_alloc+0xec/0x250

Mar 13 19:30:43 Ultra kernel: [<ffffffff882ca8da>] ? btrfs_put_block_group+0x1a/0x70

Mar 13 19:30:43 Ultra kernel: [<ffffffff882d403d>] find_free_extent.isra.90+0x35d/0xb20

Mar 13 19:30:43 Ultra kernel: [<ffffffff882d48b3>] btrfs_reserve_extent+0xb3/0x150

Mar 13 19:30:43 Ultra kernel: [<ffffffff882ee5bc>] cow_file_range+0x14c/0x4c0

Mar 13 19:30:43 Ultra kernel: [<ffffffff882ef86a>] run_delalloc_range+0x36a/0x3f0

Mar 13 19:30:43 Ultra kernel: [<ffffffff8830656c>] writepage_delalloc.isra.42+0xec/0x160

Mar 13 19:30:43 Ultra kernel: [<ffffffff88308d2f>] __extent_writepage+0xaf/0x260

Mar 13 19:30:43 Ultra kernel: [<ffffffff88309212>] extent_write_cache_pages.isra.36.constprop.53+0x332/0x400

Mar 13 19:30:43 Ultra kernel: [<ffffffff883097a8>] extent_writepages+0x48/0x60

Mar 13 19:30:43 Ultra kernel: [<ffffffff882eb190>] ? btrfs_update_inode_item+0x120/0x120

Mar 13 19:30:43 Ultra kernel: [<ffffffff882e9b03>] btrfs_writepages+0x23/0x30

Mar 13 19:30:43 Ultra kernel: [<ffffffff880f6929>] do_writepages+0x19/0x40

Mar 13 19:30:43 Ultra kernel: [<ffffffff880edb31>] __filemap_fdatawrite_range+0x51/0x60

Mar 13 19:30:43 Ultra kernel: [<ffffffff880edbd7>] filemap_flush+0x17/0x20

Mar 13 19:30:43 Ultra kernel: [<ffffffff882f5a1c>] btrfs_rename2+0x30c/0x6d0

Mar 13 19:30:43 Ultra kernel: [<ffffffff883757b7>] ? security_inode_permission+0x17/0x20

Mar 13 19:30:43 Ultra kernel: [<ffffffff88137cfa>] vfs_rename+0x53a/0x7e0

Mar 13 19:30:43 Ultra kernel: [<ffffffff8813c941>] SyS_renameat2+0x4c1/0x560

Mar 13 19:30:43 Ultra kernel: [<ffffffff88112c89>] ? vma_rb_erase+0x129/0x230

Mar 13 19:30:43 Ultra kernel: [<ffffffff8813ca09>] SyS_rename+0x19/0x20

Mar 13 19:30:43 Ultra kernel: [<ffffffff88a520d7>] system_call_fastpath+0x12/0x6a

Mar 13 19:30:43 Ultra kernel: Code: 66 90 48 83 c2 40 48 39 c2 73 1f 49 89 d0 4c 89 c9 49 c1 e8 06 4a 33 0c c7 74 e7 f3 48 0f bc c9 48 01 ca 48 39 d0 48 0f 47 c2 5d <c3> 66 0f 1f 44 00 00 48 39 d6 48 89 f0 76 11 48 85 f6 74 0c 55

Mar 13 19:30:43 Ultra kernel: INFO: rcu_sched self-detected stall on CPU { 0} (t=60000 jiffies g=1100 c=1099 q=3948)

[snip]

Mar 13 19:30:52 Ultra kernel: Code: 0f 1f 84 00 00 00 00 00 48 89 d0 48 8b 50 10 48 85 d2 75 f4 5d c3 66 90 48 8b 10 48 89 c7 48 89 d0 48 83 e0 fc 74 ed 48 3b 78 08 <74> eb 5d c3 31 c0 5d c3 0f 1f 44 00 00 55 48 8b 17 48 89 e5 48

Mar 13 19:30:52 Ultra systemd[1]: systemd-hwdb-update.service: Processes still around after final SIGKILL. Entering failed mode.

Mar 13 19:30:52 Ultra systemd[1]: Failed to start Rebuild Hardware Database.

Full extract: http://pastebin.com/wfS7EcmX

I honestly don't know how you can proceed without the help of NETGEAR Support (or how to help you to safely proceed further).

Re: Missing volume after power failure

OK, that doesn't sound too good 😞 Thanks for looking into it at least.

I'll wait and see if @mdgm-ntgr answers my PM.

Since this issue seems to have become more common with the later firmware, Netgear might have a clue.

I hope that this is not a bug in their firmware; if it is, my next NAS will be another brand for sure....

Re: Missing volume after power failure

Can you send in the entire logs zip file (see the Sending Logs link in my sig)?

Re: Missing volume after power failure

You have a few disks with current pending sectors. One of them has a very high count and a lot of reallocated sectors.

This does look like a data recovery situation. Various steps could be involved including disk cloning to attempt to recover the data.
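For readers who want to check those counters themselves, here is a minimal sketch. It assumes `smartctl` (from smartmontools) is available on the NAS and that the drive reports the usual attribute names `Reallocated_Sector_Ct` and `Current_Pending_Sector`; names can differ between drive vendors. `smart_summary` is a made-up helper name.

```shell
#!/bin/sh
# Sketch only: print the raw values of two SMART attributes from
# `smartctl -A` output read on stdin. Hypothetical helper name.
# Assumption: the attribute table has the name in column 2 and the
# raw value in column 10, as in the standard `smartctl -A` layout.

smart_summary() {
    awk '$2 == "Reallocated_Sector_Ct" || $2 == "Current_Pending_Sector" { print $2, $10 }'
}

# Example, as root, once per disk:
#   smartctl -A /dev/sda | smart_summary
```

This only reads the counters; it does not say whether a disk is safe to keep using.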

Re: Missing volume after power failure

So how do you suggest I proceed?

Should I replace the disk with the highest count? Can it then repair the volume?

I have some Linux skills from before but have never worked with BTRFS.

Re: Missing volume after power failure

Still, should a disc with high count make the whole raid collapse? And without any warning?

I have email alerts set up and I have not received anything related to faulty/damaged discs.

I'm not an expert, but it seems that this might not be the root cause of the failure, especially since the same problem has been reported by several users recently.

If I remove one disc from the NAS, can I mount it in Linux and extract (some) data?

Re: Missing volume after power failure

@F_L_ wrote: should a disc with high count make the whole raid collapse? And without any warning?

I am NOT saying this is the case here. But for the sake of argument, take the case of a failing disk that corrupts the RAID array: yes, a single disk can bring down the whole volume.

There are a lot of misconceptions about the level of protection given by RAID technologies. Maybe product marketing is partially responsible for the myth that RAID redundancy is enough protection, but technically it's far from being enough. Nothing replaces a good external/offsite backup.

Even regarding backups, there are a lot of misconceptions. For example, "syncing" between two devices is not a backup. It's quite the opposite: you increase the surface of vulnerability.

The best option for data redundancy is a mix of all of these, with the right balance: RAID redundancy, external backups, snapshots/versioning, checksums, etc.
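As one illustration of the checksum part, a backup copy can be verified against a manifest of SHA-256 hashes. This is a minimal sketch, not anything ReadyNAS-specific: the function names are made up, it assumes `sha256sum` and `find` are available, and the manifest should be stored outside the directory being hashed.

```shell
#!/bin/sh
# Sketch only: create and verify a SHA-256 manifest for a directory tree.
# Hypothetical helper names; keep MANIFEST outside of DIR.

# make_manifest DIR MANIFEST: record a hash for every regular file under DIR
make_manifest() {
    ( cd "$1" && find . -type f -exec sha256sum {} + ) > "$2"
}

# verify_manifest DIR MANIFEST: non-zero exit if a file changed or is missing
verify_manifest() {
    ( cd "$1" && sha256sum -c ) < "$2" > /dev/null
}

# Example:
#   make_manifest /path/to/share /path/to/share.sha256
#   verify_manifest /path/to/backup-copy /path/to/share.sha256 && echo "backup matches"
```

A manifest like this detects silent corruption or missing files in a copy, but it is not a backup by itself.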

Disaster Recovery and High Availability are, yet again, completely different things. Take the example of an imaginary, very resilient backup that guarantees data integrity. It may let you recover specific files with absolute certainty, which would make it an amazing backup solution, but it may be a pain to recover all data at once, or take a huge amount of time to complete, which would make it a terrible solution for DR. And a backup has nothing to do with HA.

In your case, based on the logs you showed, as soon as mdadm starts assembling the second sub-array, the kernel spews a lot of call traces. This is exactly the type of situation that RAID redundancy can't protect you against.

I do not know what is happening; someone would need to investigate directly on the machine to understand it and, if possible, explain it to you.

I know I'm not solving your problem, but I hope this helps you understand the situation anyway.