ReadyNAS RN104 Dead Message

I have had no problems with my ReadyNAS RN104 for 7+ years in RAID 5, when a few days ago I got this message: "Disk in channel 4 (Internal) changed state from ONLINE to FAILED." I had been running with 4x 4TB WD drives.

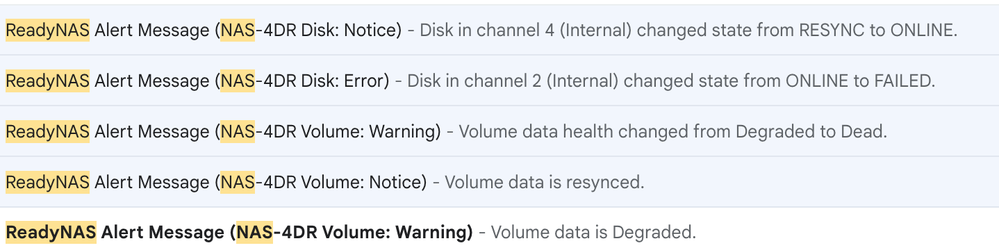

I hotswapped that drive in bay 4 with a new 6TB drive in the same WD drive family and after a few days, the resync seemed to complete. Last I looked it was at 85%, but the same night, I got FIVE messages all in a row, all within 6 seconds of each other (bottom message was first):

This seems to suggest that immediately after a bay 4 failure, I have a bay 2 failure, all without any prior notice.

I went into the admin and while I could access some volumes, other critical volumes seemed to have no files.

At this point, I had 4 drives in the unit:

Bay 1: Original 4TB

Bay 2: Original 4TB

Bay 3: Original 4TB

Bay 4: New 6TB that presumably had been resynced (?)

Outside the unit is the old Bay 4 4TB drive

I pulled out drives 1-3 and copied all three to three new 6tb drives.

I ALSO copied the Old 4TB drive to a new 6TB drive

There were no errors whatsoever. The four drives all copied (using dd) without any issue whatsoever.

I have a total of NINE hard drives now:

All 4 original 4TBs and their copies onto 6TBs

A 6TB drive that was in Bay 4, after resync, before I shut down the machine to pull the drives.

I'm trying to figure out next steps and would deeply appreciate some guidance:

1) Is it possible that I have a bad power supply? This all happened after my lights flickered a number of times. If the psu were bad, could that give off the failures that I saw (first disk 4 and then disk 2)?

2) If I were to re-install drives, I would reinstall the 6tb copies of the first 3 drives, but WHAT drive should go in bay 4? Would it be the 6tb that was in there when the unit resynced, or the 6tb that now has the copied data from the "failed" 4tb that I pulled out of the unit?

3) Does it make sense for me to find some other 4-bay readynas and try to install the drives in that one, on the off-chance that this happened because the ReadyNas itself is bad (and the drives are fine)? These are no longer made, so is my only option a used ebay readynas RN1x4, RN2x4, RN3x4?

4) Is it possible/likely that this is all pointless because (a) when I went to access the NAS after the "dead" email, I was unable to access certain directories, or (b) I got the "dead" message, so my data is already gone?

Thanks very much in advance!

Re: ReadyNAS RN104 Dead Message

@rxmoss wrote:

I went into the admin and while I could access some volumes, other critical volumes seemed to have no files.

Not sure what you mean here, because it sounds like you only have one volume. Do you mean shares?

@rxmoss wrote:

I'm trying to figure out next steps and would deeply appreciate some guidance:

I would start by putting in the cloned 6 TB drives in slots 1-3 (leaving slot 4 empty) with the NAS powered down. Then try booting up read-only, and see if the volume mounts. It will show degraded if it does.

Re: ReadyNAS RN104 Dead Message

Thanks very much for the reply.

I am sure I am using the wrong terminology, but yes, when I went into the admin, I could see the various folders (shares?) I created long ago, but some subfolders were empty and void of content.

Thanks for your suggestion re volumes 1-3 with the cloned drives. I will try that.

Is there any reason to believe that this is a hardware and/or power supply problem? Given that the drives seem to be fine, I'm trying to figure out why readynas would have given me a drive 4 error, followed by a drive 2 error.

Re: ReadyNAS RN104 Dead Message

@rxmoss wrote:

I am sure I am using the wrong terminology, but yes, when I went into the admin, I could see the various folders (shares?) I created long ago, but some subfolders were empty and void of content.

To clarify the terminology: You had one RAID-5 volume (likely called data) comprised of four disks. You also had shares (folders) on the volume that you created with the NAS admin ui.

Keeping this straight will be helpful as you work to recover the data, as miscommunications could make things even more difficult.

@rxmoss wrote:

Is there any reason to believe that this is a hardware and/or power supply problem? Given that the drives seem to be fine, I'm trying to figure out why readynas would have given me a drive 4 error, followed by a drive 2 error.

It's hard to rule out the power supply, but I am thinking there might have been a read error on disk 2. That would have aborted the resync, and left you with a failed volume. I've seen that scenario here before.

That is one rationale for suggesting using the three cloned disks, instead of the original three. I'm suggesting leaving out the new disk 4, because we don't know if the sync actually fully completed on the new drive. Booting read-only will keep the system from changing anything on the volume for now.

It might also be useful to download/install RAIDar on a PC.

Re: ReadyNAS RN104 Dead Message

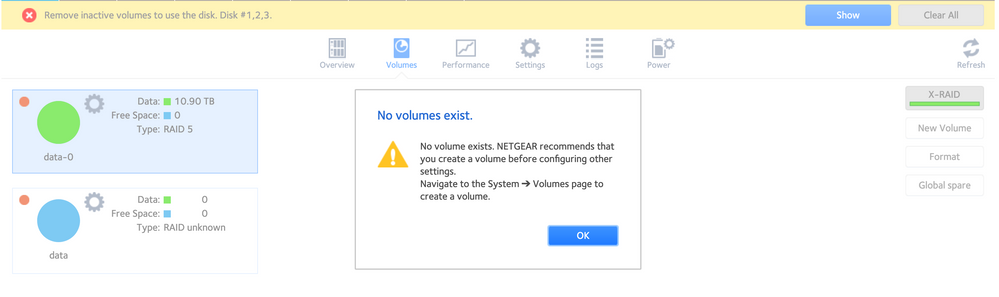

I booted my three 6tb cloned drives in read-only mode and I see the following. Am I supposed to do something else or does this indicate bad news?

Re: ReadyNAS RN104 Dead Message

@rxmoss wrote:

I booted my three 6tb cloned drives in read-only mode and I see the following. Am I supposed to do something else or does this indicate bad news?

Not good news, but potentially fixable. You definitely don't want to create a new volume.

Try downloading the full log zip file. There's a lot in there - if you want help analyzing it, you can put the full zip into cloud storage (dropbox, google drive, etc), and send me a private message with a download link. Send the PM using the envelope icon in the upper right of the forum page. Don't post the log zip publicly.

Do you have any experience with the linux command line?

I'm asking because there are two main options to pursue:

- try to force the volume to mount in the NAS

- use RAID recovery software with BTRFS support (ex: ReclaiMe) on a Windows PC.

The first option requires technical skills and use of ssh/linux command line, the second option will incur some cost.

Re: ReadyNAS RN104 Dead Message

Thank you--I have PM'd you with a link to the log file.

The NAS was used with a Mac, I have a Mac and have used Terminal to some degree.

I also have access to a Linux PC and have some rudimentary command line experience, but my experience is thin. Considering that I have the original 3 4TB drives (and the 4th) that seemed to have produced error-free copies, I can presumably re-copy them if my linux "experience" does more harm than good to the 3 6TBs I have in the NAS now.

At this point, you don't think there is any use in adding back the 4th drive to the NAS, right? Either the 6tb or the 4tb?

Re: ReadyNAS RN104 Dead Message

@rxmoss wrote:

At this point, you don't think there is any use in adding back the 4th drive to the NAS, right? Either the 6tb or the 4tb?

First, here is what I am seeing in your logs.

[Mon Mar 20 05:13:12 2023] md: md127 stopped.

[Mon Mar 20 05:13:12 2023] md: bind sdb3

[Mon Mar 20 05:13:12 2023] md: bind sdc3

[Mon Mar 20 05:13:12 2023] md: bind sda3

[Mon Mar 20 05:13:12 2023] md: kicking non-fresh sdb3 from array!

[Mon Mar 20 05:13:12 2023] md: unbind sdb3

[Mon Mar 20 05:13:12 2023] md: export_rdev(sdb3)

[Mon Mar 20 05:13:12 2023] md/raid:md127: device sda3 operational as raid disk 1

[Mon Mar 20 05:13:12 2023] md/raid:md127: device sdc3 operational as raid disk 3

[Mon Mar 20 05:13:12 2023] md/raid:md127: allocated 4294kB

[Mon Mar 20 05:13:12 2023] md/raid:md127: not enough operational devices (2/4 failed)

RAID maintains an event counter on each disk, and if a disk's counter lags too far behind the others, that disk isn't included in the array (this basically detects cached writes that were never written to the disk). That's what happened in your case with disk 2 (sdb).
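As a rough illustration of reading that log excerpt, the sketch below pulls out which member was kicked and how many members md considered failed. The function name `summarize_md_log` and the regular expressions are illustrative assumptions written against the lines quoted above; they are not part of any ReadyNAS or mdadm tool.

```python
import re

# Kernel log excerpt copied from the lines quoted above.
LOG = """\
[Mon Mar 20 05:13:12 2023] md: bind sdb3
[Mon Mar 20 05:13:12 2023] md: bind sdc3
[Mon Mar 20 05:13:12 2023] md: bind sda3
[Mon Mar 20 05:13:12 2023] md: kicking non-fresh sdb3 from array!
[Mon Mar 20 05:13:12 2023] md/raid:md127: device sda3 operational as raid disk 1
[Mon Mar 20 05:13:12 2023] md/raid:md127: device sdc3 operational as raid disk 3
[Mon Mar 20 05:13:12 2023] md/raid:md127: not enough operational devices (2/4 failed)
"""


def summarize_md_log(text):
    """Return (kicked, operational, failed, total) parsed from md log lines."""
    kicked = re.findall(r"kicking non-fresh (\w+) from array", text)
    operational = re.findall(r"device (\w+) operational as raid disk \d+", text)
    match = re.search(r"not enough operational devices \((\d+)/(\d+) failed\)", text)
    if match:
        failed, total = int(match.group(1)), int(match.group(2))
    else:
        failed, total = None, None
    return kicked, operational, failed, total


kicked, operational, failed, total = summarize_md_log(LOG)

# RAID 5 tolerates exactly one missing member, so two failures stop the array.
can_start = failed is not None and failed <= 1

print("kicked:", kicked)
print("operational:", operational)
print("failed/total:", failed, total, "can start:", can_start)
```

Here one member (slot 4) is simply absent and a second (sdb3) was kicked as non-fresh, which is why the array refuses to start even though every disk is healthy.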

As you'd expect all disks are healthy (since they are cloned with no errors).

I don't think trying with disk 4 will help, but it wouldn't hurt to try if you boot read-only again. Insert the disk with the NAS powered down. It's not clear which of the four disks is more likely to work.

More likely, you'd need to try forcing disk 2 into the array (with only disks 1-3 inserted). The relevant command would be

mdadm --assemble --really-force /dev/md127 --verbose /dev/sd[abcd]3

You'd do this logging in as root, using the NAS admin password. SSH needs to be enabled from system->settings->services. Enabling ssh might fail because you have no volume, and if it does, we'd need to access the NAS via tech support mode. So post back if that is needed.

If md127 is successfully assembled, then just reboot the NAS, and the volume should mount (degraded). You'd then need to try to add the 6 TB drive again (reformatting it in the NAS to start the process).

There could be some corruption in the files (or some folders) due to the missed writes. If you see much of this, you could still pursue data recovery using the original disks.

Re: ReadyNAS RN104 Dead Message

@StephenB wrote:

You'd do this logging in as root, using the NAS admin password. SSH needs to be enabled from system->settings->services. Enabling ssh might fail because you have no volume, and if it does, we'd need to access the NAS via tech support mode. So post back if that is needed.

Unfortunately, when I tried to enable SSH, I got an error: Unable to start or modify service.

Do you happen to have instructions for how I can enable it in tech support mode?

Once I do that, I should be able to ssh into the device and try the command you have suggested in the earlier post.

Thank you!!

Re: ReadyNAS RN104 Dead Message

Update: I'm back up and running with degraded data--THANK YOU THANK YOU!

I booted in tech support mode, used telnet to log into the device. Logged in using the default p/w found online.

Ran the command you suggested, got the code below, then rebooted and I have the degraded message and my data appears to be intact. THANK YOU!!!

# mdadm --assemble --really-force /dev/md127 --verbose /dev/sd[abcd]3

mdadm: looking for devices for /dev/md127

mdadm: /dev/sda3 is identified as a member of /dev/md127, slot 1.

mdadm: /dev/sdb3 is identified as a member of /dev/md127, slot 2.

mdadm: /dev/sdc3 is identified as a member of /dev/md127, slot 3.

mdadm: forcing event count in /dev/sdb3(2) from 42274 upto 43022

mdadm: clearing FAULTY flag for device 1 in /dev/md127 for /dev/sdb3

mdadm: Marking array /dev/md127 as 'clean'

mdadm: no uptodate device for slot 0 of /dev/md127

mdadm: added /dev/sdb3 to /dev/md127 as 2

mdadm: added /dev/sdc3 to /dev/md127 as 3

mdadm: added /dev/sda3 to /dev/md127 as 1

mdadm: /dev/md127 assembled from 3 drives - not enough to start the array.

Re: ReadyNAS RN104 Dead Message

@rxmoss wrote:

Ran the command you suggested, got the code below, then rebooted and I have the degraded message and my data appears to be intact. THANK YOU!!!

Glad I could help.

The array should expand after the last disk resyncs - let us know (either way) if that happens.

Re: ReadyNAS RN104 Dead Message

At long last, the resync is complete and my ReadyNAS is healthy again with four 6TB drives--thank you!

It does not look like the volume expanded, however. It is still showing the same total capacity as it did when I had the four 4TB drives installed.

Is there something I have to do to force it to expand and gain the extra space from the 24TB I now have?

Re: ReadyNAS RN104 Dead Message

@rxmoss wrote:

It does not look like the volume expanded, however. It is still showing the same total capacity as it did when I had the four 4TB drives installed.

Is there something I have to do to force it to expand and gain the extra space from the 24TB I now have?

4x6 TB should be giving you an 18 TB (~16.37 TiB) volume size. So double-check that.
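The arithmetic behind those figures can be sketched as follows. This is a simplified estimate that ignores the small OS and swap partitions on each disk; `raid5_usable_bytes` is an illustrative helper name, not a ReadyNAS function.

```python
TB = 10**12   # decimal terabyte, as printed on drive labels
TIB = 2**40   # binary tebibyte


def raid5_usable_bytes(disk_count, disk_bytes):
    """RAID 5 spends one disk's worth of space on parity."""
    if disk_count < 3:
        raise ValueError("RAID 5 needs at least three disks")
    return (disk_count - 1) * disk_bytes


for size_tb in (4, 6):
    usable = raid5_usable_bytes(4, size_tb * TB)
    print(f"4 x {size_tb} TB -> {usable / TB:.0f} TB = {usable / TIB:.2f} TiB")
```

The 4 TB case works out to roughly 10.9 TiB, so a volume still reporting that size is laid out as if the disks were 4 TB each.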

Not sure why it didn't expand, though I haven't done much with disk cloning.

I'd try a reboot first, and see if that triggers any expansion.

If that doesn't do it, then one option would be to resync the three clones - removing one, formatting it (or better - deleting the partitions) on a PC, and then adding it back. The volume should expand by 2 TB after each disk fully resyncs. So you could try this with one of the clones. Note you will see two resync operations - one for the original RAID group, followed by a second that does the expansion. It's important not to do the second disk until the first resync has finished.

It's also possible to use ssh, though the commands are pretty advanced, and getting the typing wrong could result in data loss. @Sandshark has experimented some with this, so might have a suggestion there.

Re: ReadyNAS RN104 Dead Message

The first thing to try in SSH is volume_util -e xraid. That's a ReadyNAS-specific command, though I'm not sure when it is intended to be used. It may be called by other parts of the OS when you insert a new drive.

Re: ReadyNAS RN104 Dead Message

I have almost the same problem as rxmoss has had, but different enough that I don't think his solution will be my solution. I'm hoping that you can help me out the same as you've helped him.

I have 4 drives in a ReadyNAS 104. One of the drives went out of sync, and when it tried to re-sync, another drive had issues and it declared itself as dead. (This was a while back but I shelved it until I stumbled on this forum). Booting it now, all the drives list themselves as "healthy", but there are no shares / volumes. Is there a way I can force them to re-mount as a volume so that I can recover some of the files? I'm not overly concerned if some files are corrupt because of the failed sync, as long as I can get old photos back.

Cheers 🙂

Re: ReadyNAS RN104 Dead Message

Thanks for all of the advice but I'm still stuck at 10.9TB of storage.

I rebooted--no change.

I logged in ssh and ran the command "volume_util -e xraid"

I got a message "expanding pools..." for an instant and that was it.

@StephenB-- You wrote: "If that doesn't do it, then one option would be to resync the three clones - removing one, formatting it (or better - deleting the partitions) on a PC, and then adding it back. The volume should expand by 2 TB after each disk fully resyncs. So you could try this with one of the clones. Note you will see two resync operations - one for original RAID group, followed by a second that does the expansion. It's important not to do the second disk until the first resync finished."

Unfortunately I don't understand the terminology here. When you suggest I "resync the three clones," are you suggesting I remove one 6TB drive at a time, wiping it, and then reinstalling that same drive and allowing it to rebuild? Or when you refer to "one of the clones" are you referring to my set of 4TB drives that I pulled?

Needless to say, if there's an easier way than rebuilding one drive by one (which took several days just to resync one drive), I'd much prefer that...but beggars can't be choosers and I deeply appreciate where I am even now with 10.9TB.

Thanks again!

Re: ReadyNAS RN104 Dead Message

@rxmoss wrote:

When you suggest I "resync the three clones," are you suggesting I remove one 6TB drive at a time, wiping it, and then reinstalling that same drive and allowing it to rebuild?

Yes.

@rxmoss wrote:

Needless to say, if there's an easier way than rebuilding one drive by one (which took several days just to resync one drive), I'd much prefer that...

SSH would be faster, but IMO not easier.

You'd need to

- make sure the disk partitions were correctly sized and matched on all four disks.

- sync the RAID array to use the full partition size

- expand the BTRFS file system to use the full RAID array.

If you PM me a full log zip, I can take a look at the partitions. That would be good to do before you begin.

Re: ReadyNAS RN104 Dead Message

@StephenB wrote:

If you PM me a full log zip, I can take a look at the partitions. That would be good to do before you begin.

Partition sizes look the same for all the disks.

8 0 5860522584 sda

8 1 4194304 sda1

8 2 524288 sda2

8 3 3902297912 sda3

8 16 5860522584 sdb

8 17 4194304 sdb1

8 18 524288 sdb2

8 19 3902297912 sdb3

8 32 5860522584 sdc

8 33 4194304 sdc1

8 34 524288 sdc2

8 35 3902297912 sdc3

8 48 5860522584 sdd

8 49 4194304 sdd1

8 50 524288 sdd2

8 51 3902297912 sdd3

The expansion error is:

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Found pool data, RAID data-0 to be expandable (using concatenation)

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: 3 disks expandable in data

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdd is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdc is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdb is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sda is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdd is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdc is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Preparing disk 0:1 (sdc,WDC_WD62PURZ-85B3AY0,5589GB)...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: prepare_disk: Prepare disk 0:1 (sdc,WDC_WD62PURZ-85B3AY0,5589GB) with partition size 3907012048

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Partitioning failed! [4]

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: output: Warning: Setting alignment to a value that does not match the disk's;physical block size! Performance degradation may result!;Physical block size = 4096;Logical block size = 512;Optimal alignment = 8 or multiples thereof.;Could not create partition 4 from 7814033072 to 11721045119;Could not change partition 4's type code to fd00!;Error encountered; not saving changes.;

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: add_partition: Input/output error [5]

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: prepare_disk failed: Input/output error

I'm not sure what to do about this error (or whether it is limited to sdc, or whether that is simply the first disk on which the NAS tried to create the new partition). Also, I'm a bit puzzled about why it only detected the cloned disks as expandable ("3 disks expandable in data").
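For reference, the sector range in the failed request is consistent with the partition size that rn-expand logged. The sketch below checks that and estimates how much space a second RAID 5 layer built from such partitions would add. The member counts are illustrative assumptions (the log only reports 3 disks as expandable), and `sectors_in_range` and `raid5_layer_bytes` are hypothetical helper names.

```python
SECTOR_BYTES = 512  # logical block size reported in the sgdisk warning
TB = 10**12


def sectors_in_range(first, last):
    """Number of sectors in an inclusive [first, last] range."""
    return last - first + 1


def raid5_layer_bytes(members, member_sectors):
    """Data capacity of a RAID 5 group: one member's worth goes to parity."""
    return (members - 1) * member_sectors * SECTOR_BYTES


# Range taken from the "Could not create partition 4" log line.
part4_sectors = sectors_in_range(7814033072, 11721045119)
part4_bytes = part4_sectors * SECTOR_BYTES

print(part4_sectors)                      # compare with "partition size" in the log
print(round(part4_bytes / TB, 2), "TB per new partition")
for members in (3, 4):
    extra = raid5_layer_bytes(members, part4_sectors)
    print(members, "members ->", round(extra / TB, 2), "TB extra")
```

With all four disks participating, the added layer would be about 6 TB, which together with the existing 12 TB gives the 18 TB expected for 4x6 TB.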

I am thinking that the process I suggested (resyncing the cloned disks one at a time) likely won't work, given this error.

@Sandshark might have some thoughts on how to investigate this with ssh.

Re: ReadyNAS RN104 Dead Message

OK, so I'm not entirely sure which part of the error message is the main issue, but I suspect it's the boundary issue. If it can't even create the partition, then the other error is sure to follow.

Warning: Setting alignment to a value that does not match the disk's;physical block size! Performance degradation may result!;Physical block size = 4096;Logical block size = 512;Optimal alignment = 8 or multiples thereof.;Could not create partition 4 from 7814033072 to 11721045119;Could not change partition 4's type code to fd00!;Error encountered; not saving changes.;

I believe the problem here is that the WDC_WD62PURZ-85B3AY0 isn't really 512e, though the NAS believes it is. No WD documentation says they are; they are simply labeled "advanced format", which usually means no 512e if 512e isn't expressly listed. The other drives in the NAS must be either 512N or 512e, and the NAS wants to match the partition size to properly expand. But it can't ignore the warning about optimal alignment and create a non-optimized partition if the drives are not actually 512e, no matter what tool is used.

fdisk (standard Linux) has long been restricted to the 4K alignment specified on a 4KN drive with or without 512e. But the ReadyNAS has still been able to create them unaligned (and usually does, as best I can tell). I'm not sure what it uses to do that and why it's not doing so here if the drive really is 512e. When I manually expanded my FlexRAID system (How-to-do-incremental-vertical-expansion-in-FlexRAID-mode), I ended up having to install and use parted to create new partitions that started where the initial ones the OS created ended. But my drives were 512e.

The WD Purple drives are not a good choice for a NAS. They are optimized for fast, continuous writes where a NAS is typically used for reads more than writes. Since restricting to 4KN sectoring would help in optimizing the writes and the WD documentation doesn't mention 512e, they likely are not 512e. Why the NAS thinks they are, I don't know.

It is my understanding that the ReadyNAS can handle 4KN drives. If you start with at least one 4KN, then surely the NAS sees that and aligns the partitions accordingly. But if you start with all 512N or 512e drives, the NAS may partition them such that a 4KN drive's partitions won't be the same size. I believe MDADM can work around having partitions of slightly different sizes, though I've not tried it myself or observed it on a ReadyNAS formatted by XRAID. But I think it's not attempting to do so because it believes the drive is 512e.

Now, at some point in the updates, the OS may have started using all 4K-aligned partitions regardless. But on my NAS, the initial volume was made under OS 9.5.something, and they are not 4K aligned. If your volume was also made under a much older OS version, that may play a part in the issue.

For those unfamiliar with the terms:

512N = native 512-byte sectors. Old school: storage on the drive is in 512-byte "packets".

4KN = native 4K-byte sectors. Data is stored in 4K packets.

512e = 512-byte sector emulation on a drive with 4K physical sectors. It allows the drive to be written to in 512-byte packets, though the data is still stored in 4K packets, at the cost of some speed from multi-pass writing of the "odd" sectors (those not a multiple of 4K). Such a drive can thus also be non-optimally formatted at (emulated) 512-byte boundaries.
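To make the 512e penalty concrete, here is a minimal sketch that counts how many 4K physical sectors a write touches and how many of them are only partly covered. Partly covered sectors are the ones a 512e drive would have to read, modify, and write back. The function name and the example LBAs are illustrative assumptions.

```python
LOGICAL = 512                 # bytes per emulated (logical) sector
PHYSICAL = 4096               # bytes per physical sector
RATIO = PHYSICAL // LOGICAL   # 8 logical sectors per physical sector


def physical_sectors_touched(start_lba, count):
    """Return (touched, partial) for a write of `count` logical sectors.

    `touched` is how many physical sectors the write overlaps; `partial`
    is how many of those are only partly covered by the write.
    """
    if count <= 0:
        raise ValueError("count must be positive")
    end_lba = start_lba + count           # exclusive end
    first_phys = start_lba // RATIO
    last_phys = (end_lba - 1) // RATIO
    touched = last_phys - first_phys + 1

    head_partial = start_lba % RATIO != 0
    tail_partial = end_lba % RATIO != 0
    if first_phys == last_phys:
        partial = int(head_partial or tail_partial)
    else:
        partial = int(head_partial) + int(tail_partial)
    return touched, partial


print(physical_sectors_touched(64, 8))   # aligned 4K write
print(physical_sectors_touched(63, 8))   # same size, shifted by one sector
```

An aligned 4K write maps onto exactly one physical sector, while the same write shifted by one logical sector straddles two physical sectors and covers neither completely.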

Re: ReadyNAS RN104 Dead Message

I have verified that adding a 4KN drive to a unit that previously contained all 512N or 512e drives works fine. I don't know about replacing one. But the partitions actually do appear 4K aligned in my system, so I'm not sure why fdisk thought they weren't on my system or yours.

Can you post the results of fdisk -l ?

Re: ReadyNAS RN104 Dead Message

Thanks very much for the help! I know that purple drives may not be optimal, but it's what I can get and I have been using them for 6 yrs or so now in the NAS.

Here's the info you requested:

Disk /dev/mtdblock0: 1.5 MiB, 1572864 bytes, 3072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mtdblock1: 512 KiB, 524288 bytes, 1024 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mtdblock2: 6 MiB, 6291456 bytes, 12288 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mtdblock3: 4 MiB, 4194304 bytes, 8192 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mtdblock4: 116 MiB, 121634816 bytes, 237568 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: ADAF7F6E-958D-4AC6-96D1-7A50E451A1FF

Device Start End Sectors Size Type

/dev/sda1 64 8388671 8388608 4G Linux RAID

/dev/sda2 8388672 9437247 1048576 512M Linux RAID

/dev/sda3 9437248 7814033071 7804595824 3.6T Linux RAID

Disk /dev/sdb: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: CB953AE0-8CB9-4E19-9153-3D70439C8A55

Device Start End Sectors Size Type

/dev/sdb1 64 8388671 8388608 4G Linux RAID

/dev/sdb2 8388672 9437247 1048576 512M Linux RAID

/dev/sdb3 9437248 7814033071 7804595824 3.6T Linux RAID

Disk /dev/sdc: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: 992BD3A0-4137-40CA-A075-CA589E9D4AF3

Device Start End Sectors Size Type

/dev/sdc1 64 8388671 8388608 4G Linux RAID

/dev/sdc2 8388672 9437247 1048576 512M Linux RAID

/dev/sdc3 9437248 7814033071 7804595824 3.6T Linux RAID

Disk /dev/sdd: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: 5B61EDD8-CC02-4206-866E-3A36BBE0DFEF

Device Start End Sectors Size Type

/dev/sdd1 64 8388671 8388608 4G Linux RAID

/dev/sdd2 8388672 9437247 1048576 512M Linux RAID

/dev/sdd3 9437248 7814033071 7804595824 3.6T Linux RAID

Disk /dev/md0: 4 GiB, 4290772992 bytes, 8380416 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/md1: 1020 MiB, 1069547520 bytes, 2088960 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 524288 bytes / 1048576 bytes

Disk /dev/md127: 10.9 TiB, 11987456360448 bytes, 23413000704 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 65536 bytes / 196608 bytes

Re: ReadyNAS RN104 Dead Message

@rxmoss is having trouble with things getting caught in the SPAM filter, so he sent the fdisk -l listing. I'm only posting the part for sda, as all of the drives are the same.

Disk /dev/sda: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: ADAF7F6E-958D-4AC6-96D1-7A50E451A1FF

Device Start End Sectors Size Type

/dev/sda1 64 8388671 8388608 4G Linux RAID

/dev/sda2 8388672 9437247 1048576 512M Linux RAID

/dev/sda3 9437248 7814033071 7804595824 3.6T Linux RAID

So, all drives do appear to be 512N or 512e, and they all have 11721045168 sectors, so it should have no problem creating a partition that goes out to 11721045119. Furthermore, 11721045119 is the normal highest sector used by a ReadyNAS on a 6TB drive. In other words: nothing unusual here.

So, it sounds to me like a drive problem that's preventing the partition from being made. Maybe a hardware issue, or maybe some "junk" put on by the cloning process that's blocking it.
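The bounds and alignment reasoning in this post can be checked with a few lines of arithmetic. The sketch below assumes a standard GPT layout on 512-byte logical sectors, where the backup partition entries and header occupy the last 33 sectors of the disk; that layout is an assumption about these disks, not something shown in the logs.

```python
TOTAL_SECTORS = 11721045168   # from the fdisk -l listing
REQUESTED_LAST = 11721045119  # end sector in the failed partition 4 request
PART3_START = 9437248         # start of the existing data partition
PART4_START = 7814033072      # start of the partition the NAS tried to add

# Assumption: standard GPT, 32 sectors of backup entries + 1 backup header.
GPT_BACKUP_SECTORS = 33
last_usable = TOTAL_SECTORS - GPT_BACKUP_SECTORS - 1


def is_4k_aligned(lba, logical_per_physical=8):
    """True if a 512-byte LBA falls on a 4096-byte physical boundary."""
    return lba % logical_per_physical == 0


print("last usable LBA:", last_usable)
print("request fits:", REQUESTED_LAST <= last_usable)
print("partition 3 start aligned:", is_4k_aligned(PART3_START))
print("partition 4 start aligned:", is_4k_aligned(PART4_START))
```

Under that assumption the requested range fits on the disk and both start sectors sit on 4K boundaries, which supports the conclusion that the geometry itself is not the obstacle.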

Re: ReadyNAS RN104 Dead Message

@Sandshark wrote:

So, it sounds to me like a drive problem that's preventing the partition from being made. Maybe a hardware issue, or maybe some "junk" put on by the cloning process that's blocking it.

Looking at systemd-journal.log, there are actually two errors - one for sdc, and one for sdd:

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Trying auto-expand (in-place)

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Considering inplace auto-expansion for data

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sdd is expandable...

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sdc is expandable...

Mar 25 07:25:41 NAS-4DR systemd[1]: systemd-journald-audit.socket: Cannot add dependency job, ignoring: Unit systemd-journald-audit.socket is masked.

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sdb is expandable...

Mar 25 07:25:41 NAS-4DR systemd[1]: Started Radar Update Timer.

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sda is expandable...

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Found pool data, RAID data-0 to be fully expandable

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sdd is expandable...

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sdc is expandable...

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sdb is expandable...

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Checking if RAID disk sda is expandable...

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Expanding pool data, RAID data-0

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Expanding disk 0:0 (sdd,WDC_WD62PURZ-85B3AY0,5589GB) from 7804595824 to 11711607856

Mar 25 07:25:41 NAS-4DR apache2[2335]: [cgi:error] [pid 2335] [client 192.168.0.172:56570] AH01215: Connect rddclient failed: /frontview/lib/dbbroker.cgi, referer: http://192.168.0.181/admin/index.html

Mar 25 07:25:41 NAS-4DR readynasd[2342]: Could not create partition 3 from 9437248 to 11721045103

Mar 25 07:25:41 NAS-4DR readynasd[2342]: Could not change partition 3's type code to fd00!

Mar 25 07:25:41 NAS-4DR readynasd[2342]: Error encountered; not saving changes.

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: command failed [4]: /sbin/sgdisk -a1 -d3 -n3:9437248:11721045103 -t3:fd00 /dev/sdd >/dev/null

Mar 25 07:25:41 NAS-4DR rn-expand[2342]: Expansion failed for pool data [Invalid argument]

Mar 25 07:25:42 NAS-4DR apache2[2336]: [cgi:error] [pid 2336] [client 192.168.0.172:56571] AH01215: Connect rddclient failed: /frontview/lib/dbbroker.cgi, referer: http://192.168.0.181/admin/index.html

Mar 25 07:25:44 NAS-4DR apachectl[2536]: [Sat Mar 25 07:25:44.103953 2023] [so:warn] [pid 2583] AH01574: module cgi_module is already loaded, skipping

Mar 25 07:25:44 NAS-4DR apachectl[2536]: AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using fe80::eafc:afff:fee5:2261. Set the 'ServerName' directive globally to suppress this message

Mar 25 07:25:44 NAS-4DR systemd[1]: Reloaded The Apache HTTP Server.

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Trying auto-extend (grow onto additional disks)

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: auto_extend: Checking disk sdd...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: auto_extend: Checking disk sdc...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: auto_extend: Checking disk sdb...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: auto_extend: Checking disk sda...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Trying xraid-expand (tiered expansion)

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Considering X-RAID auto-expansion for data

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdd is expandable...

Mar 25 07:25:44 NAS-4DR systemd[1]: Started ReadyNAS System Daemon.

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdc is expandable...

Mar 25 07:25:44 NAS-4DR systemd[1]: Started ReadyNAS Backup Service.

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdb is expandable...

Mar 25 07:25:44 NAS-4DR systemd[1]: Starting MiniSSDPd...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sda is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Found pool data, RAID data-0 to be expandable (using concatenation)

Mar 25 07:25:44 NAS-4DR fvbackup-q[2589]: Start fvbackup-q

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: 3 disks expandable in data

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdd is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdc is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdb is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sda is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdd is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Checking if RAID disk sdc is expandable...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Preparing disk 0:1 (sdc,WDC_WD62PURZ-85B3AY0,5589GB)...

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: prepare_disk: Prepare disk 0:1 (sdc,WDC_WD62PURZ-85B3AY0,5589GB) with partition size 3907012048

Mar 25 07:25:44 NAS-4DR readynasd[2342]: ReadyNASOS background service started.

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: Partitioning failed! [4]

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: output: Warning: Setting alignment to a value that does not match the disk's;physical block size! Performance degradation may result!;Physical block size = 4096;Logical block size = 512;Optimal alignment = 8 or multiples thereof.;Could not create partition 4 from 7814033072 to 11721045119;Could not change partition 4's type code to fd00!;Error encountered; not saving changes.;

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: add_partition: Input/output error [5]

Mar 25 07:25:44 NAS-4DR rn-expand[2342]: prepare_disk failed: Input/output error
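The sector numbers in this log are internally consistent, which a few lines of Python can confirm. This is a minimal sketch: the ranges and sizes are copied from the log lines above and from the fdisk listing, and the helper name `sector_count` is only illustrative.

```python
# Sector ranges and sizes copied from the journal excerpt.
REQUESTS = [
    # (disk, partition, start, end, size reported by rn-expand)
    ("sdd", 3, 9437248, 11721045103, 11711607856),
    ("sdc", 4, 7814033072, 11721045119, 3907012048),
]

# End of the existing data partition (sda3 in the fdisk listing; the
# thread states that all four drives are partitioned identically).
EXISTING_P3_END = 7814033071


def sector_count(start: int, end: int) -> int:
    """Number of sectors in an inclusive start..end range."""
    return end - start + 1


for disk, part, start, end, logged in REQUESTS:
    computed = sector_count(start, end)
    print(f"{disk} partition {part}: {computed} sectors, "
          f"matches log: {computed == logged}, "
          f"start on a 4096-byte boundary: {start % 8 == 0}")

# The new partition 4 on sdc would begin right after partition 3 ends.
print("partition 4 follows partition 3:", REQUESTS[1][2] == EXISTING_P3_END + 1)
```

Both requests are self-consistent: on sdd the NAS tries to grow partition 3 in place, and on sdc it tries to add a partition 4 in the free space behind partition 3. The requested layout itself looks sane, so the failure is more likely to come from the disk or its existing partition table.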

The first error (sdd) is a failure to set the partition type to fd00.

I don't know why the NAS actually needs to do that. It could be useful to know what the partition type actually is on all 4 drives. fdisk won't list it, but gdisk will. So maybe let us see this output:

gdisk -l /dev/sda

gdisk -l /dev/sdb

gdisk -l /dev/sdc

gdisk -l /dev/sdd

Re: ReadyNAS RN104 Dead Message

gdisk is not standard in the OS. fdisk will show the partition type if you use it drive by drive (same as your commands for gdisk, just substituting fdisk). But since the other partitions are from a cloning process, I suspect they are right. The inability to set the partition type is undoubtedly just fallout: the partition creation failed, and the OS ignored that and tried to set the partition type anyway.

I'm not familiar enough with partition tables to know if there is something in a cloned partition table that makes you unable to expand beyond the size of the source drive. But that would make some sense, since it's affecting more than one drive.
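One mechanism that would fit this pattern, offered as a hypothesis rather than a diagnosis: a GPT header records the last sector that partitions may use, and a whole-disk copy carries that field over unchanged from the source disk. Below is a minimal sketch in Python of the resulting check. The 4TB sector count of 7814037168 is an assumption (a common size for 4TB drives, not reported in the thread), as is the standard 33-sector reserve for the backup GPT.

```python
# Assumption: sector count of the original 4TB drives (not in the thread).
SOURCE_4TB_SECTORS = 7814037168
# From the fdisk listing of the 6TB clones.
TARGET_6TB_SECTORS = 11721045168
# Assumption: standard backup GPT (32-sector entry array + 1-sector header).
BACKUP_GPT_SECTORS = 33

# End sectors the NAS asked for, taken from the journal log.
REQUESTED_ENDS = {"sdd partition 3": 11721045103, "sdc partition 4": 11721045119}


def last_usable_lba(total_sectors: int) -> int:
    """Value a GPT header would record as its last usable sector."""
    return total_sectors - 1 - BACKUP_GPT_SECTORS


def fits(end_sector: int, header_last_usable: int) -> bool:
    """A partition is only accepted if it ends at or before that value."""
    return end_sector <= header_last_usable


stale = last_usable_lba(SOURCE_4TB_SECTORS)  # header copied from the 4TB disk
fresh = last_usable_lba(TARGET_6TB_SECTORS)  # header written for the 6TB disk

for name, end in REQUESTED_ENDS.items():
    print(f"{name}: fits stale header: {fits(end, stale)}, "
          f"fits fresh header: {fits(end, fresh)}")
```

Under these assumptions both requests are rejected against the copied header and accepted against one sized for the 6TB disk. The existing partition 3, which ends at 7814033071, fits in either case, which matches the clones working at their original size. The "last usable sector" line in the gdisk output for each drive would show which value the clones actually carry.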

Re: ReadyNAS RN104 Dead Message

I sent over the gpart output to both @Sandshark and @StephenB. Having trouble posting the code publicly. Thank you both!