Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

Netgear ReadyNAS Pro 6 RNDP6000

RAID-5 (6 disks, 2 TB each)

FW 6.10.2

I got a checksum error message in the backup software.

I checked the disks with HDAT2 and found many bad sectors on one of them.

The Netgear GUI showed no errors.

I removed the bad disk.

With the array running degraded (one disk missing), I logged in as root over SSH and created a random file:

dd if=/dev/urandom of=Test.flie bs=64M count=32

Then I checked the file immediately:

md5sum Test.flie

594eacb844ae053ab8bccadb9f3e43b4 Test.flie

After waiting 10 minutes, I checked it again:

md5sum Test.flie

522c8afffd428e14b425d31d8b5d7f52 Test.flie

The checksums are not equal.

What happened?
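The manual test above (write a random file, hash it, wait, hash it again) can be scripted so that each read is timestamped and the loop stops at the first mismatch. This is a minimal POSIX sh sketch: `check_stable` and its arguments are hypothetical names, not part of ReadyNAS OS. Setting `DROP_CACHES=1` (root only) flushes the Linux page cache before each re-read. That forces the data to be fetched from the disks again instead of from RAM, which may help separate the two as possible causes.

```sh
# Hypothetical helper (not a ReadyNAS tool): hash FILE, then re-hash it
# ROUNDS times, PAUSE seconds apart, and stop at the first mismatch.
# Returns 0 if every digest matched, 1 on a change, 2 if FILE is unreadable.
check_stable() {
  file=$1
  rounds=${2:-5}
  pause=${3:-600}
  [ -r "$file" ] || return 2
  first=$(md5sum "$file" | cut -d' ' -f1)
  echo "$(date '+%F %T') round 0: $first"
  i=1
  while [ "$i" -le "$rounds" ]; do
    sleep "$pause"
    if [ "${DROP_CACHES:-0}" = 1 ]; then
      # Root only: write back dirty pages, then drop the page cache so the
      # next md5sum has to read the data from the disks again
      sync
      echo 3 > /proc/sys/vm/drop_caches
    fi
    current=$(md5sum "$file" | cut -d' ' -f1)
    echo "$(date '+%F %T') round $i: $current"
    if [ "$current" != "$first" ]; then
      echo "CHANGED: $file"
      return 1
    fi
    i=$((i + 1))
  done
  echo "STABLE: $file"
}
```

For example, `check_stable Test.flie 6 600` re-checks the file every 10 minutes for an hour.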

Accepted Solutions

@Roman304 wrote:

I have split the drives into separate JBOD volumes, one per drive. The checksum error occurs on volume RAID 4.

That's a clear indication that your issue is either linked to that disk or to that slot. The next step is to figure out which.

I suggest destroying RAID 1, 2, 5, and 6, and removing those disks. Then power down the NAS and swap the RAID 3 and RAID 4 disks. Power up and re-run the test on both volumes. That will tell you whether the problem is linked to the disk or to the slot.

If the problem disappears on both disks, then it could be power-related. You can confirm that by adding the removed disks back one at a time and seeing when the problem starts happening again.

All Replies

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

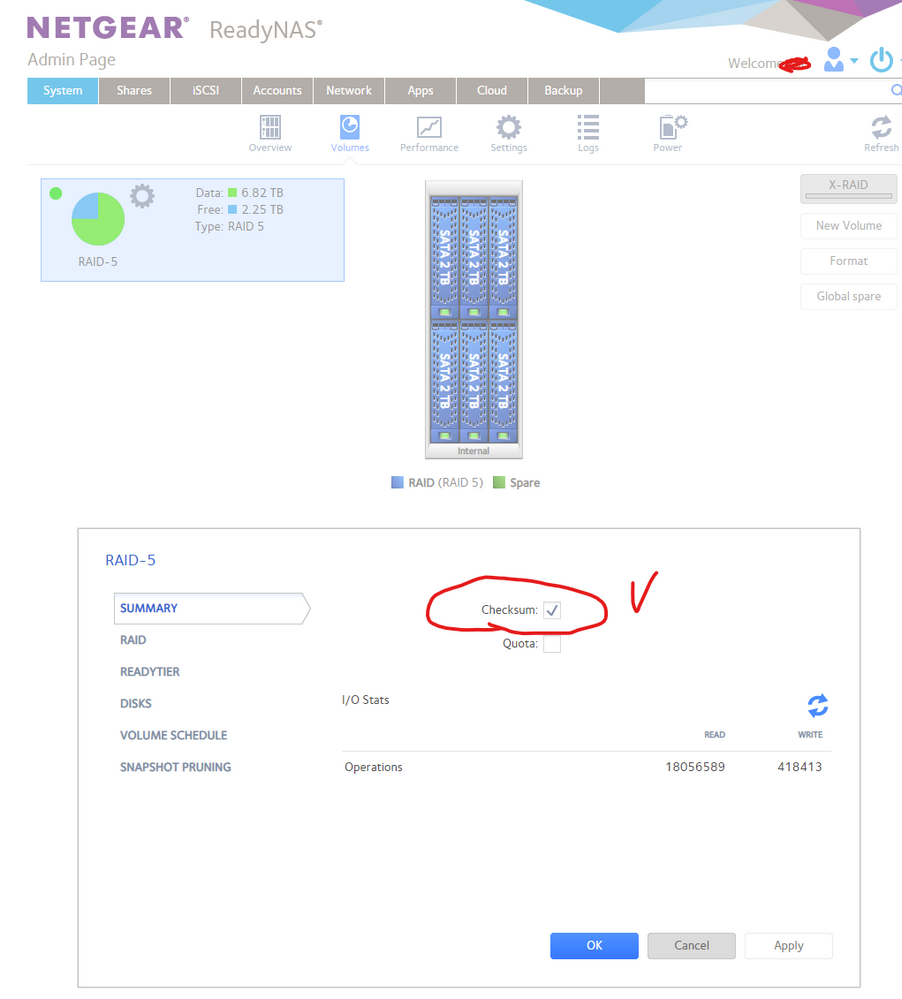

Did you have bit-rot protection enabled or disabled on the share?

A changing checksum on the test file would suggest bad RAM or a loose memory module as one possibility. Have you run the memory test from the boot menu?

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@Roman304 wrote:

Then I checked the file immediately:

md5sum Test.flie

594eacb844ae053ab8bccadb9f3e43b4 Test.flie

After waiting 10 minutes, I checked it again:

md5sum Test.flie

522c8afffd428e14b425d31d8b5d7f52 Test.flie

The checksums are not equal.

What happened?

Sounds like you might have more than one bad disk. I hope you have a backup.

I suggest downloading the full log zip file and then looking for errors around the time you created and checksummed the test file.

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

Bit-rot protection was disabled for all shares. The RAM was tested with MemTest86: no errors (7 passes).

I created the file from the ReadyNAS OS shell, directly on the /RAID-5 mount point.

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@Roman304 wrote:

Bit-rot protection was disabled for all shares.

I created the file from the ReadyNAS OS shell, directly on the /RAID-5 mount point.

I understood that from your commands.

Not sure what happened; of course md5sum should have generated the same result the second time. The volume is degraded, though, so any other disk errors can't be corrected by RAID parity.

So a read error or a file system error could account for it, which is why I suggested looking in the log zip for any errors that occurred while the commands were being executed. You could use journalctl instead if you prefer to work over ssh.

You might also try rebooting the NAS and seeing whether you can reproduce the error.

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

dmesg from boot shows no errors for the RAID-5 volume (/dev/md127):

root@NAS-2:~# dmesg | grep md0
[ 31.068901] md: md0 stopped.
[ 31.075222] md/raid1:md0: active with 6 out of 6 mirrors
[ 31.075307] md0: detected capacity change from 0 to 4290772992
[ 31.602526] BTRFS: device label 33ea55f9:root devid 1 transid 467355 /dev/md0
[ 31.603155] BTRFS info (device md0): has skinny extents
[ 33.369153] BTRFS warning (device md0): csum failed ino 117932 off 2420736 csum 3710567192 expected csum 4039208015
[ 33.378234] BTRFS warning (device md0): csum failed ino 117932 off 3203072 csum 2302637777 expected csum 2765412742
[ 39.711268] BTRFS warning (device md0): csum failed ino 26800 off 2105344 csum 3723732640 expected csum 4129019946
root@NAS-2:~# dmesg | grep md1
[ 31.100266] md: md1 stopped.
[ 31.108850] md/raid10:md1: active with 6 out of 6 devices
[ 31.108933] md1: detected capacity change from 0 to 1604321280
[ 34.218589] md: md127 stopped.
[ 34.246979] md/raid:md127: device sda3 operational as raid disk 0
[ 34.246985] md/raid:md127: device sdf3 operational as raid disk 5
[ 34.246988] md/raid:md127: device sde3 operational as raid disk 4
[ 34.246990] md/raid:md127: device sdd3 operational as raid disk 3
[ 34.246993] md/raid:md127: device sdc3 operational as raid disk 2
[ 34.246996] md/raid:md127: device sdb3 operational as raid disk 1
[ 34.247777] md/raid:md127: allocated 6474kB
[ 34.247926] md/raid:md127: raid level 5 active with 6 out of 6 devices, algorithm 2
[ 34.248112] md127: detected capacity change from 0 to 9977158696960
[ 34.658138] Adding 1566716k swap on /dev/md1. Priority:-1 extents:1 across:1566716k
[ 34.670593] BTRFS: device label 33ea55f9:RAID-5 devid 1 transid 27093 /dev/md127
[ 34.980123] BTRFS info (device md127): has skinny extents
root@NAS-2:~# dmesg | grep md127
[ 34.218589] md: md127 stopped.
[ 34.246979] md/raid:md127: device sda3 operational as raid disk 0
[ 34.246985] md/raid:md127: device sdf3 operational as raid disk 5
[ 34.246988] md/raid:md127: device sde3 operational as raid disk 4
[ 34.246990] md/raid:md127: device sdd3 operational as raid disk 3
[ 34.246993] md/raid:md127: device sdc3 operational as raid disk 2
[ 34.246996] md/raid:md127: device sdb3 operational as raid disk 1
[ 34.247777] md/raid:md127: allocated 6474kB
[ 34.247926] md/raid:md127: raid level 5 active with 6 out of 6 devices, algorithm 2
[ 34.248112] md127: detected capacity change from 0 to 9977158696960
[ 34.670593] BTRFS: device label 33ea55f9:RAID-5 devid 1 transid 27093 /dev/md127
[ 34.980123] BTRFS info (device md127): has skinny extents
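The `csum failed` warnings above are all on `md0` (the OS partition), not on the RAID-5 data volume. When such warnings pile up, tallying them per device and inode shows how many distinct files are affected. This is a rough sketch that assumes the kernel message format shown above; `csum_summary` is a hypothetical helper. On the root filesystem, an inode number can then be mapped to a path with `btrfs inspect-internal inode-resolve <ino> /`. The journal excerpts later in this thread appear to tie the inode 26800 warnings to `//var/readynasd/db.sq3`.

```sh
# Hypothetical helper: tally BTRFS "csum failed" warnings per device and inode.
# Reads kernel log lines on stdin and prints "COUNT DEVICE INODE", most frequent first.
csum_summary() {
  grep -o 'BTRFS warning (device [^)]*): csum failed ino [0-9]*' |
    sed 's/.*(device \([^)]*\)): csum failed ino \([0-9]*\)/\1 \2/' |
    sort | uniq -c | sort -rn |
    awk '{ print $1, $2, $3 }'
}
```

For example, `dmesg | csum_summary` or `csum_summary < kernel.log`.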

Which log files do you recommend checking?

There are a lot of them in the zip.

The problem is not solved after a reboot:

root@NAS-2:/RAID-5/TEST-FILE# dd if=/dev/urandom of=Test.flie bs=64M count=32
dd: warning: partial read (33554431 bytes); suggest iflag=fullblock
0+32 records in
0+32 records out
1073741792 bytes (1.1 GB, 1.0 GiB) copied, 104.607 s, 10.3 MB/s
root@NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
5c07ebd42dc2af232c0431d3b86cab7d Test.flie
root@NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
5c07ebd42dc2af232c0431d3b86cab7d Test.flie
root@NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
433c2099b00285f11ed88d0c7d580f32 Test.flie
root@NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
38b511f0129c3ac888f19a430b3938c8 Test.flie
root@NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
38b511f0129c3ac888f19a430b3938c8 Test.flie
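The `partial read` warning explains the odd file size. With `bs=64M`, each read from `/dev/urandom` returned only 33554431 bytes, so `count=32` wrote 32 × 33554431 = 1073741792 bytes rather than 2 GiB. That does not invalidate the checksum test. If an exact size is wanted, though, GNU dd's `iflag=fullblock` (the option the warning suggests) makes dd keep reading until each block is full. This is a small sketch; `make_random_file` is a hypothetical wrapper.

```sh
# Hypothetical wrapper: create a file of exactly SIZE_MIB mebibytes of random data.
# iflag=fullblock (GNU dd) retries short reads so every 1 MiB block is complete.
make_random_file() {
  path=$1
  size_mib=$2
  dd if=/dev/urandom of="$path" bs=1M count="$size_mib" iflag=fullblock status=none
}
```

For example, `make_random_file Test.flie 1024` writes exactly 1 GiB.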

There are no errors in the GUI after the rebuild.

/dev/md127:

Version : 1.2

Creation Time : Mon Mar 16 21:27:21 2020

Raid Level : raid5

Array Size : 9743319040 (9291.95 GiB 9977.16 GB)

Used Dev Size : 1948663808 (1858.39 GiB 1995.43 GB)

Raid Devices : 6

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Fri May 28 17:29:02 2021

State : clean

Active Devices : 6

Working Devices : 6

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

Consistency Policy : unknown

Name : 33ea55f9:RAID-5-0 (local to host 33ea55f9)

UUID : 04d214c4:ee331e6a:74ca0a04:5e846481

Events : 977

Number Major Minor RaidDevice State

6 8 3 0 active sync /dev/sda3

1 8 19 1 active sync /dev/sdb3

2 8 35 2 active sync /dev/sdc3

3 8 51 3 active sync /dev/sdd3

4 8 67 4 active sync /dev/sde3

5 8 83 5 active sync /dev/sdf3

cat /sys/block/md127/md/mismatch_cnt

0

What other tests can I do?

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

I tried triggering an md repair pass through sysfs:

echo repair > /sys/block/md127/md/sync_action

[ 9012.910037] md: requested-resync of RAID array md127
[ 9012.910043] md: minimum _guaranteed_ speed: 30000 KB/sec/disk.
[ 9012.910045] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for requested-resync.
[ 9012.910053] md: using 128k window, over a total of 1948663808k.
[ 9084.033911] BTRFS warning (device md0): csum failed ino 26800 off 3297280 csum 2509189606 expected csum 3194441580
[ 9084.454904] BTRFS warning (device md0): csum failed ino 26800 off 4055040 csum 1637586726 expected csum 1282422897
[ 9084.581585] BTRFS warning (device md0): csum failed ino 26800 off 6422528 csum 1593964658 expected csum 1961461496
[ 9084.612492] sh (25655): drop_caches: 3
[ 9084.614361] BTRFS warning (device md0): csum failed ino 26800 off 5677056 csum 1932001198 expected csum 1489994020
[ 9084.615384] BTRFS warning (device md0): csum failed ino 26800 off 5677056 csum 1932001198 expected csum 1489994020
[ 9084.622754] BTRFS warning (device md0): csum failed ino 26800 off 7094272 csum 3606228094 expected csum 4246393588
[ 9084.695542] sh (25658): drop_caches: 3
[ 9085.341325] sh (25699): drop_caches: 3
[ 9085.424200] sh (25700): drop_caches: 3
[ 9085.607585] sh (25701): drop_caches: 3
[ 9085.704849] sh (25702): drop_caches: 3
[ 9085.731888] sh (25704): drop_caches: 3
[ 9085.732521] mdcsrepair[25705]: segfault at 1902230 ip 00000000004048df sp 00007ffea4e019b0 error 4 in mdcsrepair[400000+10000]
[ 9087.009513] sh (25731): drop_caches: 3
[ 9087.110047] sh (25732): drop_caches: 3
[ 9087.557204] sh (25762): drop_caches: 3
[ 9087.638523] sh (25766): drop_caches: 3
[ 9101.700580] BTRFS warning (device md0): csum failed ino 26800 off 3297280 csum 2509189606 expected csum 3194441580
[ 9102.140147] BTRFS warning (device md0): csum failed ino 26800 off 6729728 csum 843258588 expected csum 430662742
[ 9102.141347] BTRFS warning (device md0): csum failed ino 26800 off 4530176 csum 4014326161 expected csum 3266211526
[ 9102.142287] BTRFS warning (device md0): csum failed ino 26800 off 4055040 csum 1637586726 expected csum 1282422897
[ 9102.142732] BTRFS warning (device md0): csum failed ino 26800 off 4804608 csum 2561880108 expected csum 3042484091
[ 9103.060502] BTRFS warning (device md0): csum failed ino 26800 off 2076672 csum 188520152 expected csum 651639183
[ 9103.276951] sh (26055): drop_caches: 3
[ 9103.277401] sh (26056): drop_caches: 3
[ 9103.277815] sh (26058): drop_caches: 3
[ 9103.281850] sh (26054): drop_caches: 3
[ 9103.282294] sh (26057): drop_caches: 3
[ 9103.434179] sh (26062): drop_caches: 3
[ 9103.437582] sh (26060): drop_caches: 3
[ 9103.438571] sh (26059): drop_caches: 3
[ 9103.465113] sh (26061): drop_caches: 3
[ 9103.467454] sh (26063): drop_caches: 3
[ 9103.467969] sh (26064): drop_caches: 3
[ 9103.566172] sh (26066): drop_caches: 3
[ 9103.567000] mdcsrepair[26070]: segfault at 1ca6238 ip 00000000004048df sp 00007fffea17d9d0 error 4 in mdcsrepair[400000+10000]
[ 9103.568900] sh (26067): drop_caches: 3
[ 9103.568901] sh (26065): drop_caches: 3
[ 9103.569312] mdcsrepair[26071]: segfault at 1d29220 ip 00000000004048df sp 00007ffcf1ef8270 error 4 in mdcsrepair[400000+10000]
[ 9103.599827] sh (26069): drop_caches: 3
[ 9103.600311] mdcsrepair[26092]: segfault at 25a7228 ip 00000000004048df sp 00007ffd96a6c460 error 4 in mdcsrepair[400000+10000]
[ 9103.639920] BTRFS warning (device md0): csum failed ino 26800 off 7749632 csum 976488093 expected csum 400533962
[ 9103.640132] BTRFS warning (device md0): csum failed ino 26800 off 7749632 csum 976488093 expected csum 400533962
[ 9105.156635] sh (26145): drop_caches: 3
[ 9105.262331] sh (26146): drop_caches: 3
[ 9105.338203] sh (26148): drop_caches: 3
[ 9105.338666] mdcsrepair[26149]: segfault at 14e0228 ip 00000000004048df sp 00007ffd2601bda0 error 4 in mdcsrepair[400000+10000]
[ 9392.955849] BTRFS warning (device md0): csum failed ino 26800 off 3297280 csum 2509189606 expected csum 3194441580
[ 9393.396182] sh (27133): drop_caches: 3
[ 9393.407254] BTRFS warning (device md0): csum failed ino 26800 off 5730304 csum 1721535998 expected csum 1266098857
[ 9393.555932] sh (27134): drop_caches: 3
[ 9393.680572] BTRFS warning (device md0): csum failed ino 26800 off 4276224 csum 2257014538 expected csum 2876039261
[ 9393.705564] sh (27136): drop_caches: 3
[ 9393.705994] mdcsrepair[27138]: segfault at fb1238 ip 00000000004048df sp 00007fffde04bee0 error 4 in mdcsrepair[400000+10000]
[ 9394.203447] BTRFS warning (device md0): csum failed ino 26800 off 5115904 csum 406066205 expected csum 870083223
[ 9394.203615] BTRFS warning (device md0): csum failed ino 26800 off 5115904 csum 406066205 expected csum 870083223
[ 9395.340527] sh (27180): drop_caches: 3
[ 9395.465281] sh (27182): drop_caches: 3
[ 9395.529394] sh (27184): drop_caches: 3
[ 9395.529871] mdcsrepair[27187]: segfault at 134d230 ip 00000000004048df sp 00007ffeebc6fb40 error 4 in mdcsrepair[400000+10000]
[ 9395.831133] sh (27222): drop_caches: 3
[ 9395.950588] sh (27223): drop_caches: 3
[ 9395.951581] sh (27224): drop_caches: 3
[ 9395.980226] sh (27226): drop_caches: 3
[ 9396.077257] sh (27227): drop_caches: 3
[ 9396.077683] mdcsrepair[27229]: segfault at 1b93238 ip 00000000004048df sp 00007fff9c18ac80 error 4 in mdcsrepair[400000+10000]
[11045.200001] BTRFS warning (device md0): csum failed ino 26800 off 2174976 csum 549178347 expected csum 190035297
[11045.294967] BTRFS warning (device md0): csum failed ino 26800 off 4857856 csum 2091937243 expected csum 1364968076
[11045.297180] BTRFS warning (device md0): csum failed ino 26800 off 5017600 csum 455988020 expected csum 918977635
[11045.323613] BTRFS warning (device md0): csum failed ino 26800 off 6045696 csum 3782956367 expected csum 3432053272
[11045.324193] sh (31561): drop_caches: 3
[11045.380741] BTRFS warning (device md0): csum failed ino 26800 off 7426048 csum 3231217454 expected csum 3983792249
[11045.540305] sh (31563): drop_caches: 3
[11046.101205] sh (31610): drop_caches: 3
[11046.200518] sh (31611): drop_caches: 3
[11046.201664] sh (31612): drop_caches: 3
[11046.212111] sh (31613): drop_caches: 3
[11046.212113] sh (31614): drop_caches: 3
[11046.226738] sh (31616): drop_caches: 3
[11046.226747] sh (31615): drop_caches: 3
[11046.238619] sh (31618): drop_caches: 3
[11046.239139] sh (31617): drop_caches: 3
[11046.239189] mdcsrepair[31619]: segfault at 2179228 ip 00000000004048df sp 00007ffdd7ed7ba0 error 4 in mdcsrepair[400000+10000]
[33839.238730] md: md127: requested-resync done.

Afterwards, cat /sys/block/md127/md/mismatch_cnt returned 135008, but the GUI shows no error status.
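One detail about the command used: writing `repair` to `sync_action` rewrites parity wherever it disagrees with the data. After such a pass, `mismatch_cnt` reports how many sectors were rewritten, not how many problems remain. Here that is 135008 sectors of 512 bytes, about 66 MiB. Writing `check` instead reads the whole array and only counts the sectors that would have been rewritten. That is the safer first step when it is unclear whether the data or the parity is wrong. This is a minimal sketch of a read-only check. `md_check`, `MD_SYSFS`, and `MD_POLL` are hypothetical names; the two variables exist only so the function can be tested outside the NAS.

```sh
# Hypothetical helper: start a read-only "check" scrub on an md array,
# wait until it finishes, then print mismatch_cnt.
# MD_SYSFS defaults to /sys/block and is overridable only for testing.
md_check() {
  md=$1
  dir="${MD_SYSFS:-/sys/block}/$md/md"
  # Refuse to start if a resync, recovery, check or repair is already running
  [ "$(cat "$dir/sync_action")" = idle ] || { echo "$md is busy" >&2; return 1; }
  echo check > "$dir/sync_action" || return 1
  # sync_action reads back "check" while the scrub runs and "idle" when done
  while [ "$(cat "$dir/sync_action")" != idle ]; do
    sleep "${MD_POLL:-60}"
  done
  echo "$md mismatch_cnt: $(cat "$dir/mismatch_cnt")"
}
```

For example, run `md_check md127` as root. On an array this size, expect it to take hours: the repair pass above ran from about 9013 s to 33839 s of uptime, roughly 7 hours.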

/dev/md127:

Version : 1.2

Creation Time : Mon Mar 16 21:27:21 2020

Raid Level : raid5

Array Size : 9743319040 (9291.95 GiB 9977.16 GB)

Used Dev Size : 1948663808 (1858.39 GiB 1995.43 GB)

Raid Devices : 6

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun May 30 11:49:08 2021

State : clean

Active Devices : 6

Working Devices : 6

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

Consistency Policy : unknown

Name : 33ea55f9:RAID-5-0 (local to host 33ea55f9)

UUID : 04d214c4:ee331e6a:74ca0a04:5e846481

Events : 979

Number Major Minor RaidDevice State

6 8 3 0 active sync /dev/sda3

1 8 19 1 active sync /dev/sdb3

2 8 35 2 active sync /dev/sdc3

3 8 51 3 active sync /dev/sdd3

4 8 67 4 active sync /dev/sde3

5 8 83 5 active sync /dev/sdf3

The problem still exists:

root@NAS-2:/RAID-5/TEST-FILE# dd if=/dev/urandom of=Test.flie bs=64M count=32
dd: warning: partial read (33554431 bytes); suggest iflag=fullblock
0+32 records in
0+32 records out
1073741792 bytes (1.1 GB, 1.0 GiB) copied, 103.542 s, 10.4 MB/s
root@NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
71b8e1ea63c2d543dd1b521698f1f40b Test.flie
After 5-10 minutes:
root@NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
5952e1d1c6447efbbc4e76b13f090dbd Test.flie

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

It seems incredibly strange that BTRFS would let a file get corrupted like this without throwing BTRFS errors all over the place. The checksums BTRFS stored would no longer match what's on the disk!

Are you absolutely sure there's no application out there touching or modifying the files? Can you also track the file's modify date ($ stat <file>)? Perhaps even turn on auditing? It's a pretty major thing for a file to change silently like that; BTRFS should detect and report corruption as soon as you try to read the file. Unless... maybe you have turned off checksumming on your data volume?

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@DEADDEADBEEF wrote:

Are you absolutely sure there's not some application out there touching/modifying the files? Can you also track the modify date of the file ($ stat <file>)? Perhaps even turn on Auditing?

I am not sure... but I use all services with their default settings and have not installed anything else.

As for $ stat <file>: the file was not modified, but the checksum changed.

root@HQ-NAS-2:/RAID-5/TEST-FILE# dd if=/dev/urandom of=Test.flie bs=64M count=32
dd: warning: partial read (33554431 bytes); suggest iflag=fullblock
0+32 records in
0+32 records out
1073741792 bytes (1.1 GB, 1.0 GiB) copied, 103.885 s, 10.3 MB/s
root@HQ-NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
0542952ac3e7e9d494a26a37c41a6c9e Test.flie
root@HQ-NAS-2:/RAID-5/TEST-FILE# stat Test.flie
File: 'Test.flie'
Size: 1073741792 Blocks: 2097160 IO Block: 4096 regular file
Device: 35h/53d Inode: 1049 Links: 1
Access: (0660/-rw-rw----) Uid: ( 0/ root) Gid: ( 0/ root)
Access: 2021-05-31 10:54:16.835309473 +0300
Modify: 2021-05-31 10:56:00.681735098 +0300
Change: 2021-05-31 10:56:00.681735098 +0300
Birth: -
root@HQ-NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
0542952ac3e7e9d494a26a37c41a6c9e Test.flie
root@HQ-NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
0efe119d6aba0648ba32fc722fd72095 Test.flie
root@HQ-NAS-2:/RAID-5/TEST-FILE# stat Test.flie
File: 'Test.flie'
Size: 1073741792 Blocks: 2097152 IO Block: 4096 regular file
Device: 35h/53d Inode: 1049 Links: 1
Access: (0660/-rw-rw----) Uid: ( 0/ root) Gid: ( 0/ root)
Access: 2021-05-31 10:54:16.835309473 +0300
Modify: 2021-05-31 10:56:00.681735098 +0300
Change: 2021-05-31 10:56:00.681735098 +0300
Birth: -
root@HQ-NAS-2:/RAID-5/TEST-FILE# md5sum Test.flie
d289a229916a49bede053b9cdc778ec6 Test.flie
root@HQ-NAS-2:/RAID-5/TEST-FILE# stat Test.flie
File: 'Test.flie'
Size: 1073741792 Blocks: 2097152 IO Block: 4096 regular file
Device: 35h/53d Inode: 1049 Links: 1
Access: (0660/-rw-rw----) Uid: ( 0/ root) Gid: ( 0/ root)
Access: 2021-05-31 10:54:16.835309473 +0300
Modify: 2021-05-31 10:56:00.681735098 +0300
Change: 2021-05-31 10:56:00.681735098 +0300
Birth: -

@DEADDEADBEEF wrote:

Unless... maybe you have turned off checksumming on your data volume?

Where can checksumming be turned on or off for the data volume?

Checksum was turned on only in this box.
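For reference, btrfs keeps data checksums by default and turns them off in two ways: for a whole mount with the `nodatasum` or `nodatacow` mount options, and per file with the No_COW attribute (`chattr +C`), which new files inherit from their directory. How ReadyNAS maps its share settings (Bit-rot Protection, copy-on-write) onto these is an assumption here; this thread does not confirm it. If `/RAID-5/TEST-FILE` carried the `C` attribute, btrfs would have no data checksums to verify for `Test.flie`. That could explain why no csum warning was ever logged for it. This is a hypothetical helper, `csum_status`, that inspects both settings using `findmnt` (util-linux) and `lsattr` (e2fsprogs).

```sh
# Hypothetical helper: show whether btrfs data checksums are likely disabled
# for PATH, either by mount option or by the No_COW (C) file attribute.
csum_status() {
  path=$1
  opts=$(findmnt -n -o OPTIONS --target "$path" 2>/dev/null) || opts=""
  case ",$opts," in
    *,nodatasum,*|*,nodatacow,*) echo "mount: data checksums disabled ($opts)" ;;
    *) echo "mount: no nodatasum/nodatacow option" ;;
  esac
  # lsattr prints the flag string first, e.g. "---------------C------ /RAID-5/TEST-FILE"
  flags=$(lsattr -d "$path" 2>/dev/null | cut -d' ' -f1)
  case $flags in
    *C*) echo "attr: No_COW (C) set; no data checksums for this file or new files in this directory" ;;
    *) echo "attr: C not set" ;;
  esac
}
```

For example, run `csum_status /RAID-5/TEST-FILE` and `csum_status /RAID-5/TEST-FILE/Test.flie`.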

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@StephenB wrote:

@Roman304 wrote:

What log file do you recommend watching?

system.log, kernel.log, and system-journal.log

In kernel.log:

May 30 14:35:29 NAS-2 kernel: mdcsrepair[13627]: segfault at 2325238 ip 00000000004048df sp 00007fff08ffa500 error 4 in mdcsrepair[400000+10000]
May 30 14:35:29 NAS-2 kernel: sh (13626): drop_caches: 3
May 30 14:35:29 NAS-2 kernel: sh (13625): drop_caches: 3
May 30 14:35:29 NAS-2 kernel: mdcsrepair[13639]: segfault at 1cd6230 ip 00000000004048df sp 00007ffeba060c50 error 4 in mdcsrepair[400000+10000]
May 30 14:35:29 NAS-2 kernel: mdcsrepair[13640]: segfault at bfe230 ip 00000000004048df sp 00007ffe6f442530 error 4 in mdcsrepair[400000+10000]
May 30 14:35:30 NAS-2 kernel: sh (13647): drop_caches: 3
May 30 14:35:30 NAS-2 kernel: sh (13648): drop_caches: 3
May 30 17:47:16 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 6852608 csum 846329252 expected csum 429493038
May 30 17:47:16 NAS-2 kernel: sh (9521): drop_caches: 3
May 30 17:47:17 NAS-2 kernel: sh (9522): drop_caches: 3
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 2220032 csum 4138936066 expected csum 3680012373
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 5521408 csum 988873707 expected csum 286883169
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 5603328 csum 2031798327 expected csum 1425106784
May 30 20:41:32 NAS-2 kernel: sh (2563): drop_caches: 3
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 6266880 csum 1389706193 expected csum 2134807686
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 6754304 csum 1977957496 expected csum 1477350191
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 6754304 csum 1977957496 expected csum 1477350191
May 30 20:41:32 NAS-2 kernel: sh (2567): drop_caches: 3
May 30 20:41:32 NAS-2 kernel: sh (2569): drop_caches: 3
May 30 20:41:32 NAS-2 kernel: mdcsrepair[2571]: segfault at 1fd5230 ip 00000000004048df sp 00007ffe294f0c30 error 4 in mdcsrepair[400000+10000]

What does the mdcsrepair segfault mean?

I found problems in the logs on the root device /dev/md0.

In system-journal.log:

May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 2220032 csum 4138936066 expected csum 3680012373
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 622764032 4096 fdd84c09 aa77a724 //var/readynasd/db.sq3
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 5521408 csum 988873707 expected csum 286883169
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 5603328 csum 2031798327 expected csum 1425106784
May 30 20:41:32 NAS-2 kernel: sh (2563): drop_caches: 3
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 6266880 csum 1389706193 expected csum 2134807686
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 6754304 csum 1977957496 expected csum 1477350191
May 30 20:41:32 NAS-2 kernel: BTRFS warning (device md0): csum failed ino 26800 off 6754304 csum 1977957496 expected csum 1477350191
May 30 20:41:32 NAS-2 kernel: sh (2567): drop_caches: 3
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepair: repairing /dev/md0 @ 622764032 [//var/readynasd/db.sq3] succeeded.
May 30 20:41:32 NAS-2 kernel: sh (2569): drop_caches: 3
May 30 20:41:32 NAS-2 kernel: mdcsrepair[2571]: segfault at 1fd5230 ip 00000000004048df sp 00007ffe294f0c30 error 4 in mdcsrepair[400000+10000]
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 626065408 4096 14fc0ec5 9e82e6ee //var/readynasd/db.sq3
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 626147328 4096 c837e586 9f980eab //var/readynasd/db.sq3
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 626810880 4096 2ec42aad 796bc180 //var/readynasd/db.sq3
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 627298304 4096 87c31a8a d06cf1a7 //var/readynasd/db.sq3
May 30 20:41:33 NAS-2 kernel: sh (2616): drop_caches: 3
May 30 20:41:33 NAS-2 kernel: sh (2617): drop_caches: 3
May 30 20:41:33 NAS-2 mdcsrepaird[2862]: mdcsrepair: designated data mismatches bad checksum /dev/md0 @ 626065408
May 30 20:41:33 NAS-2 kernel: sh (2620): drop_caches: 3
May 30 20:41:33 NAS-2 kernel: sh (2619): drop_caches: 3
May 30 20:41:33 NAS-2 mdcsrepaird[2862]: mdcsrepair: designated data mismatches bad checksum /dev/md0 @ 627298304
May 30 20:41:33 NAS-2 kernel: sh (2618): drop_caches: 3
May 30 20:41:33 NAS-2 kernel: sh (2622): drop_caches: 3
May 30 20:41:33 NAS-2 mdcsrepaird[2862]: mdcsrepair: designated data mismatches bad checksum /dev/md0 @ 626147328
May 30 20:41:33 NAS-2 kernel: sh (2621): drop_caches: 3
May 30 20:41:33 NAS-2 kernel: sh (2623): drop_caches: 3
May 30 20:41:33 NAS-2 mdcsrepaird[2862]: mdcsrepair: designated data mismatches bad checksum /dev/md0 @ 626810880

Can we conclude that my device is completely defective and the operating system does not work correctly? What can I do about it?

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@Roman304 wrote:

Can we conclude that my device is completely defective and the operating system does not work correctly? What can I do about it?

It looks that way to me. The csum errors say that files are damaged in the OS partition, and the segmentation faults mean that the repair attempt was either reading or writing to an illegal memory location.

Failing memory is a possibility, and could explain the symptoms. You could try running the memory test from the boot menu. Note the test will keep running until it finds an error. I'd stop it at 3 passes (unless of course it finds an error).

How much memory do you have in the Pro-6?

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@StephenB wrote:

@Roman304 wrote:

Can we conclude that my device is completely defective and the operating system does not work correctly? What can I do about it?

It looks that way to me. The csum errors say that files are damaged in the OS partition, and the segmentation faults mean that the repair attempt was either reading or writing to an illegal memory location.

Failing memory is a possibility, and could explain the symptoms. You could try running the memory test from the boot menu. Note the test will keep running until it finds an error. I'd stop it at 3 passes (unless of course it finds an error).

How much memory do you have in the Pro-6?

2 x 1 GB DDR2 modules, 2056 MB RAM in total.

I tested it with MemTest86 v5 booted from USB: 7 passes, no errors.

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

I can only imagine this being a memory issue; it seems seriously strange to me that btrfs is not throwing errors all over the place if a file on disk was suddenly changed!

I wonder if the file is actually fine and something goes wrong while md5sum calculates the checksum.

Does it only happen with these large files? What if you create a file with some normal text content like "this is a file"? If the checksum changes for such a file, it would be interesting to compare the contents before and after.

Can you try copying the file off the NAS via SMB and then checking the checksum on the machine that downloaded it? Does it match the "new" checksum or the original?
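Comparing the contents before and after does not require keeping a second 1 GiB copy. Hashing the file in fixed-size chunks and diffing two runs shows which regions changed and how many did. This is a sketch: `chunk_hashes` is a hypothetical helper, and the 16 MiB default chunk size is arbitrary.

```sh
# Hypothetical helper: print "INDEX MD5" for each CHUNK_MIB-sized chunk of FILE.
# Diffing the output of two runs shows which chunks changed between reads.
chunk_hashes() {
  file=$1
  chunk_mib=${2:-16}
  size=$(stat -c %s "$file") || return 1
  chunk_bytes=$((chunk_mib * 1024 * 1024))
  # Round up so a partial last chunk is included
  chunks=$(( (size + chunk_bytes - 1) / chunk_bytes ))
  i=0
  while [ "$i" -lt "$chunks" ]; do
    sum=$(dd if="$file" bs="${chunk_mib}M" skip="$i" count=1 status=none | md5sum | cut -d' ' -f1)
    echo "$i $sum"
    i=$((i + 1))
  done
}
```

For example, run `chunk_hashes Test.flie > before.txt`, wait, run `chunk_hashes Test.flie > after.txt`, and then `diff before.txt after.txt`.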

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

This is also a concern:

May 30 20:41:32 NAS-2 kernel: mdcsrepair[2571]: segfault at 1fd5230 ip 00000000004048df sp 00007ffe294f0c30 error 4 in mdcsrepair[400000+10000]
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 626065408 4096 14fc0ec5 9e82e6ee //var/readynasd/db.sq3
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 626147328 4096 c837e586 9f980eab //var/readynasd/db.sq3
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 626810880 4096 2ec42aad 796bc180 //var/readynasd/db.sq3
May 30 20:41:32 NAS-2 mdcsrepaird[2862]: mdcsrepaird: mdcsrepair /dev/md0 627298304 4096 87c31a8a d06cf1a7 //var/readynasd/db.sq3

If you don't think it's hardware, then I suggest doing a factory reset and rebuilding the NAS. Then run your md5sum test again and see whether you get the same result.

A variant here is to remove the disks (labeling them by slot) and then do a fresh install on a scratch disk. Then run the test. But given the issues in the OS partition (/dev/md0), I think you really do need a clean install.

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@DEADDEADBEEF wrote:

I can only imagine this being a memory issue; it seems seriously strange to me that btrfs is not throwing errors all over the place if a file on disk was suddenly changed!

I wonder if the file is actually fine and something goes wrong while md5sum calculates the checksum.

Does it only happen with these large files? What if you create a file with some normal text content like "this is a file"? If the checksum changes for such a file, it would be interesting to compare the contents before and after.

Can you try copying the file off the NAS via SMB and then checking the checksum on the machine that downloaded it? Does it match the "new" checksum or the original?

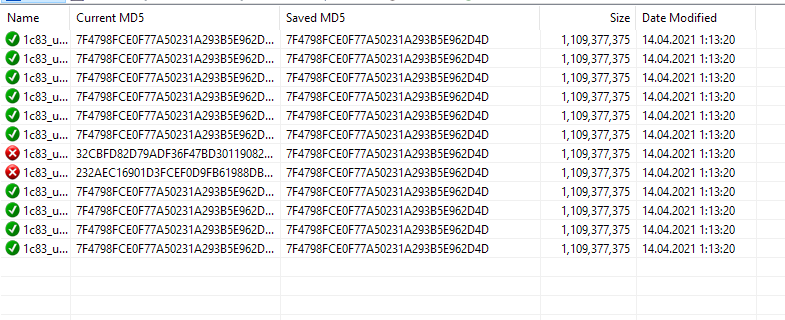

root@NAS-2:/RAID-5/TEST-FILE# dd if=/dev/urandom of=TestBigFile bs=64M count=32
dd: warning: partial read (33554431 bytes); suggest iflag=fullblock
0+32 records in
0+32 records out
1073741792 bytes (1.1 GB, 1.0 GiB) copied, 103.844 s, 10.3 MB/s
root@NAS-2:/RAID-5/TEST-FILE# md5sum TestBigFile
8e90ba249e061fa5ca6ec60571435383 TestBigFile
root@NAS-2:/RAID-5/TEST-FILE# echo 'This is test file' > test.txt
root@NAS-2:/RAID-5/TEST-FILE# md5sum test.txt
6a9a70f9784007effeaa7da7800fa430 test.txt
root@NAS-2:/RAID-5/TEST-FILE# md5sum TestBigFile
473d08a0b8032687ef5a1970e040b731 TestBigFile
root@NAS-2:/RAID-5/TEST-FILE# md5sum test.txt
6a9a70f9784007effeaa7da7800fa430 test.txt

After 10 minutes, the checksum of the small file had not changed, but the big file was corrupted (its checksum changed).

Re: Checksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

I wiped all disks, did a clean install, and upgraded to version 6.10.5.

There are no more "mdcsrepair" errors now, but there are NEW errors :(

[ 34.581568] Adding 523260k swap on /dev/md1. Priority:-1 extents:1 across:523260k
[ 199.391252] md: bind<sda1>
[ 200.553853] md: bind<sdc1>
[ 201.487715] md: bind<sdd1>
[ 202.385661] md: bind<sde1>
[ 203.268166] md: bind<sdf1>
[ 204.258426] md1: detected capacity change from 535822336 to 0
[ 204.258434] md: md1 stopped.
[ 204.258440] md: unbind<sdb2>
[ 204.263050] md: export_rdev(sdb2)
[ 204.394791] md: bind<sda2>
[ 204.394985] md: bind<sdb2>
[ 204.395552] md: bind<sdc2>
[ 204.395783] md: bind<sdd2>
[ 204.395959] md: bind<sde2>
[ 204.396345] md: bind<sdf2>
[ 204.415087] md/raid10:md1: not clean -- starting background reconstruction
[ 204.415094] md/raid10:md1: active with 6 out of 6 devices
[ 204.417162] md1: detected capacity change from 0 to 1604321280
[ 204.423052] md: resync of RAID array md1
[ 204.423057] md: minimum _guaranteed_ speed: 1000 KB/sec/disk.
[ 204.423060] md: using maximum available idle IO bandwidth (but not more than 1000 KB/sec) for resync.
[ 204.423067] md: using 128k window, over a total of 1566720k.
[ 204.776747] Adding 1566716k swap on /dev/md1. Priority:-1 extents:1 across:1566716k
[ 205.411408] md: bind<sda3>
[ 205.463247] md: bind<sdb3>
[ 205.463469] md: bind<sdc3>
[ 205.463657] md: bind<sdd3>
[ 205.463830] md: bind<sde3>
[ 205.463970] md: bind<sdf3>
[ 205.465330] md/raid:md127: not clean -- starting background reconstruction
[ 205.465358] md/raid:md127: device sdf3 operational as raid disk 5
[ 205.465361] md/raid:md127: device sde3 operational as raid disk 4
[ 205.465364] md/raid:md127: device sdd3 operational as raid disk 3
[ 205.465367] md/raid:md127: device sdc3 operational as raid disk 2
[ 205.465370] md/raid:md127: device sdb3 operational as raid disk 1
[ 205.465372] md/raid:md127: device sda3 operational as raid disk 0
[ 205.466449] md/raid:md127: allocated 6474kB
[ 205.466537] md/raid:md127: raid level 5 active with 6 out of 6 devices, algorithm 2
[ 205.466715] md127: detected capacity change from 0 to 9977158696960
[ 205.466828] md: resync of RAID array md127
[ 205.466831] md: minimum _guaranteed_ speed: 30000 KB/sec/disk.
[ 205.466833] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for resync.
[ 205.466839] md: using 128k window, over a total of 1948663808k.
[ 206.468138] md: recovery of RAID array md0
[ 206.468142] md: minimum _guaranteed_ speed: 30000 KB/sec/disk.
[ 206.468145] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for recovery.
[ 206.468163] md: using 128k window, over a total of 4190208k.
[ 207.068749] BTRFS: device label 33ea55f9:RAID-5 devid 1 transid 5 /dev/md/RAID-5-0
[ 207.536727] BTRFS info (device md127): has skinny extents
[ 207.536733] BTRFS info (device md127): flagging fs with big metadata feature
[ 207.753158] BTRFS info (device md127): checking UUID tree
[ 207.779143] BTRFS info (device md127): quota is enabled
[ 208.539681] BTRFS info (device md127): qgroup scan completed (inconsistency flag cleared)
[ 212.002057] BTRFS info (device md127): new type for /dev/md127 is 2
[ 253.293798] md: md1: resync done.
[ 879.835567] md: md0: recovery done.
[29222.629724] md: md127: resync done.
[76853.949311] md: requested-resync of RAID array md0
[76853.949316] md: minimum _guaranteed_ speed: 30000 KB/sec/disk.
[76853.949319] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for requested-resync.
[76853.949325] md: using 128k window, over a total of 4190208k.
[76860.989358] md: requested-resync of RAID array md1
[76860.989364] md: minimum _guaranteed_ speed: 30000 KB/sec/disk.
[76860.989367] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for requested-resync.
[76860.989373] md: using 128k window, over a total of 1566720k.
[76869.627690] md: md1: requested-resync done.
[76968.845656] md: md0: requested-resync done.
[77284.591955] BTRFS warning (device md127): csum failed ino 259 off 1257472 csum 2226542714 expected csum 2841507629
[77284.592253] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.599233] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.626801] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.627575] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.628600] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.629590] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.632721] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.633958] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.640105] BTRFS warning (device md127): csum failed ino 259 off 876544 csum 2896134451 expected csum 2171874916
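Each `csum failed` line means btrfs read a data block whose checksum did not match the one stored in its metadata. btrfs sees only one device here (md127), so it normally has no second copy of the data to repair from, and the read fails with an I/O error instead; that matches the Input/output error from md5sum reported below. A minimal sketch for condensing such warnings into one line per inode and offset (the function name is hypothetical; it assumes one kernel message per line, as `dmesg` prints them):

```shell
#!/bin/sh
# Hypothetical helper: condense btrfs "csum failed" kernel warnings.
# Reads kernel log text on stdin (one message per line, as dmesg prints it)
# and prints "<count> <inode> <byte offset>" for each distinct pair.
# Usage on the NAS:  dmesg | summarize_csum_failures
summarize_csum_failures() {
    sed -n 's/.*csum failed.* ino \([0-9]*\) off \([0-9]*\).*/\1 \2/p' |
        sort | uniq -c
}
```

In the log above all ten warnings point at inode 259, i.e. a single file. `btrfs inspect-internal inode-resolve 259 /RAID-5/TEST` should print its path, assuming the share is its own btrfs subvolume (inode numbers are only unique within a subvolume).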

When I try to copy files via SMB, I get an error in Windows.

(I had copied some files to the NAS first.)

And when I try to compute a checksum, I get an I/O error.

root@nas-2:/RAID-5/TEST# dd if=/dev/urandom of=Test.flie bs=64M count=32
dd: warning: partial read (33554431 bytes); suggest iflag=fullblock
0+32 records in
0+32 records out
1073741792 bytes (1.1 GB, 1.0 GiB) copied, 104.606 s, 10.3 MB/s

root@nas-2:/RAID-5/TEST# md5sum Test.flie
d72ea9d9c3c2641612de18c42deadccb Test.flie

root@nas-2:/RAID-5/TEST# md5sum Test.flie
d72ea9d9c3c2641612de18c42deadccb Test.flie

root@nas-2:/RAID-5/TEST# md5sum Test.flie
d72ea9d9c3c2641612de18c42deadccb Test.flie

root@nas-2:/RAID-5/TEST# md5sum Test.flie
d72ea9d9c3c2641612de18c42deadccb Test.flie

I uploaded some files to the NAS and tried to download them via SMB, which gave an error. Checking the test file again:

root@nas-2:/RAID-5/TEST# md5sum Test.flie
md5sum: Test.flie: Input/output error

root@nas-2:/RAID-5/TEST# md5sum Test.flie
md5sum: Test.flie: Input/output error
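Since btrfs returns an I/O error rather than data that fails its checksum (as md5sum shows here), reading every file end to end is a crude way to list which files are affected. A minimal sketch with a hypothetical function name; it only detects unreadable files and cannot say which disk is at fault. `btrfs scrub start -B <mountpoint>` is the built-in alternative, which verifies every checksum on the volume.

```shell
#!/bin/sh
# Hypothetical helper: print every regular file under directory $1 that
# cannot be read completely (e.g. reading fails with an I/O error).
find_unreadable() {
    find "$1" -type f -exec sh -c '
        for f do
            # Read the whole file; print its name if any read fails.
            cat -- "$f" > /dev/null 2>&1 || printf "%s\n" "$f"
        done
    ' sh {} +
}
```

For example, `find_unreadable /RAID-5/TEST` would be expected to list Test.flie here.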

Re: Сhecksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

@Roman304 wrote:

Made clean install and upgrade to version 6.10.5

Do you mean a factory default (or new factory install)? Or something a bit different?

@Roman304 wrote:

but NEW errors (((

[77284.591955] BTRFS warning (device md127): csum failed ino 259 off 1257472 csum 2226542714 expected csum 2841507629
[77284.592253] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.599233] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.626801] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.627575] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.628600] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.629590] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.632721] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.633958] BTRFS warning (device md127): csum failed ino 259 off 471040 csum 833631200 expected csum 475765943
[77284.640105] BTRFS warning (device md127): csum failed ino 259 off 876544 csum 2896134451 expected csum 2171874916

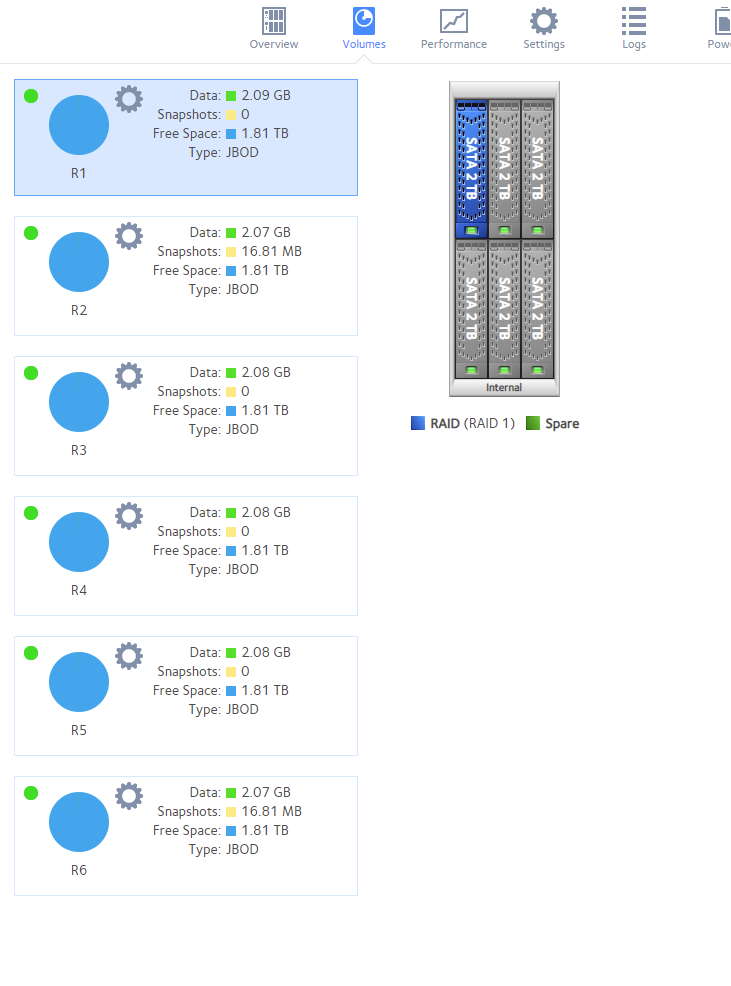

Something is definitely still wrong. I expect that disabling volume checksums will clear the SMB error, but I think you will still find corrupted data in the files.

I am wondering if this is disk-related. If you are willing to do another factory default, you could set up JBOD volumes (one per disk) and see whether one particular disk is producing the checksum errors.
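With one JBOD volume per disk, the same write/re-read check can be run on every volume in turn. A minimal sketch: the scratch file name and the `SIZE_MB` knob are hypothetical, the volume paths are placeholders (ReadyNAS OS appears to mount each volume at /<volume name>, as /RAID-5 is here), and it must run as root for the cache drop to take effect. It drops the page cache instead of waiting 10 minutes; that the wait mattered only because of caching is an assumption.

```shell
#!/bin/sh
# Hypothetical sketch: run the write / re-read check once per volume.
# Arguments are volume mount points; SIZE_MB (default 256) is an assumed knob.
test_volumes() {
    for vol in "$@"; do
        f="$vol/csum-test.bin"          # assumed scratch file name
        if ! dd if=/dev/urandom of="$f" bs=1M count="${SIZE_MB:-256}" \
                iflag=fullblock 2>/dev/null; then
            echo "$vol WRITE-ERROR"; continue
        fi
        before=$(md5sum < "$f") || { echo "$vol READ-ERROR"; continue; }
        sync
        # Drop the page cache (root only) so the re-read hits the disks.
        { echo 3 > /proc/sys/vm/drop_caches; } 2>/dev/null || :
        after=$(md5sum < "$f") || { echo "$vol READ-ERROR"; continue; }
        if [ "$before" = "$after" ]; then
            echo "$vol OK"
            rm -f "$f"
        else
            echo "$vol MISMATCH"        # file kept for inspection
        fi
    done
}
```

Run it with the real mount point of each JBOD volume as arguments; a volume that reports MISMATCH or READ-ERROR points at the disk (or slot) behind it.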

Re: Сhecksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

Something is seriously wrong with the system, either the disks or the memory; I'm leaning towards memory.

If you have a blank disk, could you do a factory default with only that disk installed and then see whether the same behavior occurs on a clean single-disk install? If so, it's almost certainly memory (though I suppose it could also be the disk controller or the motherboard).

Re: Сhecksum errors in files on RAID5 Netgear ReadyNAS Pro 6 RNDP6000

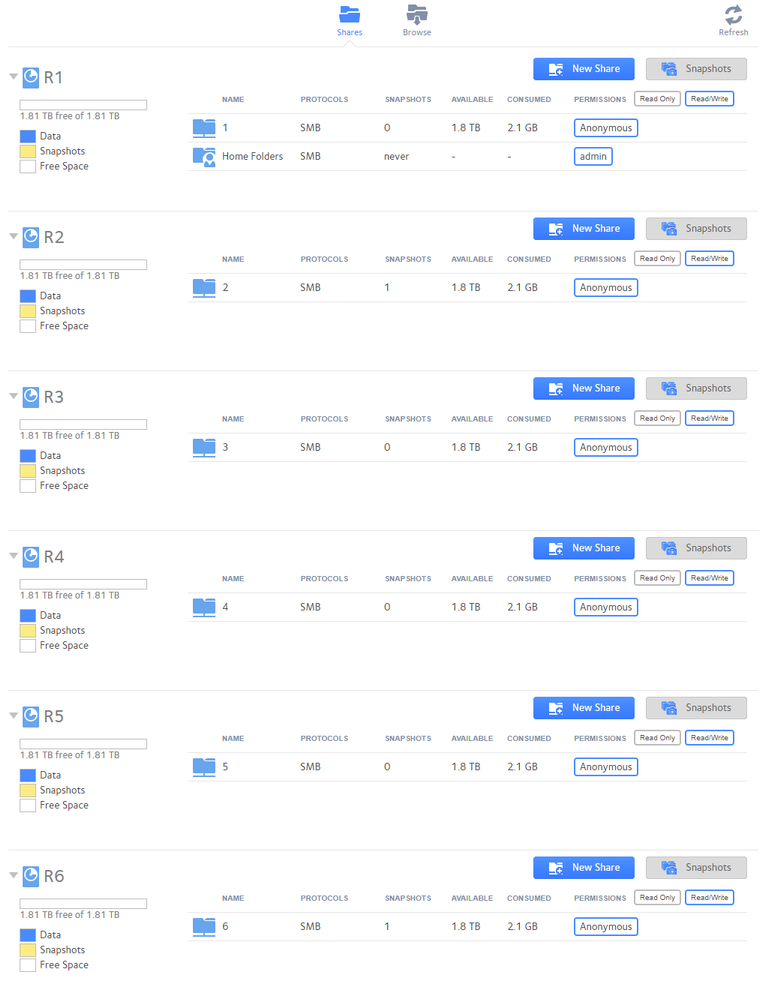

I set up a separate JBOD volume on each drive. Volume number 4 (RAID 4) gets checksum errors, but there are no errors in dmesg.

Every 2.0s: cat /proc/mdstat Fri Jun 4 11:30:16 2021

Personalities : [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]

md122 : active raid1 sdf3[0]

1948663808 blocks super 1.2 [1/1] [U]

md123 : active raid1 sde3[0]

1948663808 blocks super 1.2 [1/1] [U]

md124 : active raid1 sdd3[0]

1948663808 blocks super 1.2 [1/1] [U]

md125 : active raid1 sdc3[0]

1948663808 blocks super 1.2 [1/1] [U]

md126 : active raid1 sdb3[0]

1948663808 blocks super 1.2 [1/1] [U]

md127 : active raid1 sda3[0]

1948663808 blocks super 1.2 [1/1] [U]

md1 : active raid10 sda2[0] sdf2[5] sde2[4] sdd2[3] sdc2[2] sdb2[1]

1566720 blocks super 1.2 512K chunks 2 near-copies [6/6] [UUUUUU]

md0 : active raid1 sdf1[6] sdb1[2](S) sdc1[3](S) sdd1[4](S) sde1[5](S) sda1[1]

4190208 blocks super 1.2 [2/2] [UU]

unused devices: <none>
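To tie a volume back to a physical disk, the member list in /proc/mdstat can be condensed to one line per array. A minimal sketch with a hypothetical function name; it assumes the usual mdstat layout shown above. On this firmware the md device for a volume also seems to appear under /dev/md/ with the volume name (the earlier log shows /dev/md/RAID-5-0), so `ls -l /dev/md/` should show which mdNNN belongs to which volume, and `smartctl -i /dev/sdX` can then print that disk's serial number, assuming smartmontools is installed.

```shell
#!/bin/sh
# Hypothetical helper: condense /proc/mdstat-style text (stdin) to one line
# per array: "<md device> <level> <member partitions...>".
# Usage on the NAS:  md_members < /proc/mdstat
md_members() {
    awk '/^md[^ ]* : / {
        level = "?"; devs = ""
        for (i = 3; i <= NF; i++) {
            if ($i ~ /^(raid[0-9]+|linear)$/) {
                level = $i
            } else if ($i ~ /\[[0-9]+\]/) {
                d = $i
                sub(/\[.*/, "", d)   # drop the "[slot]" and any "(S)"/"(F)" flag
                devs = devs " " d
            }
        }
        print $1, level devs
    }'
}
```

In the output above, for instance, md124 is built on sdd3 alone and md127 on sda3 alone.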

@Roman304 wrote:

I have partitioned jbod raid drives into each drive. RAID number 4 checksum error.

That's a clear indication that your issue is either linked to that disk or to that slot. The next step is to figure out which.

I suggest destroying RAID 1,2,5,6, and removing those disks. Then power down the NAS, and swap RAID 3 and RAID 4. Power up and re-run the test on both volumes. That will let you know if the problem is linked to the disk or the slot.

If the problem disappears on both disks, then it could be power-related. You can confirm that by adding the removed disks back one at a time and seeing when the problem starts happening again.
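The reasoning behind the swap test can be written down as a small decision table. A sketch only: the function name and argument convention are hypothetical, and the case where both disks show errors after the swap is not covered in the reply above, so it is reported as inconclusive here.

```shell
#!/bin/sh
# Hypothetical helper encoding the disk-vs-slot swap test.
# $1: result for the suspect disk (formerly RAID 4), now in the other slot
# $2: result for the other disk, now in the suspect slot
# Each argument is "fail" (checksum errors seen) or "ok".
interpret_swap() {
    case "$1,$2" in
        fail,ok)   echo "disk" ;;           # error followed the disk
        ok,fail)   echo "slot" ;;           # error stayed with the slot
        ok,ok)     echo "power?" ;;         # gone on both: re-add disks one at a time
        fail,fail) echo "inconclusive" ;;   # not covered by the reply
        *)         echo "usage: interpret_swap ok|fail ok|fail" >&2; return 2 ;;
    esac
}
```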